Lead Data Engineer (Snowflake) with English

Babel Profiles

2 days ago

Role details

Contract type: Permanent contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Experience level: Senior

Job location

Tech stack

Artificial Intelligence

Azure

Code Review

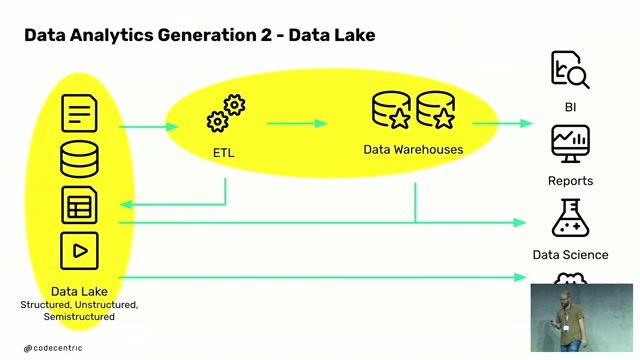

ETL

Data Transformation

Data Vault Modeling

Data Warehousing

Software Design Patterns

Interoperability

Python

Online Analytical Processing

Online Transaction Processing

Performance Tuning

Query Optimization

SQL Databases

Pulumi

Snowflake

Cloudformation

Data Lake

Information Technology

Terraform

Data Pipelines

Job description

- Lead the architecture and technical direction of the client's cloud Data Warehouse and Data Lake environment, ensuring scalable and reliable solutions built on Snowflake and DBT

- Design and implement robust data models (including Data Vault 2.0 and dimensional models), ensuring interoperability and support for analytics, reporting, and AI use cases

- Build, optimize, and maintain ELT/ETL pipelines using DBT, SQL, and Python, applying best practices for data quality, performance, automation, and monitoring

- Drive end-to-end delivery of cloud data products, from gathering requirements and defining technical designs to implementation, testing, deployment, and ongoing enhancements

- Collaborate with data product managers, analytics teams, and platform engineers to translate business needs into scalable technical solutions

- Oversee operational excellence by ensuring proper documentation, governance, performance tuning, and adherence to architectural standards

- Mentor junior engineers, conduct code reviews, and help foster a strong engineering culture focused on continuous improvement and knowledge sharing

Requirements

- Master's degree in Computer Science, Information Technology, Engineering, or a related field

- 8+ years of experience as a Snowflake Developer

- 10+ years of experience in data modeling (Data Vault 2.0, OLTP, OLAP)

- Expert-level knowledge of SQL and hands-on experience in Python for data transformation and automation

- Strong understanding of Data Warehouse and Data Lake concepts, architectures, and design patterns

- Practical experience building and managing data pipelines using DBT

- Familiarity with performance tuning techniques in Snowflake, including query optimization

- Hands-on experience with CI/CD pipelines, ideally with Azure DevOps

- Exposure to Infrastructure as Code tools (Terraform, CloudFormation, Pulumi, etc.)

- Experience working in regulated industries or structured delivery environments

- Strong communication skills and the ability to influence technical direction

Benefits & conditions

- Pension plan

- Life and Accident Insurance

- Location: preference for Madrid/Barcelona, but open to remote from Spain

- Health Insurance

- Restaurant Vouchers

- Employee and Family Psychological Support Program

- LinkedIn Learning Subscription: access to over 15,000 courses with certification

- Commercial discounts across hundreds of brands

- Many more (Christmas gift packages, Nursery vouchers, etc.)

Our recruitment process

- Step 1: Interview with our Recruiter to get to know you better

- Step 2: Screening with HR team

- Step 3: Interview with the Hiring Manager

- Step 4: Technical interview

About the company

We are working with a global leader in the pharmaceutical and life sciences industry, renowned for their commitment to innovation and improving patient outcomes. This German multinational with 60,000+ employees in 66 countries offers a unique opportunity to transform the world through healthcare, life sciences, and performance materials.

Our client is strengthening their cloud-driven data and analytics landscape. They are looking for a Technical Lead with deep expertise in Data Warehousing, Data Lakes, Snowflake, and DBT to guide the technical direction of their modern data platform.

In this role, you will lead the end-to-end lifecycle of cloud data products, from architecture and modeling to pipeline development, performance optimization, and automation. You will act as a hands-on Subject Matter Expert, mentoring developers and ensuring the delivery of scalable, secure, and high-quality data solutions that power analytics, reporting, and AI use cases.

This is a high-impact position for someone who enjoys designing cloud-native data platforms, driving technical excellence, and shaping data engineering best practices in a rapidly evolving environment.

Key languages

- Full professional level of English