AWS/dbt Data Engineer

Job description

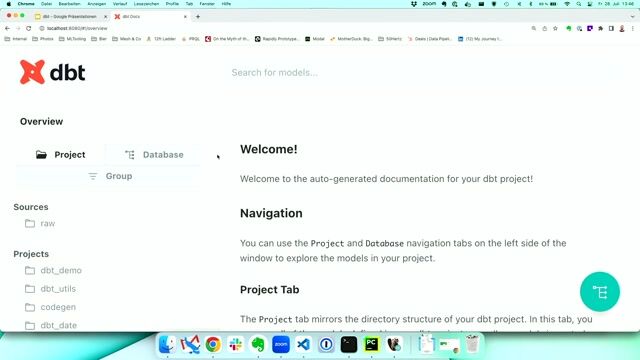

Customer is seeking a hands-on Data Engineer to design, build, and operate modern ELT/ETL workflows on AWS. The ideal candidate is exceptionally strong in SQL, data modeling (Data Vault, star/snowflake, 3NF, and data mesh concepts), and automation using dbt and shell scripting. You will collaborate with data analysts, architects, and business stakeholders to deliver reliable, governed data products that power analytics and reporting across Alternative Investments.

- Data Pipelines: Design, build, and maintain robust, testable ELT/ETL pipelines on AWS (Redshift, RDS, S3, Lambda), leveraging dbt (Core/Cloud; dbt Docs; ideally Fusion engine) and Informatica where applicable.

- SQL Excellence: Write high-quality SQL (CTEs, window functions, complex joins) for transformations, data quality checks, and performance tuning, primarily targeting Redshift/RDS/Oracle/PostgreSQL.

- Data Modeling: Develop and evolve logical/physical data models (Data Vault, dimensional star/snowflake, 3NF) aligned to data mesh principles and Customer's governance standards.

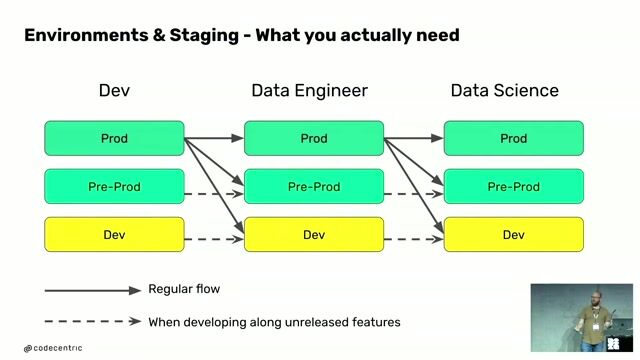

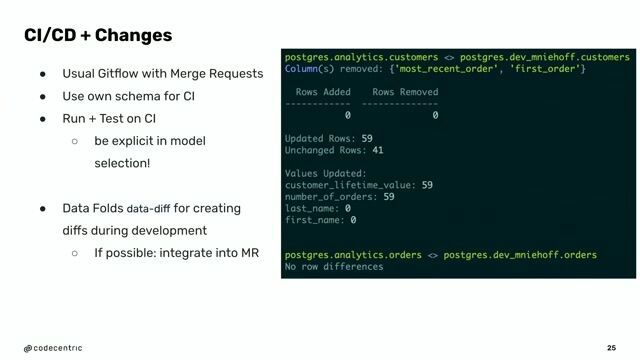

- Automation & Ops: Implement CI/CD with GitHub/GitLab; manage environments, versioning, and documentation; build scripts (bash/shell) for orchestration and housekeeping.

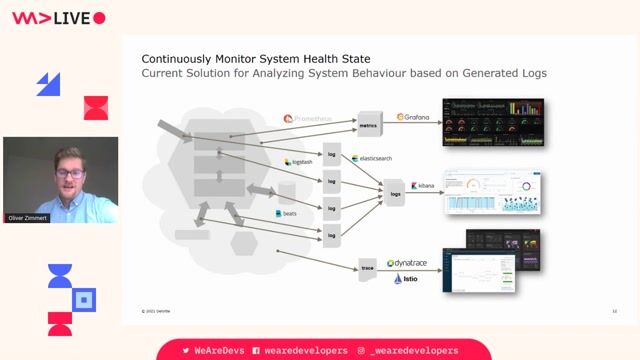

- Quality & Observability: Embed tests (dbt tests, SQL assertions), logging and monitoring fundamentals; ensure data reliability, lineage visibility, and auditable processes.

- Security & Compliance: Collaborate with IAM and platform teams to apply least-privilege access on AWS; follow internal controls and data governance policies.

- Stakeholder Collaboration: Work closely with business and analytics teams (French/English) to translate requirements into scalable data products; provide clear documentation via dbt Docs.

Requirements

- Advanced SQL: Complex queries using CTEs and window functions.

- Bash/Shell Scripting: Automation and operational scripts.

- Data Modeling: Data Vault, star/snowflake, 3NF, and data mesh concepts.

- AWS: Redshift, RDS, S3, Lambda, IAM (hands-on).

- dbt: Core & Cloud, dbt Docs; familiarity with Fusion engine preferred.

- Version Control: GitHub/GitLab (branches, PRs, code reviews).

- Informatica: Experience building/operating data pipelines.

- Databases: Oracle and PostgreSQL.

Nice to Have

- Data Migration: Experience migrating workloads/data to AWS.

- Python: Scripting for utilities, tests, and small transforms.

- Domain Knowledge: Ideally, experience in Alternative Investments (Private Assets and/or Hedge Funds).