Data Engineer (Databricks Specialist)

iO Associates

Kilsby, United Kingdom

2 days ago

Role details

Contract type: Permanent contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Compensation: £143K

Job location: Kilsby, United Kingdom

Tech stack

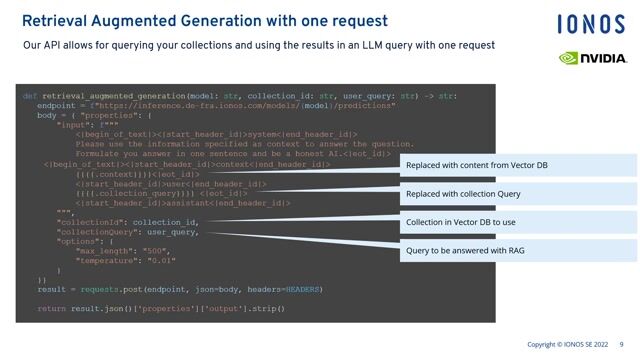

API

Amazon Web Services (AWS)

Automation of Tests

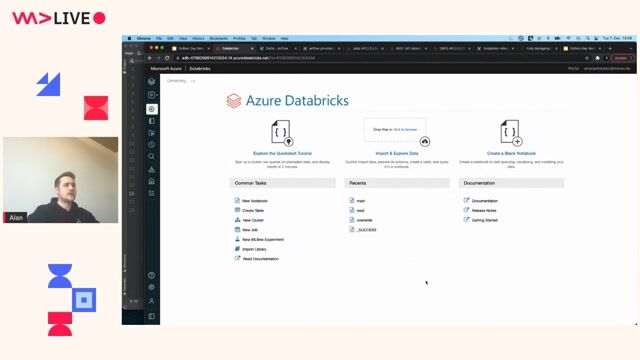

Azure

Databases

Continuous Integration

ETL

Data Transformation

Data Systems

Data Warehousing

File Systems

Python

DataOps

SQL Databases

GIT

Data Lake

PySpark

Data Pipelines

Databricks

Job description

We're seeking an experienced Databricks specialist to design, build, and optimise scalable data pipelines. You'll work closely with business and analytics teams to deliver high-quality, reliable, and well-governed data solutions, while championing modern DataOps practices. This role is remote, but you must be UK-based.

- Build and maintain Databricks-based data pipelines (PySpark, Delta Lake, SQL).

- Integrate data from APIs, databases, and file systems.

- Work with stakeholders to translate requirements into engineering solutions.

- Ensure data quality, reliability, security, and governance.

- Implement Git-based version control, CI/CD, automated testing, and infrastructure as code (IaC).

- Optimise Databricks jobs, clusters, and workflows.

- Document processes and best practices.

Requirements

- Strong experience with Databricks, PySpark, Delta Lake, and SQL.

- Advanced Python and SQL for data transformation.

- Solid understanding of ETL/ELT, data warehousing, and distributed processing.

- Hands-on DataOps experience: Git, CI/CD, testing, and IaC.

- Experience with AWS, Azure, or GCP.

- Strong troubleshooting and performance optimisation skills.

The ideal candidate will be:

- A self-sufficient contractor who is comfortable leading technical decisions.

- A strong communicator who collaborates well with business and analytics teams.

- Delivery-focused, pragmatic, and experienced in fast-moving environments.