Liran Tal

Can Machines Dream of Secure Code? Emerging AI Security Risks in LLM-driven Developer Tools

#1 about 5 minutes

How simple code can hide critical vulnerabilities

A real-world NoSQL injection vulnerability in the popular Rocket.Chat project demonstrates how easily security flaws are overlooked in everyday development.
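
As a rough illustration of this vulnerability class (not the actual Rocket.Chat code, which this summary does not show), the sketch below assumes a MongoDB-style filter built from a JSON request body. The helper name `build_username_filter` and the `username` field are hypothetical.

```python
from typing import Any


def build_username_filter(username: Any) -> dict:
    """Return a MongoDB-style equality filter for a user lookup.

    Hypothetical helper: a JSON body can carry an object such as
    {"$ne": None} where a string is expected, and a NoSQL database
    would treat that object as a query operator rather than a value.
    """
    if not isinstance(username, str):
        raise TypeError("username must be a string")
    # $eq forces a literal comparison against the validated string.
    return {"username": {"$eq": username}}


# A plain string yields an ordinary equality filter.
print(build_username_filter("alice"))

# An operator object smuggled in through JSON is rejected up front.
try:
    build_username_filter({"$ne": None})
except TypeError as err:
    print("rejected:", err)
```

The type check is the important part: the code looks harmless either way, which is why this kind of flaw is easy to miss in review.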

#2 about 3 minutes

The evolution of how developers source their code

Developer workflows have shifted from copying code from Stack Overflow to using npm packages and now to relying on AI-generated code from tools like ChatGPT.

#3 about 3 minutes

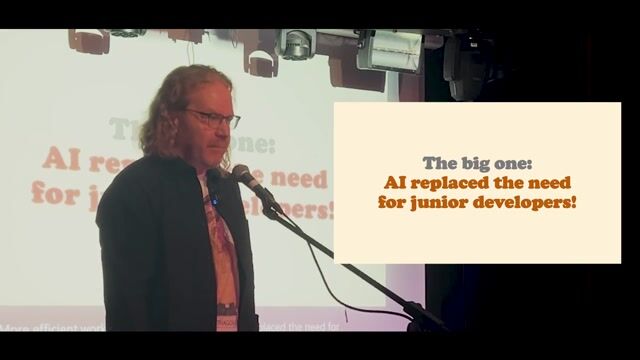

Understanding the fundamental security risks in AI models

AI models introduce unique security challenges, including data poisoning, a lack of explainability, and vulnerability to malicious user inputs.

#4 about 2 minutes

When commercial chatbots are misused for coding tasks

Examples from Amazon and Expedia show how publicly exposed LLM-powered chatbots can be prompted to perform tasks far outside their intended scope, like writing code.

#5 about 8 minutes

How AI code generators create common security flaws

AI tools like ChatGPT can generate functional but insecure code, introducing common vulnerabilities such as path traversal and command injection that developers might miss.
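
The two vulnerability classes named here can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not code from the talk: `resolve_inside` and `echo_argument` are hypothetical helper names, and the child process is just the current Python interpreter echoing its argument.

```python
import subprocess
import sys
from pathlib import Path


def resolve_inside(base_dir: str, user_path: str) -> Path:
    """Resolve user_path under base_dir, refusing anything that escapes it."""
    base = Path(base_dir).resolve()
    candidate = (base / user_path).resolve()
    # After resolving '..' segments, the result must still sit under base.
    if candidate != base and base not in candidate.parents:
        raise ValueError("path escapes the base directory")
    return candidate


def echo_argument(user_text: str) -> str:
    """Pass user_text to a child process as one argument, without a shell."""
    # An argument list (no shell=True) means ';' or '&&' in user_text
    # are never interpreted as command separators.
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_text],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.rstrip("\n")
```

Generated code often works for the happy path while omitting exactly these checks, for example by joining a user-supplied filename onto a directory or by building a shell command with string concatenation.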

#6 about 3 minutes

AI suggestions can create software supply chain risks

LLMs may hallucinate non-existent packages or recommend outdated libraries, creating opportunities for attackers to publish malicious packages and initiate supply chain attacks.
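
One possible guard, sketched here as an assumption rather than a recommendation from the talk, is to compare every suggested dependency against a list the team has already vetted before anything is installed. The function `find_unvetted` and the package name `fast-json-sanitizr` are hypothetical.

```python
def find_unvetted(suggested: list[str], vetted: set[str]) -> list[str]:
    """Return suggested package names that are not on the vetted list."""
    normalized = {name.strip().lower() for name in vetted}
    return [name for name in suggested if name.strip().lower() not in normalized]


# Hypothetical data: "fast-json-sanitizr" stands in for a hallucinated name.
vetted_packages = {"express", "lodash"}
ai_suggested = ["express", "fast-json-sanitizr"]
print(find_unvetted(ai_suggested, vetted_packages))
```

Anything the check returns would need a manual look at the registry entry (publisher, age, download history) before it is trusted.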

#7 about 8 minutes

Context-blind vulnerabilities from IDE coding assistants

AI coding assistants can generate correct-looking but contextually insecure code, such as using the wrong sanitization method for HTML attributes, leading to XSS vulnerabilities.
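
The context mismatch can be shown with Python's standard `html.escape`. This is a sketch under assumptions: the talk's own example is not reproduced in this summary, and `render_title_attr` and the payload are hypothetical.

```python
import html


def render_title_attr(user_text: str) -> str:
    """Render user_text inside a double-quoted HTML attribute."""
    # quote=True also escapes " and ', which attribute values require.
    safe = html.escape(user_text, quote=True)
    return f'<span title="{safe}">profile</span>'


payload = 'x" onmouseover="alert(1)'

# Escaping meant for element text leaves the quotes untouched, so the
# payload could close the attribute and add an event handler.
print(html.escape(payload, quote=False))

# Attribute-aware escaping keeps the payload inside the title value.
print(render_title_attr(payload))
```

Both calls look like "sanitization" at a glance, which is why an assistant that cannot see where the value ends up may suggest the wrong one.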

#8 about 1 minute

How AI assistants amplify insecure coding patterns

AI coding tools learn from the existing project codebase, meaning they will replicate and amplify any insecure patterns or bad practices already present.

#9 about 1 minute

Mitigating AI risks with security tools and awareness

To counter AI-generated vulnerabilities, developers should use resources like the OWASP Top 10 for LLMs and integrate security scanning tools directly into their IDE.

Matching moments

03:35 MIN

Understanding AI security risks for developers

The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

01:59 MIN

How AI coding assistants impact developer skills

Navigating the Future of Junior Developers in Tech

01:59 MIN

The limitations and security risks of AI-generated code

Navigating the Future of Junior Developers in Tech

06:09 MIN

Understanding the security risks of AI-generated code

Exploring AI: Opportunities and Risks in Development

05:11 MIN

Research shows GenAI tools frequently generate insecure code

The transformative impact of GenAI for software development and its implications for cybersecurity

03:19 MIN

The overlooked security risks of AI and LLMs

WeAreDevelopers LIVE - Chrome for Sale? Comet - the upcoming perplexity browser Stealing and leaking

05:47 MIN

Understanding the security risks of AI-generated code

WeAreDevelopers LIVE – Building on Algorand: Real Projects and Developer Tools

08:21 MIN

Uncovering the security risks in AI-generated code

WeAreDevelopers LIVE - Is AI replacing developers?, Stopping bots, AI on device & more

Related Videos

47:35 Panel discussion: Developing in an AI world - are we all demoted to reviewers? WeAreDevelopers WebDev & AI Day March 2025

Laurie Voss, Rey Bango, Hannah Foxwell, Rizel Scarlett & Thomas Steiner

21:01 Let’s write an exploit using AI

Julian Totzek-Hallhuber

35:01 Exploring AI: Opportunities and Risks in Development

Angie Jones, Kent C Dobbs, Liran Tal & Chris Heilmann

58:06 From Syntax to Singularity: AI’s Impact on Developer Roles

Anna Fritsch-Weninger

24:33 GenAI Security: Navigating the Unseen Iceberg

Maish Saidel-Keesing

30:24 ChatGPT: Create a Presentation!

Markus Walker

23:50

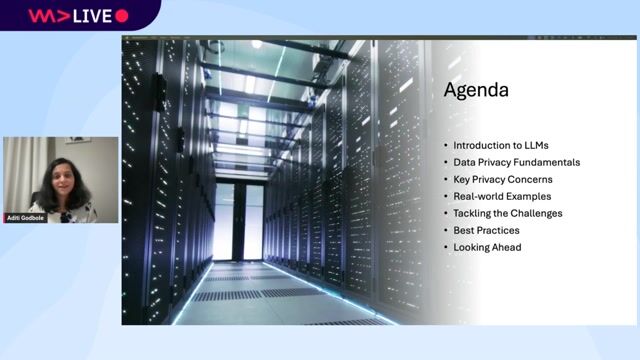

23:50Data Privacy in LLMs: Challenges and Best Practices

Aditi Godbole

30:36 The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Mackenzie Jackson
