Mackenzie Jackson

The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

#1about 4 minutes

Understanding AI security risks for developers

AI is now part of the software supply chain, and instruction-tuned LLMs like ChatGPT introduce risks when developers trust generated code they don't fully understand.

#2about 2 minutes

How LLM training data impacts code quality

LLMs are often trained on vast, unfiltered datasets like the Common Crawl, which includes public GitHub repositories and Stack Overflow posts of varying quality.

#3about 6 minutes

Understanding and demonstrating prompt injection attacks

Prompt injection uses malicious language to bypass an AI's instructions, as shown in a demo where a simple command hijacks a text summarizer app.

#4about 3 minutes

Attacking an AI email assistant with prompt injection

A malicious email containing a hidden prompt can compromise an AI email assistant, causing it to add malicious links or exfiltrate data without user interaction.

#5about 2 minutes

Strategies for mitigating prompt injection vulnerabilities

Defend against prompt injection by using third-party security agents to analyze I/O or implementing a multi-LLM architecture with privileged and quarantined models.

#6about 6 minutes

Exploiting AI with package hallucination squatting

AI models can invent non-existent software packages, which attackers then create as malicious decoys to trick developers into installing malware via hallucination squatting.

#7about 5 minutes

How attackers use AI to refactor exploits

Attackers use purpose-built malicious AI models to refactor old exploits, making them effective again, and to create highly convincing spearphishing campaigns.

#8about 2 minutes

Preventing sensitive data leakage into AI models

Employees often paste sensitive information like API keys into public AI models, creating a risk of data leakage and enabling attackers to extract secrets.

#9about 2 minutes

Final advice on adopting AI tools securely

Instead of banning AI tools, which creates shadow IT risks, focus on developer education, using the right tools for the job, and reinforcing security fundamentals.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

IGEL Technology GmbH

Bremen, Germany

Senior

Java

IT Security

Matching moments

01:18 MIN

Exploring AI vulnerabilities and deepfake detection claims

AI Space Factories, Hacking Self-Driving Cars & Detecting Deepfakes

09:15 MIN

Navigating the new landscape of AI and cybersecurity

From Monolith Tinkering to Modern Software Development

09:15 MIN

The complex relationship between AI and cybersecurity

Panel: How AI is changing the world of work

07:10 MIN

Managing the fear, accountability, and risks of AI

Collaborative Intelligence: The Human & AI Partnership

03:19 MIN

The overlooked security risks of AI and LLMs

WeAreDevelopers LIVE - Chrome for Sale? Comet - the upcoming perplexity browser Stealing and leaking

02:17 MIN

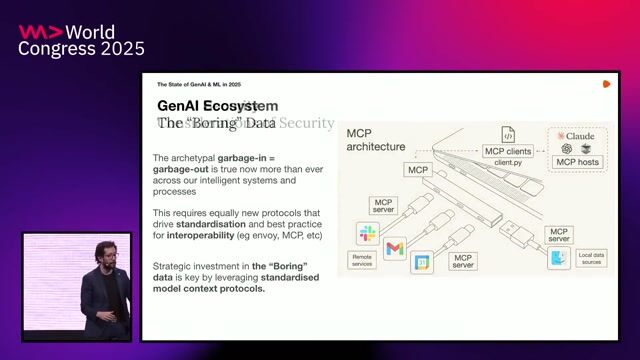

New security vulnerabilities and monitoring for AI systems

The State of GenAI & Machine Learning in 2025

01:51 MIN

Final advice on security and responsible AI usage

WeAreDevelopers LIVE - Chrome for Sale? Comet - the upcoming perplexity browser Stealing and leaking

06:10 MIN

Mitigating the security risks of AI-generated code

Developer Productivity Using AI Tools and Services - Ryan J Salva

Featured Partners

Related Videos

24:23

24:23A hundred ways to wreck your AI - the (in)security of machine learning systems

Balázs Kiss

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

27:02

27:02Hacking AI - how attackers impose their will on AI

Mirko Ross

35:37

35:37Can Machines Dream of Secure Code? Emerging AI Security Risks in LLM-driven Developer Tools

Liran Tal

23:24

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

27:26

27:26Staying Safe in the AI Future

Cassie Kozyrkov

24:33

24:33GenAI Security: Navigating the Unseen Iceberg

Maish Saidel-Keesing

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Abnormal AI

Intermediate

API

Spark

Kafka

Python

Escape Velocity Entertainment Inc

Remote

C++

Python

PyTorch

TensorFlow

+1