Ashish Sharma

Building Blocks of RAG: From Understanding to Implementation

#1about 2 minutes

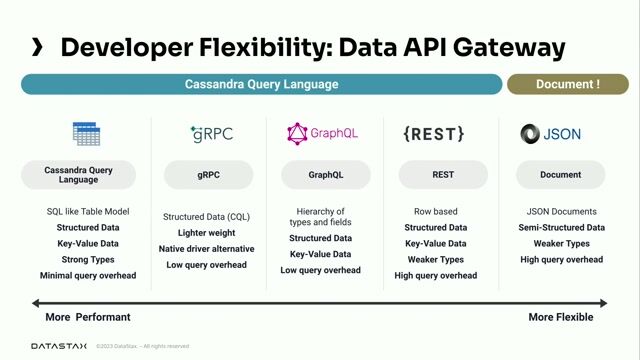

Tech stack for building a RAG application

The core technologies used for the RAG implementation include Python, Groq for LLM inference, LangChain as a framework, FAISS for the vector database, and Streamlit for the UI.

#2about 1 minute

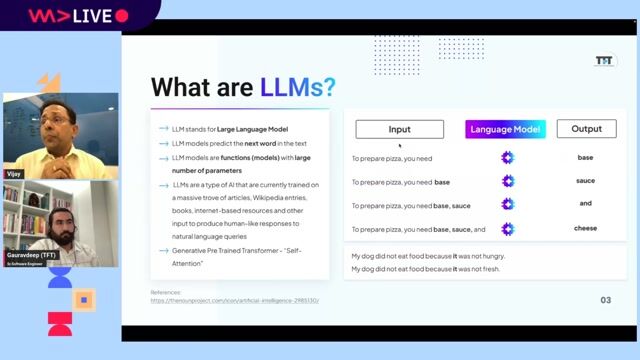

Understanding the fundamentals of large language models

Large language models are deep learning models pre-trained on vast data, using a transformer architecture with an encoder and decoder to understand and generate human-like text.

#3about 3 minutes

The rapid evolution and adoption of LLMs

The journey of LLMs has accelerated from the 2022 ChatGPT launch to widespread experimentation in 2023 and enterprise production adoption in 2024.

#4about 2 minutes

Key challenges of LLMs like hallucination

Standard LLMs face significant challenges including hallucination, unverifiable sources, and knowledge cutoffs that limit their reliability for enterprise use.

#5about 1 minute

How RAG solves LLM limitations

Retrieval-Augmented Generation addresses LLM weaknesses by retrieving relevant, up-to-date information from external data sources to provide accurate and verifiable responses.

#6about 4 minutes

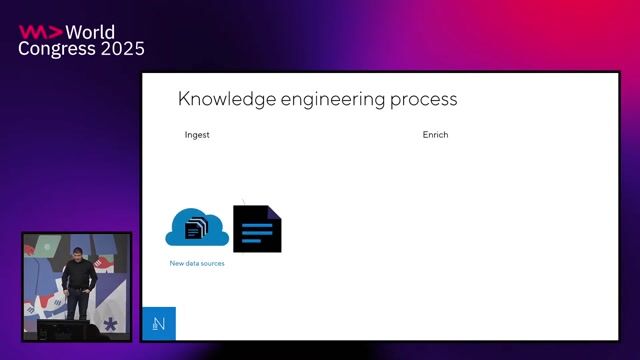

The data ingestion and processing pipeline

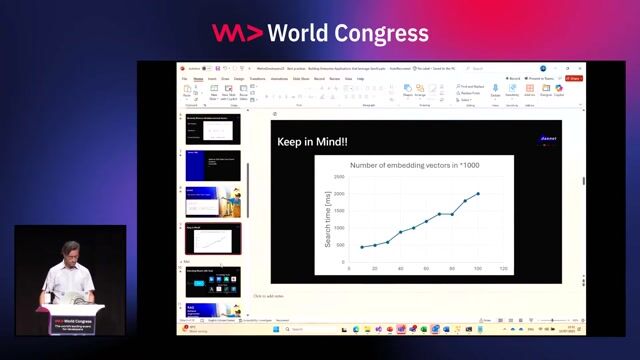

The first stage of RAG involves loading documents, splitting them into manageable chunks, converting those chunks into numerical embeddings, and storing them in a vector database.

#7about 2 minutes

The retrieval and generation process

The second stage of RAG handles user queries by retrieving relevant chunks from the vector store, constructing a detailed prompt with that context, and sending it to the LLM for generation.

#8about 4 minutes

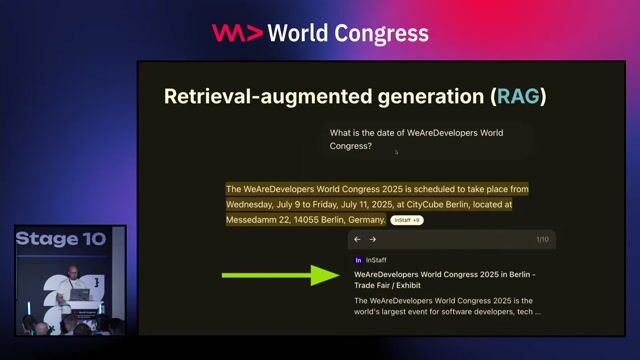

Visualizing the end-to-end RAG architecture

A complete RAG system processes a user's query by creating an embedding, finding similar document chunks in the vector DB, and feeding both the query and context to an LLM to generate a grounded response.

#9about 5 minutes

Demo of a RAG-powered document chatbot

A live demonstration shows a Streamlit application that allows users to upload a PDF and ask questions, receiving answers grounded in the document's content.

#10about 2 minutes

Summary and deploying RAG solutions

A recap of the RAG process is provided, along with considerations for deploying these solutions in enterprise environments using managed cloud services or open-source models.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

07:24 MIN

Introducing retrieval-augmented generation (RAG)

Martin O'Hanlon - Make LLMs make sense with GraphRAG

06:05 MIN

Understanding Retrieval-Augmented Generation (RAG)

Graphs and RAGs Everywhere... But What Are They? - Andreas Kollegger - Neo4j

15:49 MIN

Understanding retrieval-augmented generation (RAG)

Exploring LLMs across clouds

01:32 MIN

How RAG provides LLMs with up-to-date context

How to scrape modern websites to feed AI agents

12:50 MIN

Using RAG to extend LLM knowledge without retraining

Best practices: Building Enterprise Applications that leverage GenAI

08:26 MIN

Powering real-time AI with retrieval augmented generation

Scrape, Train, Predict: The Lifecycle of Data for AI Applications

15:24 MIN

Implementing the Retrieval-Augmented Generation (RAG) pattern

Develop AI-powered Applications with OpenAI Embeddings and Azure Search

00:57 MIN

Why large language models need retrieval augmented generation

Build RAG from Scratch

Featured Partners

Related Videos

28:04

28:04Build RAG from Scratch

Phil Nash

21:17

21:17Carl Lapierre - Exploring Advanced Patterns in Retrieval-Augmented Generation

Carl Lapierre

29:11

29:11Large Language Models ❤️ Knowledge Graphs

Michael Hunger

58:00

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey

24:42

24:42Martin O'Hanlon - Make LLMs make sense with GraphRAG

Martin O'Hanlon

28:20

28:20Building Real-Time AI/ML Agents with Distributed Data using Apache Cassandra and Astra DB

Dieter Flick

26:54

26:54Make it simple, using generative AI to accelerate learning

Duan Lightfoot

28:17

28:17RAG like a hero with Docling

Alex Soto & Markus Eisele

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Agentic AI Architect - Python, LLMs & NLP

FRG Technology Consulting

Intermediate

Azure

Python

Machine Learning

R&D AI Software Engineer / End-to-End Machine Learning Engineer / RAG and LLM

Pathway

Paris, France

Remote

€72-75K

GIT

Python

Unit Testing

+2

Machine Learning Engineer - Large Language Models (LLM) - Startup

Startup

Charing Cross, United Kingdom

PyTorch

Machine Learning

Senior Data Scientist: Generative AI & MLOps (Remote)

MBR Partners

Municipality of Madrid, Spain

Remote

Python

Machine Learning

Conversational AI & Machine Learning Engineer

Deloitte

Leipzig, Germany

Azure

DevOps

Python

Docker

PyTorch

+6