Ekaterina Sirazitdinova

Multimodal Generative AI Demystified

#1 about 2 minutes

The shift from specialized AI to multimodal foundation models

Traditional specialized AI models such as CNNs do not scale toward general intelligence, which has led to the rise of multimodal foundation models trained on internet-scale data.

#2 about 3 minutes

Demonstrating the power of multimodal models like GPT-4

GPT-4 achieves high accuracy on zero-shot tasks, and adding vision brings substantial performance gains and even lets the model reason about humor in images.

#3 about 7 minutes

How multimodal generative AI is transforming industries

Generative AI offers practical applications across education, healthcare, engineering, and entertainment, from personalized learning to interactive virtual characters.

#4 about 2 minutes

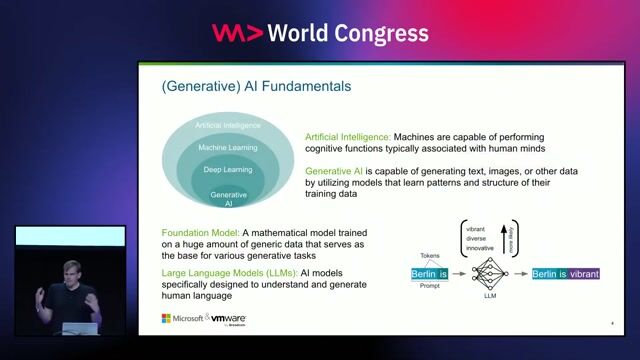

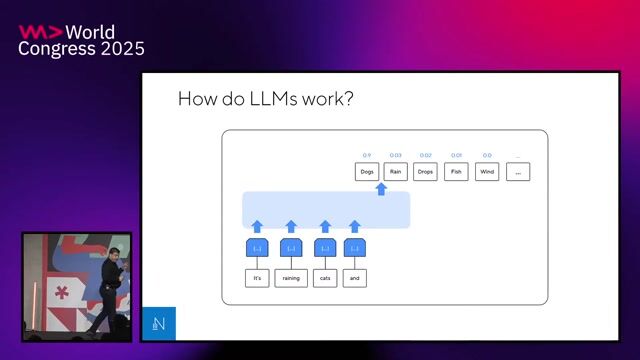

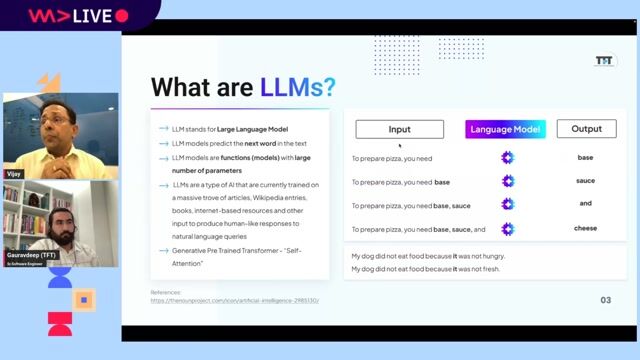

Understanding the core concepts of generative AI

Generative AI creates new content by learning patterns from existing data using a foundation model, which is a large transformer trained to predict the next element in a sequence.

#5 about 7 minutes

A technical breakdown of the transformer architecture

The transformer architecture processes text by converting it into numerical embeddings and uses self-attention layers in its encoder-decoder structure to understand context.
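
As a rough illustration of the self-attention step mentioned above, here is a minimal sketch of scaled dot-product attention in plain Python. The three two-dimensional embeddings are made-up numbers, and the queries, keys, and values are all set to the raw embeddings for brevity; a real transformer layer would first apply learned projection matrices and use many attention heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of the value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights


# Hypothetical embeddings for three tokens (illustrative values only).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Assumption: Q = K = V = embeddings, skipping the learned projections.
outputs, weights = self_attention(embeddings, embeddings, embeddings)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to the others, which is the mechanism that lets every position take its context into account.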

#6 about 3 minutes

An introduction to diffusion models for image generation

Modern image generation relies on diffusion models, which create high-quality images by learning to progressively remove noise from a random starting point.
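
The noising and denoising idea can be sketched on a three-number "image". The example below assumes a DDPM-style formulation with a linear noise schedule, which the summary does not specify. The names `make_alpha_bars`, `add_noise`, and `estimate_x0` are hypothetical helpers, and the true noise stands in for what a trained network would predict.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, noise):
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]


def estimate_x0(xt, alpha_bar, predicted_noise):
    """Invert the forward process, given a prediction of the noise that was added."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * n) / a for x, n in zip(xt, predicted_noise)]


random.seed(0)
alpha_bars = make_alpha_bars(1000)

x0 = [0.5, -0.25, 1.0]                      # toy clean signal
noise = [random.gauss(0.0, 1.0) for _ in x0]
t = 500                                     # an intermediate timestep

xt = add_noise(x0, alpha_bars[t], noise)

# Stand-in for the trained denoiser: we pass the true noise, so recovery is exact.
recovered = estimate_x0(xt, alpha_bars[t], noise)
print([round(v, 6) for v in recovered])
```

In practice the noise prediction is imperfect, so samplers remove noise over many small steps rather than jumping to the clean image at once.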

#7 about 3 minutes

Fine-tuning diffusion models for custom subjects and styles

Diffusion models can be fine-tuned on a small set of images to generate new content featuring a specific person, object, or artistic style.

#8 about 5 minutes

The core components of text-to-image generation pipelines

Text-to-image models use a U-Net architecture to predict noise and a variational autoencoder to work efficiently in a compressed latent space.
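
To get a feel for why working in a latent space saves compute, the short calculation below compares the number of values in an image with the number in its latent. The shapes are assumptions borrowed from commonly cited latent diffusion settings (a 512x512 RGB image, 8x spatial downsampling, 4 latent channels), not figures taken from the talk.

```python
def num_values(height, width, channels):
    """Total number of scalar values in a height x width x channels tensor."""
    return height * width * channels


image_shape = (512, 512, 3)   # assumed RGB image size
downsample = 8                # assumed spatial reduction of the VAE encoder
latent_channels = 4           # assumed number of latent channels

latent_shape = (
    image_shape[0] // downsample,
    image_shape[1] // downsample,
    latent_channels,
)

compression = num_values(*image_shape) / num_values(*latent_shape)
print(latent_shape, compression)  # (64, 64, 4) 48.0
```

Under these assumptions the U-Net denoises a tensor with 48 times fewer values than the pixel image, and the VAE decoder maps the final latent back to pixels.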

#9 about 3 minutes

Using CLIP to guide image generation with text prompts

Models like CLIP align text and image data into a shared embedding space, allowing text prompts to guide the diffusion process for controlled image generation.
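
A shared embedding space means that a caption and an image can be compared directly, typically with cosine similarity. The sketch below uses hand-written three-dimensional vectors as hypothetical stand-ins for encoder outputs. Real CLIP embeddings have hundreds of dimensions and come from trained text and image encoders, and `best_caption` is an illustrative helper rather than part of any library.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, text_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(
        text_embeddings,
        key=lambda caption: cosine_similarity(image_embedding, text_embeddings[caption]),
    )


# Hypothetical embeddings (made-up numbers, not real encoder outputs).
text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.2],
    "a photo of a car": [0.1, 0.95, 0.1],
}
image_embedding = [0.8, 0.2, 0.3]

for caption, vector in text_embeddings.items():
    print(caption, round(cosine_similarity(image_embedding, vector), 3))

print(best_caption(image_embedding, text_embeddings))
```

In a text-to-image pipeline the same kind of text embedding is passed to the denoising network as conditioning, so that each denoising step is steered toward images that match the prompt.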

#10 about 3 minutes

Exploring advanced use cases and Nvidia's eDiff-I model

Image generation enables applications like synthetic asset creation and super-resolution, with models like Nvidia's eDiff-I focusing on high-quality, bias-free results.

Matching moments

04:05 MIN

Understanding the fundamental shift to generative AI

Your Next AI Needs 10,000 GPUs. Now What?

05:35 MIN

Understanding how generative AI models create content

The shadows that follow the AI generative models

05:32 MIN

GenAI applications and emerging professional roles

Enter the Brave New World of GenAI with Vector Search

03:10 MIN

An overview of generative AI and its capabilities

Make it simple, using generative AI to accelerate learning

02:00 MIN

Understanding the fundamentals of generative AI for developers

Java Meets AI: Empowering Spring Developers to Build Intelligent Apps

07:44 MIN

Defining key GenAI concepts like GPT and LLMs

Enter the Brave New World of GenAI with Vector Search

04:06 MIN

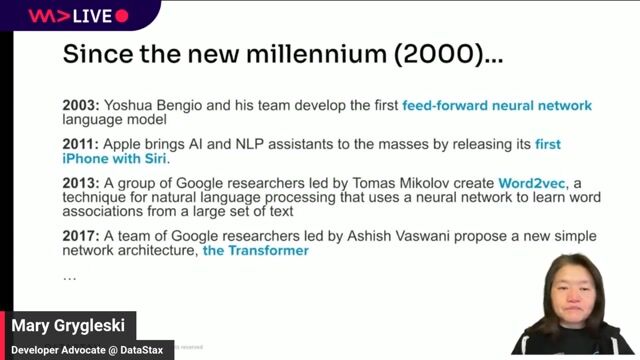

The recent evolution of generative AI models

Enter the Brave New World of GenAI with Vector Search

03:38 MIN

Tracing the evolution from LLMs to agentic AI

Exploring LLMs across clouds
