Lucia Cerchie

Let's Get Started With Apache Kafka® for Python Developers

#1 (about 3 minutes)

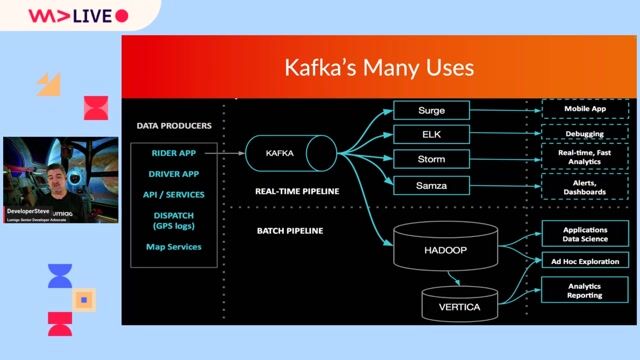

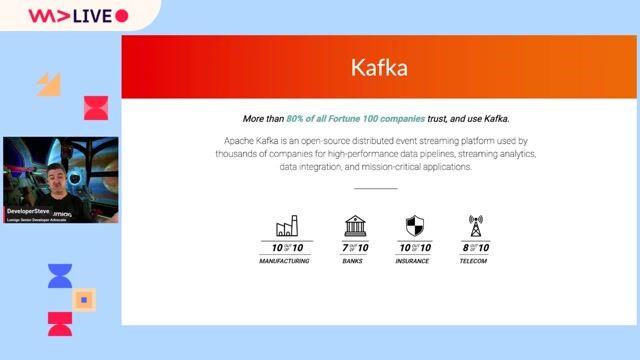

Understanding the purpose and core use cases of Kafka

Apache Kafka is an event streaming platform designed for high-throughput, real-time data feeds, with use cases such as event-driven applications and clickstream analysis.

#2 (about 2 minutes)

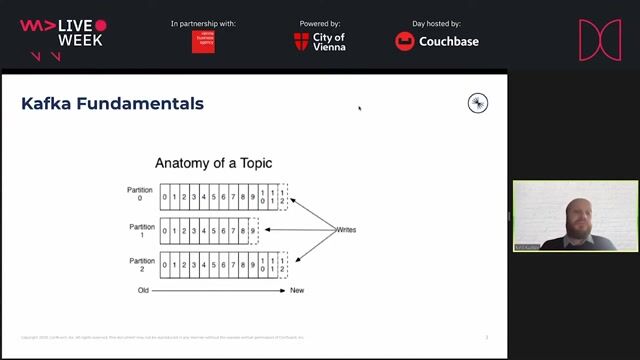

Exploring Kafka's core concepts of events, topics, and partitions

Events are organized into logical groupings called topics. Each topic is an immutable, append-only log that is split into partitions so it can scale.
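To make the log-and-partitions idea concrete, here is a toy in-memory model. It is only an illustration, not how Kafka brokers actually store partitions: a topic holds a fixed number of partitions, each partition is an append-only list, and an event's position in its partition is its offset. There is deliberately no update or delete method, and reading never removes anything.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Partition:
    """An append-only sequence of events; an event's index is its offset."""
    events: List[bytes] = field(default_factory=list)

    def append(self, event: bytes) -> int:
        # New events only ever go on the end; existing ones are never modified.
        self.events.append(event)
        return len(self.events) - 1  # offset of the newly written event

    def read(self, offset: int, max_events: int = 10) -> List[Tuple[int, bytes]]:
        # Reading does not consume events, so any reader can re-read from any offset.
        end = min(offset + max_events, len(self.events))
        return [(o, self.events[o]) for o in range(offset, end)]


@dataclass
class Topic:
    """A named log split into a fixed number of independent partitions."""
    name: str
    num_partitions: int
    partitions: List[Partition] = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [Partition() for _ in range(self.num_partitions)]


if __name__ == "__main__":
    clicks = Topic("clicks", num_partitions=3)
    clicks.partitions[0].append(b'{"page": "/home"}')
    clicks.partitions[0].append(b'{"page": "/cart"}')
    print(clicks.partitions[0].read(0))
    # [(0, b'{"page": "/home"}'), (1, b'{"page": "/cart"}')]
```

Offsets are per partition, which is why ordering is only guaranteed within a single partition, not across a whole topic.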

#3 (about 2 minutes)

Understanding the roles of producers and consumers

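Producers write events to a topic, and each event's key determines which partition it lands in. Consumers read from topics and can be organized into consumer groups, whose members divide a topic's partitions among themselves to share the workload.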
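The two mechanisms above can be sketched in a few lines. This is a simplified model under stated assumptions: `choose_partition` hashes keys with CRC32 purely for illustration (the Java client's default partitioner uses murmur2, and librdkafka-based clients such as confluent-kafka expose a configurable `partitioner` setting, so real placements will differ), and `assign_partitions` shows round-robin, which is only one of several assignment strategies Kafka supports. Both function names are hypothetical.

```python
import zlib
from typing import Dict, List


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition so every event with the same key lands together.

    CRC32 is an illustrative stand-in for a real client's partitioner.
    """
    return zlib.crc32(key) % num_partitions


def assign_partitions(consumers: List[str], num_partitions: int) -> Dict[str, List[int]]:
    """Round-robin a topic's partitions across the members of one consumer group.

    Each partition goes to exactly one member, so members split the work;
    members beyond the partition count receive no partitions at all.
    """
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


if __name__ == "__main__":
    for user in [b"alice", b"bob", b"alice"]:
        # Both b"alice" events map to the same partition.
        print(user, choose_partition(user, 3))
    print(assign_partitions(["c1", "c2"], 3))  # {'c1': [0, 2], 'c2': [1]}
```

The last point in `assign_partitions` is why a topic's partition count caps how many consumers in one group can work in parallel.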

#4 (about 4 minutes)

Building a real-time Kafka producer and consumer in Python

A code walkthrough demonstrates how to use the confluent-kafka library to create a producer that sends click events and a consumer that reads them in real time.
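The following is a minimal sketch in the spirit of that walkthrough, not the code shown in the talk. It assumes a broker reachable at `localhost:9092` and a topic named `clicks`; the topic name, the `click-readers` group id, the event fields, and the helper names are all hypothetical. The `confluent_kafka` imports sit inside the functions so the event helper can be imported without the library installed; a real script would more usually import at the top.

```python
from __future__ import annotations

import json
import sys
import time

# Assumptions for this sketch: a local broker and a topic named "clicks".
TOPIC = "clicks"
CONF = {"bootstrap.servers": "localhost:9092"}


def make_click_event(user_id: str, page: str) -> tuple[str, bytes]:
    """Build a (key, value) pair for a hypothetical click event.

    Keying by user_id sends all of one user's clicks to the same partition,
    so a consumer sees them in the order they were produced.
    """
    event = {"user_id": user_id, "page": page, "ts": time.time()}
    return user_id, json.dumps(event).encode("utf-8")


def delivery_report(err, msg) -> None:
    """Called by the producer once the broker acknowledges (or rejects) a message."""
    if err is not None:
        print(f"Delivery failed: {err}")
    else:
        print(f"Delivered to {msg.topic()} [{msg.partition()}] at offset {msg.offset()}")


def produce_clicks(n: int = 5) -> None:
    from confluent_kafka import Producer  # pip install confluent-kafka

    producer = Producer(CONF)
    for i in range(n):
        key, value = make_click_event(f"user-{i % 2}", "/home")
        producer.produce(TOPIC, key=key, value=value, on_delivery=delivery_report)
        producer.poll(0)  # serve pending delivery callbacks without blocking
    producer.flush()  # block until every queued message is delivered or fails


def consume_clicks() -> None:
    from confluent_kafka import Consumer

    consumer = Consumer({
        **CONF,
        "group.id": "click-readers",      # hypothetical consumer group name
        "auto.offset.reset": "earliest",  # start at the beginning if the group has no committed offset
    })
    consumer.subscribe([TOPIC])
    try:
        while True:
            msg = consumer.poll(1.0)  # wait up to 1 second for the next event
            if msg is None:
                continue
            if msg.error():
                print(f"Consumer error: {msg.error()}")
                continue
            event = json.loads(msg.value())
            print(f"{msg.key().decode()} clicked {event['page']} (partition {msg.partition()})")
    except KeyboardInterrupt:
        pass
    finally:
        consumer.close()  # leave the group promptly so its partitions can be reassigned


if __name__ == "__main__":
    if sys.argv[1:] == ["consume"]:
        consume_clicks()
    else:
        produce_clicks()
```

Run the script once with `consume` as its argument to start a reader, then run it again with no argument to send a handful of events; the reader prints them as they arrive.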

#5 (about 4 minutes)

Navigating the Kafka ecosystem and the power of community

The broad Kafka ecosystem includes tools such as kcat and resources such as Kafka Improvement Proposals (KIPs), and leaning on developer communities is key to overcoming learning challenges.

#6 (about 1 minute)

Recapping Kafka's capabilities for real-time data feeds

A summary reinforces how Kafka's distributed nature and use of partitions enable a high-throughput, low-latency solution for real-time data.

#7 (about 23 minutes)

Answering questions on Kafka use cases, careers, and learning

The Q&A covers real-world applications such as fraud detection and decoupling microservices, the difference between Apache Kafka and Confluent's Kafka, and learning resources.

Matching moments

06:10 MIN

Core concepts of Apache Kafka for event streaming

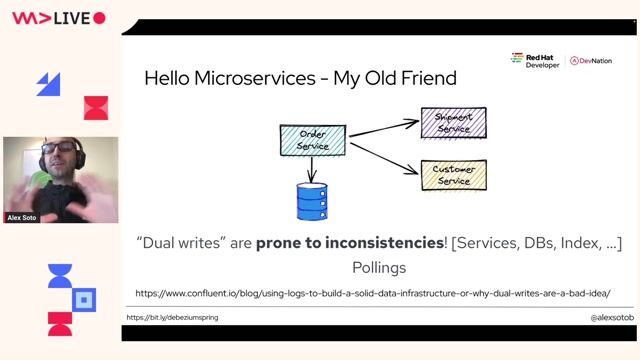

Practical Change Data Streaming Use Cases With Debezium And Quarkus

03:46 MIN

A brief overview of Apache Kafka architecture

How to Benchmark Your Apache Kafka

02:40 MIN

Core concepts of Kafka and Kafka Streams

Kafka Streams Microservices

08:50 MIN

Live demo setup for debugging Kafka

Tips, Techniques, and Common Pitfalls Debugging Kafka

01:24 MIN

Understanding Kafka's role in modern architectures

Tips, Techniques, and Common Pitfalls Debugging Kafka

02:46 MIN

Getting started with Kafka in Python

Tips, Techniques, and Common Pitfalls Debugging Kafka

02:03 MIN

The growing role of Python in real-time data processing

Python-Based Data Streaming Pipelines Within Minutes

01:47 MIN

Real-world Kafka use cases at scale

Tips, Techniques, and Common Pitfalls Debugging Kafka

Featured Partners

Related Videos

54:29

54:29Tips, Techniques, and Common Pitfalls Debugging Kafka

DeveloperSteve

35:27

35:27How to Benchmark Your Apache Kafka

Kirill Kulikov

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

52:15

52:15Practical Change Data Streaming Use Cases With Debezium And Quarkus

Alex Soto

46:43

46:43Convert batch code into streaming with Python

Bobur Umurzokov

45:48

45:48Kafka Streams Microservices

Denis Washington & Olli Salonen

24:53

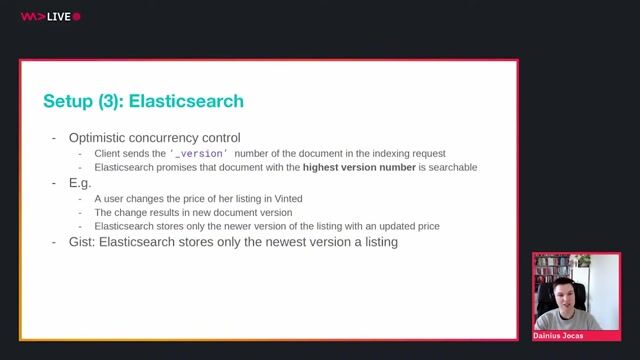

24:53Don't Change the Partition Count for Kafka Topics!

Dainius Jocas

30:51

30:51Why and when should we consider Stream Processing frameworks in our solutions

Soroosh Khodami
