Nura Kawa

Machine Learning: Promising, but Perilous

#1about 5 minutes

The dual nature of machine learning's power

Machine learning's increasing power and accessibility, exemplified by complex tasks like panoptic segmentation, also introduces significant security vulnerabilities.

#2about 3 minutes

Accelerating development with transfer learning

Transfer learning allows developers to repurpose large pre-trained teacher models for specific tasks with minimal data and compute by fine-tuning a new student model.

#3about 2 minutes

How transfer learning's benefits create security risks

The core benefits of transfer learning, such as knowledge transfer and minimal training, directly create attack vectors for adversaries.

#4about 5 minutes

Exploring evasion and poisoning attacks in ML

Adversarial examples can fool models with subtle input changes (evasion), while poisoned data can insert hidden backdoors, with both risks amplified by transfer learning.

#5about 3 minutes

Integrating security with a pre-development risk assessment

Before writing code, perform a thorough risk assessment by defining security requirements, evaluating resource availability, and conducting threat modeling for your specific use case.

#6about 3 minutes

Selecting robust teacher models for secure transfer learning

Mitigate risks by choosing transparent and trustworthy teacher models and using robust models hardened through techniques like adversarial training.

#7about 1 minute

Fortifying student models to prevent transferred attacks

Strengthen your student model by fine-tuning all layers to diverge from the teacher model, using backdoor detection, and performing continuous stress testing.

#8about 2 minutes

Key resources for developing secure ML systems

Practical resources like the Adversarial Robustness Toolbox for developers and security principles from the National Cybersecurity Center can help you build more secure ML systems.

Related jobs

Jobs that call for the skills explored in this talk.

Featured Partners

Related Videos

24:23

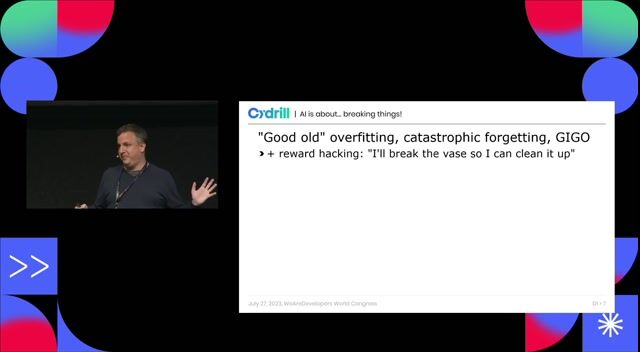

24:23A hundred ways to wreck your AI - the (in)security of machine learning systems

Balázs Kiss

27:02

27:02Hacking AI - how attackers impose their will on AI

Mirko Ross

48:01

48:01What non-automotive Machine Learning projects can learn from automotive Machine Learning projects

Jan Zawadzki

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

35:16

35:16How AI Models Get Smarter

Ankit Patel

27:26

27:26Staying Safe in the AI Future

Cassie Kozyrkov

23:24

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

24:33

24:33GenAI Security: Navigating the Unseen Iceberg

Maish Saidel-Keesing

From learning to earning

Jobs that call for the skills explored in this talk.

Security-by-Design for Trustworthy Machine Learning Pipelines

Association Bernard Gregory

Machine Learning

Continuous Delivery

Machine Learning (IA/ML) + DevOps (MLOps)

Alten

Municipality of Madrid, Spain

Remote

Java

DevOps

Python

Kubernetes

+3

Machine Learning (ML) Engineer Maitrisant - Python / Github Action

ASFOTEC

Canton de Lille-6, France

Intermediate

NoSQL

Kafka

DevOps

Python

Pandas

+11

Machine Learning Engineer

Speechmatics

Charing Cross, United Kingdom

Remote

€39K

Machine Learning

Speech Recognition

Machine Learning Engineer

Machine Learning Engineerwrk Digital Ltd

Leeds, United Kingdom

€77K

NumPy

SciPy

DevOps

Pandas

+4