Balázs Kiss

A hundred ways to wreck your AI - the (in)security of machine learning systems

#1 about 4 minutes

The security risks of AI-generated code

AI assistants can generate code quickly, but the output may contain vulnerabilities or rely on outdated practices. AI systems are themselves code, so they can be exploited like any other software.

#2 about 5 minutes

Fundamental AI vulnerabilities and malicious misuse

AI systems are prone to classic failures like overfitting, and attackers can misuse or manipulate them through deepfakes, chatbot poisoning, and adversarial patterns.

#3 about 1 minute

Exploring threat modeling frameworks for AI security

Organizations such as OWASP, NIST, and MITRE publish threat models and standards that help developers understand and mitigate AI security risks.

#4 about 6 minutes

Deconstructing AI attacks from evasion to model stealing

Attack trees categorize novel threats like evasion with adversarial samples, data poisoning to create backdoors, and model stealing to replicate proprietary systems.
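
To make model stealing concrete, here is a minimal sketch of the simplest case: recovering the parameters of a linear model purely by querying it. The secret weights, the `make_secret_model` helper, and the `steal_linear_model` function are hypothetical names invented for this illustration; they are not taken from the talk. Real attacks on non-linear models typically train a surrogate on many query/response pairs instead of solving for the parameters directly.

```python
def make_secret_model():
    """Return a black-box query function for a hypothetical proprietary model."""
    weights = [0.5, -1.25, 2.0]  # assumed secret parameters, hidden from the attacker
    bias = 0.75

    def query(x):
        # The attacker only sees this output, never weights or bias.
        return sum(w * xi for w, xi in zip(weights, x)) + bias

    return query


def steal_linear_model(query, n_features):
    """Recover weights and bias of a linear model with n_features + 1 queries."""
    zero = [0.0] * n_features
    bias = query(zero)  # an all-zero input reveals the bias
    weights = []
    for i in range(n_features):
        probe = zero[:]
        probe[i] = 1.0  # a unit vector isolates one weight
        weights.append(query(probe) - bias)
    return weights, bias


black_box = make_secret_model()
stolen_weights, stolen_bias = steal_linear_model(black_box, n_features=3)


def stolen_model(x):
    """Attacker's local replica built only from query results."""
    return sum(w * xi for w, xi in zip(stolen_weights, x)) + stolen_bias


sample = [1.0, 2.0, 3.0]
print(black_box(sample), stolen_model(sample))
```

Four queries are enough here because the model has three weights and one bias. This is why rate limiting and query monitoring are common defenses against extraction.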

#5 about 2 minutes

Demonstrating an adversarial attack on digit recognition

A live demonstration shows how pre-generated adversarial samples can trick a digit recognition model into misclassifying numbers as zero.
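
The idea behind such adversarial samples can be sketched with a toy stand-in. The code below is not the model or the samples from the demonstration: it assumes a hypothetical two-class linear classifier over four "pixels", with made-up weights, where class 0 plays the role of the digit zero. It applies a fast-gradient-sign-style step, moving every input value by at most `EPSILON` in the direction that lowers the score.

```python
WEIGHTS = [0.9, -0.6, 0.4, -0.2]  # hypothetical trained weights
BIAS = -0.1
EPSILON = 0.25                    # maximum change allowed per input value


def sign(value):
    return (value > 0) - (value < 0)


def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    """Return 1 if the score is positive, else 0."""
    return 1 if score(weights, bias, x) > 0 else 0


def perturb_toward_zero(weights, x, epsilon):
    """Gradient-sign step that pushes the prediction toward class 0.

    For a linear model the gradient of the score with respect to the
    input is the weight vector, so stepping against sign(w) lowers the
    score as much as possible under a per-value budget of epsilon.
    """
    stepped = [xi - epsilon * sign(w) for w, xi in zip(weights, x)]
    return [min(1.0, max(0.0, v)) for v in stepped]  # keep valid pixel range


original = [0.6, 0.3, 0.5, 0.4]
adversarial = perturb_toward_zero(WEIGHTS, original, EPSILON)

print("original prediction:   ", predict(WEIGHTS, BIAS, original))
print("adversarial prediction:", predict(WEIGHTS, BIAS, adversarial))
```

No input value changes by more than `EPSILON`, yet the predicted class flips. Against a real image classifier the same principle applies, with the gradient obtained from the framework's automatic differentiation rather than read off the weights.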

#6 about 5 minutes

Analyzing supply chain and framework security risks

Security risks extend beyond the model to the supply chain, including backdoors in pre-trained models, insecure serialization formats like Pickle, and vulnerabilities in ML frameworks.
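
The Pickle risk is easy to reproduce with the standard library alone. In the sketch below, the `EvalOnLoad` class and the `restricted_loads` helper are hypothetical names chosen for illustration, and the payload is deliberately harmless arithmetic. The first half shows that loading a pickle can call an arbitrary function; the second half follows the "restricting globals" pattern from the Python documentation, refusing every global lookup so that only plain data can be loaded.

```python
import io
import pickle


class EvalOnLoad:
    """Stand-in for an attacker-crafted object hidden in a model file."""

    def __reduce__(self):
        # pickle stores "call eval('6 * 7')" and runs it during loading.
        # A real attacker would put a shell command here instead.
        return (eval, ("6 * 7",))


malicious_bytes = pickle.dumps(EvalOnLoad())
result = pickle.loads(malicious_bytes)  # code runs here, at load time
print("value produced by code that ran during loading:", result)


class NoGlobalsUnpickler(pickle.Unpickler):
    """Unpickler that refuses to import any function or class."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data):
    """Load a pickle that may contain only plain built-in data."""
    return NoGlobalsUnpickler(io.BytesIO(data)).load()


# Plain data still loads; the malicious payload is rejected.
print(restricted_loads(pickle.dumps([1.0, 2.0, 3.0])))
try:
    restricted_loads(malicious_bytes)
except pickle.UnpicklingError as error:
    print("rejected:", error)
```

A restricted unpickler narrows the attack surface but is easy to misconfigure once real model classes must be allowed, which is one reason to prefer formats that store only data.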

#7 about 1 minute

Choosing secure alternatives to the Pickle model format

The talk recommends the HDF5 format as a safer, industry-standard alternative to Python's insecure Pickle format for serializing machine learning models.

Matching moments

02:17 MIN

New security vulnerabilities and monitoring for AI systems

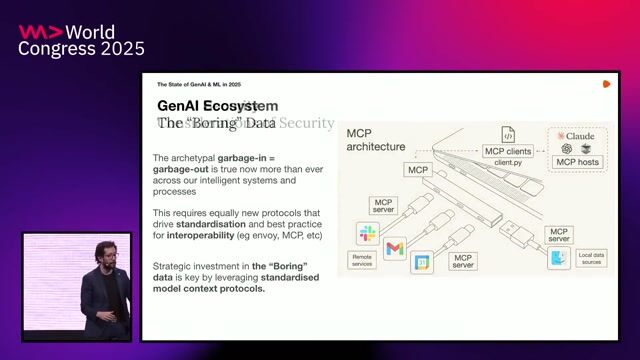

The State of GenAI & Machine Learning in 2025

09:15 MIN

Navigating the new landscape of AI and cybersecurity

From Monolith Tinkering to Modern Software Development

09:15 MIN

The complex relationship between AI and cybersecurity

Panel: How AI is changing the world of work

03:28 MIN

Understanding the fundamental security risks in AI models

Can Machines Dream of Secure Code? Emerging AI Security Risks in LLM-driven Developer Tools

04:53 MIN

The dual nature of machine learning's power

Machine Learning: Promising, but Perilous

01:18 MIN

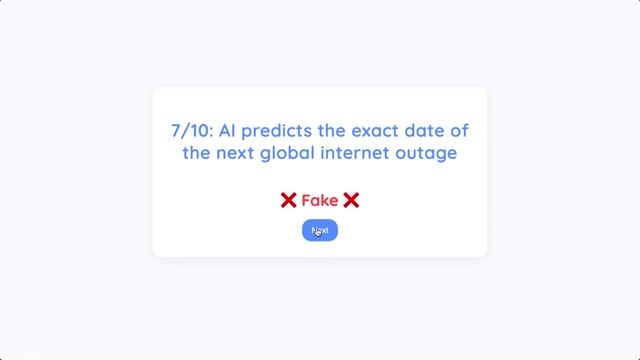

Exploring AI vulnerabilities and deepfake detection claims

AI Space Factories, Hacking Self-Driving Cars & Detecting Deepfakes

04:09 MIN

Understanding the current state of AI security challenges

Delay the AI Overlords: How OAuth and OpenFGA Can Keep Your AI Agents from Going Rogue

03:35 MIN

Understanding AI security risks for developers

The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Related Videos

27:19

27:19Machine Learning: Promising, but Perilous

Nura Kawa

27:02

27:02Hacking AI - how attackers impose their will on AI

Mirko Ross

31:19

31:19Skynet wants your Passwords! The Role of AI in Automating Social Engineering

Wolfgang Ettlinger & Alexander Hurbean

35:16

35:16How AI Models Get Smarter

Ankit Patel

27:26

27:26Staying Safe in the AI Future

Cassie Kozyrkov

30:36

30:36The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Mackenzie Jackson

30:02

30:02The AI Elections: How Technology Could Shape Public Sentiment

Martin Förtsch & Thomas Endres

27:32

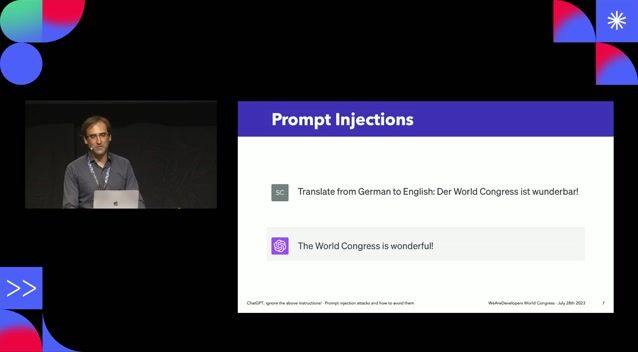

27:32ChatGPT, ignore the above instructions! Prompt injection attacks and how to avoid them.

Sebastian Schrittwieser
