Daniel Savenkov

Beyond Autocomplete: Local AI Code Completion Demystified

#1 about 6 minutes

The case for local AI code completion

While cloud-based AI offers powerful models, a local approach provides better security, lower latency, and no subscription cost by using smaller, specialized models.

#2 about 4 minutes

Measuring user experience with online A/B testing

Online evaluation uses A/B testing to measure positive signals like code generation and negative signals like user annoyance to validate feature improvements.
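As a rough illustration of how such an experiment might be read out, the sketch below compares one positive signal between a control group and a treatment group using a two-proportion z-test. The choice of metric (share of shown suggestions that were accepted), the function name, and the counts are assumptions for illustration; the talk summary does not say which statistics are actually used.

```python
import math


def two_proportion_z_test(accepted_a, shown_a, accepted_b, shown_b):
    """Return (z, two-sided p-value) for the difference in acceptance rates.

    Group A is the control, group B the treatment. Hypothetical helper,
    not taken from the talk.
    """
    p_a = accepted_a / shown_a
    p_b = accepted_b / shown_b
    # Pooled rate under the null hypothesis that both groups behave the same.
    pooled = (accepted_a + accepted_b) / (shown_a + shown_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / shown_a + 1 / shown_b))
    if se == 0:
        raise ValueError("acceptance rate is 0 or 1 in both groups; test undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: 300/1000 accepted in control, 360/1000 in treatment.
    z, p = two_proportion_z_test(300, 1000, 360, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A negative signal, such as the share of suggestions explicitly dismissed, could be compared the same way, with the expectation that the treatment does not increase it.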

#3 about 2 minutes

Guaranteeing code correctness with semantic checks

Suggestions are validated for semantic correctness by the IDE before being shown to the user, eliminating errors like non-existent variables.
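The idea can be sketched in a few lines for Python suggestions, using the standard `ast` module in place of a real IDE's resolution engine. This is a deliberately simplified stand-in: the function names are hypothetical, only bare names are checked (not attributes or imports), and a name assigned anywhere in the suggestion counts as defined regardless of order.

```python
import ast
import builtins


def undefined_names(suggestion: str, names_in_scope: set[str]) -> set[str]:
    """Return names the suggestion reads that are not defined anywhere known."""
    tree = ast.parse(suggestion)
    names = [node for node in ast.walk(tree) if isinstance(node, ast.Name)]
    assigned = {n.id for n in names if isinstance(n.ctx, ast.Store)}
    loaded = {n.id for n in names if isinstance(n.ctx, ast.Load)}
    known = names_in_scope | assigned | set(dir(builtins))
    return loaded - known


def is_semantically_valid(suggestion: str, names_in_scope: set[str]) -> bool:
    """Reject suggestions that do not parse or that reference unknown names."""
    try:
        return not undefined_names(suggestion, names_in_scope)
    except SyntaxError:
        return False


if __name__ == "__main__":
    scope = {"price", "quantity"}
    print(is_semantically_valid("total = price * quantity", scope))  # valid names
    print(is_semantically_valid("total = price * quantiy", scope))   # misspelled name
```

A suggestion that fails the check is simply never shown, which is what removes errors such as references to non-existent variables.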

#4 about 3 minutes

Using a filter model to reduce user annoyance

A secondary machine learning model predicts the probability of a suggestion being accepted, filtering out suggestions that are correct but unhelpful.
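A minimal sketch of such a filter, assuming a logistic model over a few hand-picked features. The feature set, the weights, and the threshold below are invented for illustration; in practice they would be learned from logged accept and reject events, and the talk summary does not specify the model type.

```python
import math
from dataclasses import dataclass


@dataclass
class SuggestionFeatures:
    """Hypothetical features describing one candidate suggestion."""
    mean_token_log_prob: float  # confidence of the completion model
    length_chars: int           # length of the suggested text
    prefix_chars: int           # characters already typed on the current line


# Made-up coefficients standing in for a trained model.
BIAS = 0.5
W_LOG_PROB = 2.0
W_LENGTH = -0.01
W_PREFIX = 0.1


def acceptance_probability(f: SuggestionFeatures) -> float:
    """Logistic estimate of the chance that the user accepts the suggestion."""
    score = (
        BIAS
        + W_LOG_PROB * f.mean_token_log_prob
        + W_LENGTH * f.length_chars
        + W_PREFIX * f.prefix_chars
    )
    return 1.0 / (1.0 + math.exp(-score))


def should_show(f: SuggestionFeatures, threshold: float = 0.3) -> bool:
    """Show the suggestion only if the predicted acceptance is high enough."""
    return acceptance_probability(f) >= threshold


if __name__ == "__main__":
    confident = SuggestionFeatures(-0.1, 20, 5)
    doubtful = SuggestionFeatures(-2.5, 80, 0)
    print(should_show(confident), should_show(doubtful))
```

The threshold is the tuning knob: raising it shows fewer suggestions and lowers annoyance, at the cost of hiding some suggestions the user would have accepted.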

#5 about 2 minutes

Implementing efficient local model inference

Using a native C++ inference engine such as llama.cpp enables fast, low-level execution of the language model directly on the user's machine.

#6 about 2 minutes

Training small, specialized language models from scratch

Training small, language-specific models in-house is cost-effective and allows for extensive experimentation to optimize performance for local execution.

#7 about 2 minutes

Accelerating development with offline evaluation

An offline evaluation pipeline runs the IDE in a headless mode to test hypotheses quickly, pre-selecting the most promising changes for slower A/B tests.
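The shape of such a pipeline can be sketched as a replay loop over recorded completion points. In the sketch below a plain Python callable stands in for the headless IDE, and exact match against the text the developer actually wrote is used as the score; both are assumptions for illustration, as are all names and the toy data.

```python
from typing import Callable, Iterable

# (code before the caret, text the developer actually wrote next)
Case = tuple[str, str]


def exact_match_rate(complete: Callable[[str], str], cases: Iterable[Case]) -> float:
    """Fraction of cases where the completion equals the recorded continuation."""
    cases = list(cases)
    if not cases:
        raise ValueError("no evaluation cases supplied")
    hits = sum(
        1 for prefix, expected in cases
        if complete(prefix).strip() == expected.strip()
    )
    return hits / len(cases)


if __name__ == "__main__":
    cases = [("for i in ", "range(10):"), ("import ", "os")]

    # Toy stand-ins for two variants of the completion pipeline.
    def baseline(prefix: str) -> str:
        return "range(10):"

    def candidate(prefix: str) -> str:
        return {"for i in ": "range(10):", "import ": "os"}.get(prefix, "")

    print("baseline:", exact_match_rate(baseline, cases))
    print("candidate:", exact_match_rate(candidate, cases))
```

Only variants that beat the baseline offline would then be promoted to an A/B test, which is slower because it needs real user traffic.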

#8 about 1 minute

Structuring the team for a local AI feature

A small, cross-functional team of 10-20 people with diverse skills is an effective structure for delivering complex AI features.

#9 about 6 minutes

Key takeaways for building local AI features

Local AI is a rapidly growing field in which success depends on more than the language model alone: it also requires a focus on security and user experience.

Featured Partners

Related Videos

30:24

30:24ChatGPT: Create a Presentation!

Markus Walker

31:50

31:50Unlocking the Power of AI: Accessible Language Model Tuning for All

Cedric Clyburn & Legare Kerrison

31:39

31:39Livecoding with AI

Rainer Stropek

32:26

32:26Bringing the power of AI to your application.

Krzysztof Cieślak

32:28

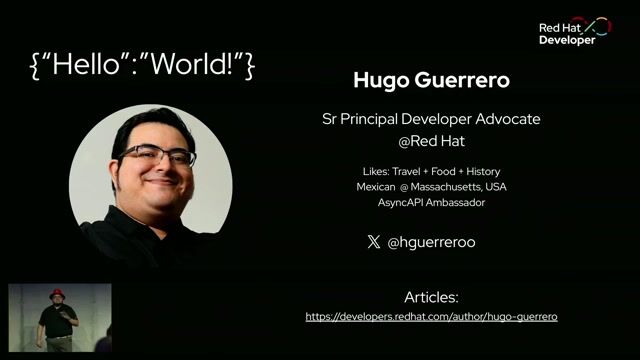

32:28Building APIs in the AI Era

Hugo Guerrero

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn

28:07

28:07How we built an AI-powered code reviewer in 80 hours

Yan Cui

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

AKDB Anstalt für kommunale Datenverarbeitung in Bayern

Köln, Germany

DevOps

Python

Docker

Terraform

Kubernetes

+2

Imec

Azure

Python

PyTorch

TensorFlow

Computer Vision

+1

Spektrum

Braine-l'Alleud, Belgium

Remote

.NET

REST

Azure

Scrum

+10