Roberto Carratalá & Cedric Clyburn

Self-Hosted LLMs: From Zero to Inference

#1 (about 3 minutes)

The rise of self-hosted open source AI models

Self-hosting large language models offers developers greater privacy, cost savings, and control compared to third-party cloud AI services.

#2 (about 2 minutes)

Key benefits of local LLM deployment for developers

Running models locally improves the development inner loop, provides full data privacy, and allows for greater customization and control over the AI stack.

#3 (about 3 minutes)

Comparing open source tools for serving LLMs

Explore different open source tools like Ollama for local development, vLLM for scalable production, and Podman AI Lab for containerized AI applications.
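
As a rough illustration of why these tools are largely interchangeable from the application side: Ollama and vLLM both expose an OpenAI-compatible HTTP API, so one client can target either by swapping the base URL. The sketch below only builds the request and does not send it. The ports are the usual defaults, and the model name `example-model` is a placeholder, not something taken from the talk.

```python
import json
import urllib.request

# Default local base URLs (assumed defaults; adjust to your own setup).
ENDPOINTS = {
    "ollama": "http://localhost:11434/v1",
    "vllm": "http://localhost:8000/v1",
}

def build_chat_request(server: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical model name supplied by the caller
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{ENDPOINTS[server]}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_chat_request("ollama", "example-model", "Say hello.")
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it, provided a server is actually running at that address with the named model loaded.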

#4 (about 3 minutes)

How to select the right open source LLM

Navigate the vast landscape of open source models by understanding different model families, their specific use cases, and naming conventions.

#5 (about 3 minutes)

Using quantization to run large models locally

Model quantization compresses LLMs to reduce their memory footprint, enabling them to run efficiently on consumer hardware like laptops with CPUs or GPUs.
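
To make the memory saving concrete, here is a back-of-the-envelope sketch of how bits per weight translate into the memory needed for the weights alone. It is an approximation under stated assumptions: it ignores the KV cache, activations, and per-format overhead, and the 7-billion-parameter size is just an illustrative choice.

```python
def estimate_weight_memory_gb(num_params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    total_bytes = num_params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9

# Compare common precisions for an illustrative 7B-parameter model.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{estimate_weight_memory_gb(7, bits):.1f} GB")
```

Under these assumptions the weights shrink from roughly 14 GB at 16-bit to roughly 3.5 GB at 4-bit, which is the difference between needing a dedicated GPU and fitting into a typical laptop's memory.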

#6 (about 1 minute)

Strategies for integrating local LLMs with your data

Learn three key methods for connecting local models to your data: Retrieval-Augmented Generation (RAG), local code assistants, and building agentic applications.

#7 (about 6 minutes)

Demo: Building a RAG system with local models

Use Podman AI Lab to serve a local LLM and connect it to AnythingLLM to create a question-answering system over your private documents.
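
The demo relies on AnythingLLM for the retrieval step. To show the underlying idea only, here is a minimal sketch of retrieve-then-prompt, using simple word-count cosine similarity in place of the embedding model and vector store a real RAG system would use. The documents and all function names are hypothetical, and the final prompt would be sent to the locally served model.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]

def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved context and the question into one prompt."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"

# Hypothetical private documents.
docs = [
    "The VPN must be enabled before accessing the staging cluster.",
    "Expense reports are due on the fifth day of each month.",
    "The cafeteria is closed on public holidays.",
]
question = "When are expense reports due?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

Because only the retrieved chunk is placed in the prompt, the model can answer from private documents without being retrained on them.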

#8 (about 5 minutes)

Demo: Setting up a local AI code assistant

Integrate a self-hosted LLM with the Continue VS Code extension to create a private, offline-capable AI pair programmer for code generation and analysis.

#9 (about 4 minutes)

Demo: Building an agentic app with external tools

Create an agentic application that uses a local LLM with external tools via the Model Context Protocol (MCP) to perform complex, multi-step tasks.
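
The core loop of such an application can be sketched as follows. This is a simplified illustration, not the MCP SDK: the tool registry is a plain dictionary, and `fake_model` is a hypothetical stand-in for the local LLM that requests one tool call and then answers. In a real setup the model would choose tools itself and an MCP client would carry out the call.

```python
import json

def add(a: float, b: float) -> float:
    """Example tool: add two numbers."""
    return a + b

# Hypothetical tool registry; with MCP, tools would be discovered from a server.
TOOLS = {"add": add}

def fake_model(messages: list[dict]) -> dict:
    """Stand-in for a local LLM: asks for a tool once, then gives a final answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    return {"content": f"The result is {tool_results[-1]['content']}."}

def run_agent(user_input: str, model=fake_model, max_steps: int = 5) -> str:
    """Alternate between model replies and tool execution until a final answer."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = model(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What is 2 + 3?"))
```

The `max_steps` cap is a design choice to keep a misbehaving model from looping indefinitely on tool calls.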

#10 (about 1 minute)

Conclusion and the future of open source AI

Self-hosting provides a powerful, private, and customizable alternative to third-party services, highlighting the growing potential of open source AI for developers.

Matching moments

01:40 MIN

Addressing data privacy and security in AI systems

Graphs and RAGs Everywhere... But What Are They? - Andreas Kollegger - Neo4j

12:42 MIN

Running large language models locally with Web LLM

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

02:58 MIN

Understanding the benefits of self-hosting large language models

Unveiling the Magic: Scaling Large Language Models to Serve Millions

04:01 MIN

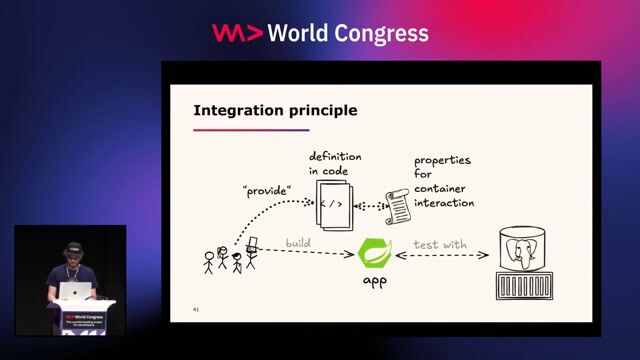

Testing Spring AI applications with local LLMs

What's (new) with Spring Boot and Containers?

04:31 MIN

Leveraging private data with local and small AI models

Decoding Trends: Strategies for Success in the Evolving Digital Domain

02:11 MIN

Using local AI models for code assistance

Building APIs in the AI Era

05:39 MIN

The benefits and challenges of running AI models locally

WeAreDevelopers LIVE - Is AI replacing developers?, Stopping bots, AI on device & more

06:44 MIN

The developer's journey for building AI applications

Supercharge your cloud-native applications with Generative AI

Related Videos

31:25

31:25Unveiling the Magic: Scaling Large Language Models to Serve Millions

Patrick Koss

27:11

27:11Inside the Mind of an LLM

Emanuele Fabbiani

31:50

31:50Unlocking the Power of AI: Accessible Language Model Tuning for All

Cedric Clyburn & Legare Kerrison

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

30:48

30:48One AI API to Power Them All

Roberto Carratalá

42:26

42:26How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

Meta Atamel & Guillaume Laforge

34:21

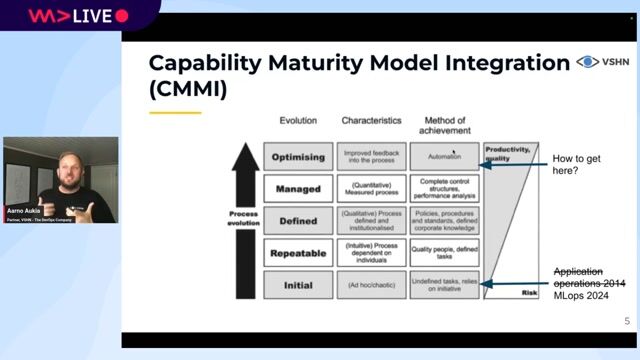

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia
