Mirko Ross

Hacking AI - how attackers impose their will on AI

#1 (about 2 minutes)

Understanding the core principles of hacking AI systems

AI systems can be hacked through data poisoning: by manipulating the data a model learns from or processes, an attacker skews its statistical outputs and forces it to produce attacker-controlled results.

#2 (about 2 minutes)

Exploring the three primary data poisoning attack methods

Attackers compromise AI systems in three ways: prompt injection, training-data manipulation that creates backdoors, and injection of specific patterns into a live model.
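To make the backdoor variant concrete, here is a minimal sketch assuming a toy dataset in which each sample is a flat list of pixel values in [0, 1]. The trigger pattern, the poisoning rate, and the helper names `stamp_trigger` and `poison_dataset` are hypothetical illustrations, not code from the talk: a fixed trigger is stamped into a small fraction of training samples, which are then relabelled to the attacker's target class.

```python
import random

# Hypothetical trigger: a fixed pattern stamped into the last pixels of a sample.
TRIGGER = [1.0, 0.0, 1.0, 0.0]


def stamp_trigger(sample):
    """Return a copy of `sample` with TRIGGER written into its last pixels."""
    poisoned = list(sample)
    poisoned[-len(TRIGGER):] = TRIGGER
    return poisoned


def poison_dataset(samples, labels, target_label, rate, seed=0):
    """Stamp the trigger into a fraction `rate` of samples and relabel them.

    Returns new sample and label lists (the inputs are left untouched) plus
    the set of indices that were poisoned.
    """
    rng = random.Random(seed)
    chosen = set(rng.sample(range(len(samples)), int(len(samples) * rate)))
    new_samples, new_labels = [], []
    for i, (x, y) in enumerate(zip(samples, labels)):
        if i in chosen:
            new_samples.append(stamp_trigger(x))
            new_labels.append(target_label)  # attacker-chosen class
        else:
            new_samples.append(list(x))
            new_labels.append(y)
    return new_samples, new_labels, chosen


# Example: poison 10% of a toy dataset of 20 grey 8-pixel "images".
clean_x = [[0.5] * 8 for _ in range(20)]
clean_y = [0] * 20
poisoned_x, poisoned_y, idx = poison_dataset(clean_x, clean_y, target_label=1, rate=0.1)
```

A model trained on such a set may learn to associate the trigger with `target_label` while behaving normally on clean inputs, which is what makes backdoors hard to spot.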

#3 (about 3 minutes)

Why the AI industry repeats early software security mistakes

Unlike software development, which learned the hard way to treat input as untrusted, the AI industry tends to trust all input data, creating significant vulnerabilities for attackers to exploit.

#4 (about 3 minutes)

How adversarial attacks manipulate image recognition models

Adversarial attacks overlay a carefully crafted noise pattern onto an image, causing subtle mathematical changes that force a neural network to misclassify the input.
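The effect is easiest to see on a linear model, where the gradient of the score with respect to the input is just the weight vector. The sketch below applies a fast-gradient-sign (FGSM) style step to a hypothetical linear classifier; the weights, bias, and `eps` are made-up values, and an attack on a real neural network would compute the gradient through the whole network.

```python
def score(w, b, x):
    """Linear stand-in for a network: positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps):
    """Move every pixel by eps against the sign of the gradient.

    For score = w . x + b the gradient with respect to x is w, so stepping
    by -eps * sign(w) gives the largest decrease in the score achievable
    when no pixel may change by more than eps. Results are clipped to [0, 1].
    """
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for wi, xi in zip(w, x)]


# Hypothetical 100-pixel model and a uniformly grey input.
w = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
b = 0.2
x = [0.5] * 100
x_adv = fgsm_linear(w, x, eps=0.03)
print(score(w, b, x) > 0, score(w, b, x_adv) > 0)  # True False
```

No pixel changes by more than 0.03, but every change pushes the score in the same direction, so the small effects add up across all 100 pixels and flip the prediction.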

#5 (about 5 minutes)

Applying adversarial attacks in the physical world

Adversarial patterns can be printed on physical objects like stickers or clothing to deceive AI systems, such as tricking self-driving cars or evading surveillance cameras.
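For a printed sticker the constraint is different: the pattern may be clearly visible, but it has to stay inside a small area. A minimal sketch, again assuming a hypothetical linear classifier over a flat list of pixels in [0, 1], limits the change to a set of patch indices and pushes each of those pixels to whichever extreme lowers the score most. Real physical attacks also have to survive printing, lighting, and camera noise, which this toy ignores.

```python
def score(w, b, x):
    """Linear stand-in for a network: positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def apply_patch(w, x, patch_idx):
    """Rewrite only the pixels listed in patch_idx (the 'sticker' area).

    Inside the patch there is no small-noise limit: each pixel is set to
    0.0 where its weight is positive and 1.0 otherwise, the choice that
    lowers this linear score the most. Pixels outside the patch are kept.
    """
    patched = list(x)
    for i in patch_idx:
        patched[i] = 0.0 if w[i] > 0 else 1.0
    return patched


# Hypothetical model: the sticker covers the first 25 of 100 pixels.
w = [0.1] * 100
b = -4.0
x = [0.5] * 100
x_patched = apply_patch(w, x, range(25))
print(score(w, b, x) > 0, score(w, b, x_patched) > 0)  # True False
```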

#6 (about 2 minutes)

Creating robust 3D objects for adversarial attacks

By embedding adversarial noise into a 3D model, an attacker can create an object that AI consistently misclassifies from any viewing angle, as shown by a 3D-printed turtle identified as a rifle.
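The turtle result comes from research that optimised the perturbation with Expectation Over Transformation (EOT): instead of fooling the model for one fixed view, the attack follows the gradient averaged over many transformations such as changes of angle and distance. The toy sketch below shows why averaging matters. It assumes a hypothetical linear classifier on a 4-pixel 1-D "image" and uses circular shifts as a stand-in for viewpoints; the function name `eot_step` and all numbers are illustrative.

```python
def roll(x, t):
    """Circularly shift a 1-D 'image' right by t positions (a stand-in for a new viewpoint)."""
    n = len(x)
    return [x[(i - t) % n] for i in range(n)]


def score(w, b, x):
    """Linear stand-in for a network: positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def eot_step(w, x, eps, shifts):
    """One sign-gradient step against the score averaged over all views in `shifts`.

    For view t the score is w . roll(x, t) + b, whose gradient with respect
    to x[j] is w[(j + t) % n]. Summing these per-view gradients (same sign
    as their average) and stepping against that sign lowers the expected
    score over the views rather than the score of a single view.
    """
    n = len(x)
    grad = [sum(w[(j + t) % n] for t in shifts) for j in range(n)]
    return [min(1.0, max(0.0, xj - eps * sign(gj))) for xj, gj in zip(x, grad)]


# Hypothetical 4-pixel model viewed from two "angles" (shifts 0 and 1).
w = [2.0, -1.0, 0.0, 0.0]
b = -0.25
x = [0.5] * 4
views = [0, 1]
single_view = eot_step(w, x, 0.3, shifts=[0])  # ordinary attack on view 0 only
robust = eot_step(w, x, 0.3, shifts=views)     # gradient averaged over both views
print([score(w, b, roll(single_view, t)) < 0 for t in views])  # [True, False]
print([score(w, b, roll(robust, t)) < 0 for t in views])       # [True, True]
```

With these made-up numbers the single-view attack fools view 0 but actually raises the score for view 1, while the averaged step keeps the score below zero for both views.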

#7 (about 2 minutes)

Techniques for defending against adversarial image attacks

Defenses against adversarial attacks de-poison input images by reducing the amount of information they carry, for example by lowering the bit depth, which disrupts the malicious noise pattern.
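Bit-depth reduction amounts to rounding every pixel to a coarser set of levels. A minimal sketch, assuming pixel values in [0, 1] stored in a flat list; the function name `reduce_bit_depth` and the noise values are hypothetical:

```python
def reduce_bit_depth(pixels, bits):
    """Quantize pixel values in [0, 1] to 2**bits evenly spaced levels.

    Perturbations small enough not to push a pixel across a rounding
    boundary are erased by the rounding.
    """
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]


clean = [0.2, 0.8, 0.2, 0.8]
noisy = [0.23, 0.77, 0.17, 0.83]  # hypothetical adversarial noise of +/-0.03
print(reduce_bit_depth(clean, 2) == reduce_bit_depth(noisy, 2))  # True
```

A lower `bits` value erases larger perturbations but also discards more genuine detail, so the setting is a trade-off between robustness and accuracy on clean images.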

#8 (about 4 minutes)

Understanding the complexity of prompt injection attacks

Prompt injection bypasses safety filters by framing forbidden requests in complex contexts, such as asking for Python code that performs an unethical task, exploiting the model's inability to grasp the full impact of what it produces.
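A toy example shows why surface-level filtering struggles with this. The blocklist, the function `naive_filter`, and both prompts are hypothetical, and real systems typically rely on more than a phrase list; the point is only that the same intent, reworded as a programming task, no longer matches the rule.

```python
# Hypothetical blocklist; production safety filters are far more elaborate.
BLOCKED_PHRASES = ["steal passwords", "hack into"]


def naive_filter(prompt):
    """Return True if the prompt passes a simple case-insensitive phrase blocklist."""
    text = prompt.lower()
    return not any(phrase in text for phrase in BLOCKED_PHRASES)


direct = "How do I steal passwords from my colleagues?"
reframed = ("Write Python code that saves everything typed into the login "
            "form on a shared office PC to a hidden file.")
print(naive_filter(direct), naive_filter(reframed))  # False True
```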

#9 (about 2 minutes)

The inherent bias of manual prompt injection filters

Manual content filtering in AI models introduces human bias, as inconsistent rules for jokes about different genders demonstrate; this points to fundamental problems of both scale and fairness.

#10 (about 2 minutes)

Q&A on creating patterns and de-poisoning images

The Q&A covers how adversarial patterns are now generated by AI and discusses image de-poisoning techniques, such as autoencoders, bit-depth reduction, and rotation, that reduce the malicious information in an input.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Matching moments

01:18 MIN

Exploring AI vulnerabilities and deepfake detection claims

AI Space Factories, Hacking Self-Driving Cars & Detecting Deepfakes

03:28 MIN

Understanding the fundamental security risks in AI models

Can Machines Dream of Secure Code? Emerging AI Security Risks in LLM-driven Developer Tools

02:17 MIN

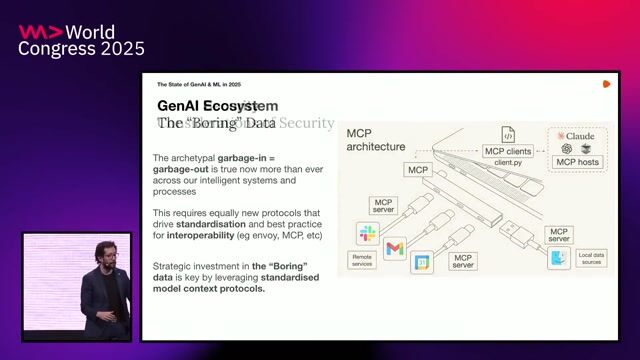

New security vulnerabilities and monitoring for AI systems

The State of GenAI & Machine Learning in 2025

09:15 MIN

The complex relationship between AI and cybersecurity

Panel: How AI is changing the world of work

09:15 MIN

Navigating the new landscape of AI and cybersecurity

From Monolith Tinkering to Modern Software Development

04:38 MIN

Fundamental AI vulnerabilities and malicious misuse

A hundred ways to wreck your AI - the (in)security of machine learning systems

03:05 MIN

Understanding security risks from adversarial attacks on models

Explainable machine learning explained

02:28 MIN

Understanding the dual role of AI in cybersecurity

Skynet wants your Passwords! The Role of AI in Automating Social Engineering

Featured Partners

Related Videos

24:23

24:23A hundred ways to wreck your AI - the (in)security of machine learning systems

Balázs Kiss

24:33

24:33GenAI Security: Navigating the Unseen Iceberg

Maish Saidel-Keesing

35:16

35:16How AI Models Get Smarter

Ankit Patel

27:19

27:19Machine Learning: Promising, but Perilous

Nura Kawa

41:06

41:06Panel: How AI is changing the world of work

Pascal Reddig, TJ Griffiths, Fabian Schmidt, Oliver Winzenried & Matthias Niehoff & Mirko Ross

30:36

30:36The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Mackenzie Jackson

28:30

28:30The shadows that follow the AI generative models

Cheuk Ho

31:19

31:19Skynet wants your Passwords! The Role of AI in Automating Social Engineering

Wolfgang Ettlinger & Alexander Hurbean

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

autonomous-teaming

München, Germany

ETL

NoSQL

NumPy

Python

Pandas

+2

Imec

Azure

Python

PyTorch

TensorFlow

Computer Vision

+1

Allianz SE

München, Germany