Stephan Gillich, Tomislav Tipurić, Christian Wiebus & Alan Southall

The Future of Computing: AI Technologies in the Exascale Era

#1 (about 3 minutes)

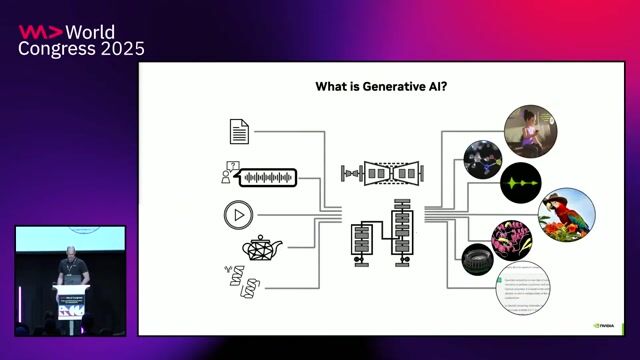

Defining exascale computing and its relevance for AI

Exascale computing, a term that originates from high-performance computing benchmarks, refers to systems capable of at least 10^18 floating-point operations per second (one exaFLOP/s). This level of compute is highly relevant for training large AI models.
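As a rough illustration of why exascale throughput matters for training, the sketch below estimates wall-clock time as total floating-point operations divided by sustained throughput. It assumes one exaFLOP/s of peak compute; the workload size (10^24 FLOPs) and the 40% sustained utilization are hypothetical placeholders, not figures from the talk.

```python
SECONDS_PER_DAY = 86_400
EXAFLOP_PER_SECOND = 1e18  # 1 exaFLOP/s = 10**18 floating-point operations per second


def training_days(total_flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Estimate wall-clock days to execute total_flops at a sustained fraction of peak throughput."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in the interval (0, 1]")
    sustained = peak_flops_per_s * utilization  # FLOP/s actually achieved
    return total_flops / sustained / SECONDS_PER_DAY


# Hypothetical workload: 1e24 FLOPs in total at 40% sustained utilization.
days = training_days(1e24, EXAFLOP_PER_SECOND, 0.4)
print(f"Estimated training time: {days:.1f} days")
```

With these assumed inputs the estimate comes out at roughly 29 days, and halving the utilization doubles it, which is why sustained rather than peak throughput is the figure that matters. Benchmark exaFLOP/s numbers are typically quoted for 64-bit arithmetic, whereas AI training often runs in lower-precision formats.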

#2 (about 4 minutes)

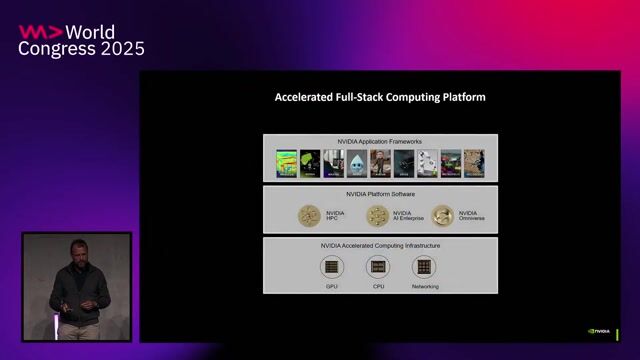

Comparing GPU and CPU architectures for deep learning

GPUs excel at AI tasks because they process matrix operations in a specialized, highly parallel way, while CPUs are being enhanced with features such as Intel Advanced Matrix Extensions (AMX) so that they can handle these workloads as well.
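The parallelism mentioned here comes from the structure of matrix multiplication itself: every output element is an independent dot product, so the work can be split into tiles that do not depend on each other. The plain-Python sketch below shows a blocked (tiled) multiply to make that independence visible. It illustrates the idea only and is not how GPUs or AMX are actually programmed; the tile size of 2 is an arbitrary assumption.

```python
from typing import List

Matrix = List[List[float]]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Reference result: C[i][j] is the dot product of row i of a and column j of b."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)] for i in range(n)]


def matmul_tiled(a: Matrix, b: Matrix, tile: int = 2) -> Matrix:
    """Blocked matrix multiply over tile x tile sub-blocks.

    Each output tile (i0, j0) reads only a band of rows of a and a band of
    columns of b and never depends on another output tile, so on real
    hardware the two outer loops could be spread across parallel units.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):            # output tile rows
        for j0 in range(0, m, tile):        # output tile columns
            for p0 in range(0, k, tile):    # accumulate along the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_tiled(a, b))
```

Hardware matrix units such as GPU tensor cores and AMX tile registers work on small two-dimensional blocks in a similar spirit, which is what lets them keep many multiply-accumulate units busy at once.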

#3 (about 2 minutes)

Implementing machine learning on resource-constrained edge devices

Machine learning is becoming essential on edge devices to improve data quality and services, requiring specialized co-processors to achieve performance within strict power budgets.

#4 (about 4 minutes)

Addressing the growing power consumption of AI computing

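The massive energy demand of data centers for AI training is a major challenge. It can be addressed by improving grid-to-core power efficiency, that is, reducing losses at every conversion stage between the utility grid and the processor cores, and by offloading computation to the edge.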
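Because conversion losses compound, the end-to-end grid-to-core efficiency is the product of the per-stage efficiencies, so even small gains at one stage matter. The short sketch below makes that arithmetic concrete. The stage names and efficiency values are hypothetical examples chosen for illustration, not figures from the talk.

```python
from math import prod

# Hypothetical conversion stages between the utility grid and the processor cores,
# with illustrative efficiencies (not measured values).
STAGES = {
    "ups": 0.95,                 # uninterruptible power supply
    "power_supply_unit": 0.94,   # AC-to-DC conversion in the server
    "voltage_regulator": 0.90,   # DC-to-DC conversion next to the processor
}


def grid_to_core_efficiency(stages: dict) -> float:
    """Losses compound: end-to-end efficiency is the product of the stage efficiencies."""
    return prod(stages.values())


def grid_power_needed(core_watts: float, stages: dict) -> float:
    """Power drawn from the grid to deliver core_watts to the cores."""
    return core_watts / grid_to_core_efficiency(stages)


eff = grid_to_core_efficiency(STAGES)
print(f"End-to-end efficiency: {eff:.1%}")
print(f"Grid power for 1 kW at the cores: {grid_power_needed(1000, STAGES):.0f} W")

# Improving a single stage raises the efficiency of the whole chain.
better = {**STAGES, "voltage_regulator": 0.95}
print(f"With a better regulator: {grid_to_core_efficiency(better):.1%}")
```

With these assumed values, roughly a fifth of the power drawn from the grid never reaches the cores, before any cooling overhead is counted.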

#5 (about 1 minute)

Key security considerations for AI systems and edge devices

Securing AI systems involves a multi-layered approach including secure boot, safe updates, certificate management, and ensuring the trustworthiness of the AI models themselves.

#6 (about 5 minutes)

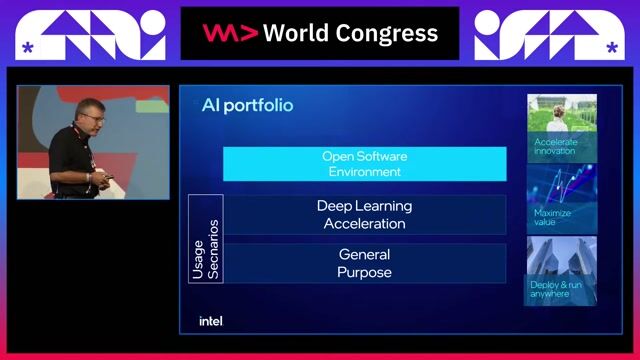

Leveraging open software and AI for code development

Open software stacks enable hardware choice, while development tools and large language models can be used to automatically optimize code for better performance on specific platforms.

#7 (about 8 minutes)

Exploring future computing architectures and industry collaboration

The future of computing will be shaped by power efficiency challenges, leading to innovations in materials like silicon carbide, alternative architectures like neuromorphic computing, and cross-industry partnerships.

#8 (about 3 minutes)

Balancing distributed edge AI with centralized cloud computing

A hybrid architecture that balances local processing on the edge with centralized cloud resources is the most practical approach for AI, optimizing for latency, power, and data privacy based on the specific use case.
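Deciding where a workload runs is ultimately a per-use-case choice. The sketch below is a hypothetical placement rule that weighs the three criteria named above (privacy, latency and power) in a fixed priority order. The `Workload` fields, the ordering and the example numbers are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of one inference workload."""
    latency_budget_ms: float       # maximum acceptable end-to-end response time
    contains_private_data: bool    # must the raw input stay on the device?
    edge_energy_mj: float          # estimated energy per inference on the device (millijoules)
    edge_energy_budget_mj: float   # energy the device can afford per inference (millijoules)


def place(w: Workload, cloud_round_trip_ms: float) -> str:
    """Hypothetical rule returning "edge" or "cloud", checked in priority order."""
    if w.contains_private_data:
        return "edge"   # privacy: raw data never leaves the device
    if w.latency_budget_ms < cloud_round_trip_ms:
        return "edge"   # latency: the network round trip alone exceeds the budget
    if w.edge_energy_mj > w.edge_energy_budget_mj:
        return "cloud"  # power: running locally would exceed the device's energy budget
    return "edge"       # otherwise keep it local and avoid moving data


print(place(Workload(20, False, 5, 10), cloud_round_trip_ms=60))    # tight latency budget
print(place(Workload(500, False, 50, 10), cloud_round_trip_ms=60))  # too costly for the device
```

The fixed priority order is itself a design choice: here privacy overrides power, so a private workload that is too expensive for the device still stays local. A different system might rank the criteria differently.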
