Phil Nash

Build RAG from Scratch

#1 (about 3 minutes)

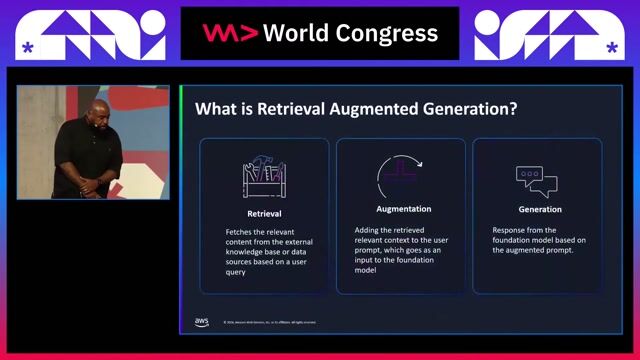

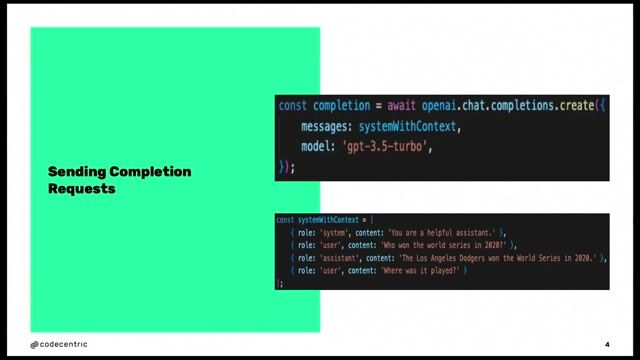

Why large language models need retrieval augmented generation

Large language models have knowledge cutoffs and cannot see private data. RAG addresses both problems by retrieving relevant context and supplying it alongside the question at query time.
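Here is a minimal sketch of the "augmented" step. It assumes retrieval has already returned some text chunks, and it simply places that context ahead of the user's question before the prompt goes to the model. The function name `build_augmented_prompt` and the prompt wording are hypothetical and are not taken from the talk.

```python
def build_augmented_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved context with the user's question (hypothetical template)."""
    # Each retrieved chunk becomes a bullet so the model can tell them apart.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_augmented_prompt(
    "When does the store open?",
    ["The store opens at 9am on weekdays.", "It is closed on public holidays."],
)
print(prompt)
```

The model never needs retraining. Fresh or private knowledge arrives through the prompt, so the quality of the answer depends heavily on the quality of what retrieval returns.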

#2 (about 1 minute)

How similarity search and vector embeddings power RAG

RAG relies on similarity search rather than keyword search. Similarity search captures meaning by converting text into numerical representations called vector embeddings and comparing those.

#3 (about 6 minutes)

Building a simple bag-of-words vectorizer from scratch

A basic vector embedding can be created by tokenizing text, building a vocabulary of unique words, and representing each document as a vector of word counts.
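The three steps (tokenize, build a vocabulary, count words) can be sketched as below. This is an illustrative Python version, not the talk's own code. The lowercase-and-regex tokenizer is a simplifying assumption, and the helper names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits, and apostrophes as tokens.
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocabulary(documents: list[str]) -> list[str]:
    # Unique words across all documents, sorted so each word has a stable position.
    return sorted({token for doc in documents for token in tokenize(doc)})


def vectorize(text: str, vocabulary: list[str]) -> list[int]:
    # One count per vocabulary word; words outside the vocabulary are ignored.
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]


docs = ["the cat sat on the mat", "the dog sat on the log"]
vocab = build_vocabulary(docs)
print(vocab)                    # ['cat', 'dog', 'log', 'mat', 'on', 'sat', 'the']
print(vectorize(docs[0], vocab))  # [1, 0, 0, 1, 1, 1, 2]
```

Every document maps to a vector with one dimension per vocabulary word, so the vector length grows with the vocabulary.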

#4 (about 8 minutes)

Comparing document vectors using cosine similarity

Cosine similarity uses the cosine of the angle between two vectors to judge how semantically close they are. It looks at direction (meaning) rather than magnitude, so a long document and a short one about the same thing can still score as similar.
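Here is a minimal sketch of the standard formula: the dot product divided by the product of the vector lengths. Returning 0.0 when either vector is all zeros is a convention chosen here, since a zero vector has no direction.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumption: treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


print(cosine_similarity([3, 0], [1, 0]))  # 1.0: same direction, different magnitude
print(cosine_similarity([1, 0], [0, 1]))  # 0.0: perpendicular, nothing in common
```

For non-negative vectors such as word counts, the score falls between 0 and 1. Learned embeddings can also produce negative values, down to -1.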

#5 (about 3 minutes)

Understanding the limitations of a bag-of-words model

The simple bag-of-words model depends on exact word matches, gets slower as the vocabulary and document count grow, and fails to capture nuanced meaning such as word order or synonyms.
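The limitations are easy to reproduce. The self-contained sketch below uses made-up example sentences:

- Two sentences with opposite meanings get identical vectors.
- Two sentences with similar meanings but no shared words score zero.
- A query made only of unseen words cannot match anything.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(text: str, vocabulary: list[str]) -> list[int]:
    # Word counts in vocabulary order; unknown words are silently dropped.
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]


def cosine(a: list[int], b: list[int]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norms if norms else 0.0


docs = ["the dog bit the man", "the man bit the dog", "a puppy nipped a person"]
vocab = sorted({word for doc in docs for word in tokenize(doc)})
vectors = [bag_of_words(doc, vocab) for doc in docs]

word_order = cosine(vectors[0], vectors[1])  # word order is lost
synonyms = cosine(vectors[0], vectors[2])    # synonyms share no dimensions
unknown = cosine(bag_of_words("canine chomps human", vocab), vectors[0])  # all zeros
print(word_order, synonyms, unknown)  # 1.0 0.0 0.0
```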

#6 (about 4 minutes)

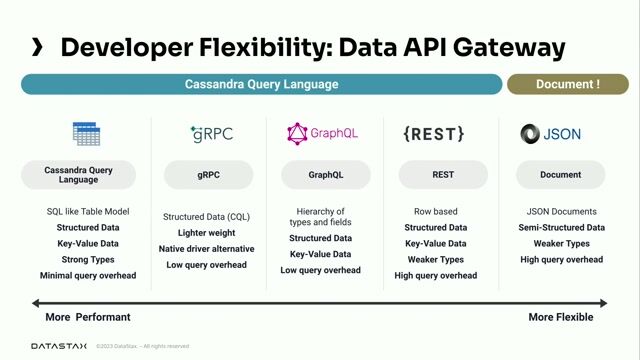

Using professional embedding models and vector databases

Production RAG systems use sophisticated embedding models and specialized vector databases for efficient, accurate, and scalable similarity search.
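To show what a vector database does at its core, here is a toy in-memory store that returns the top-k most similar vectors. It makes several assumptions:

- The class name `InMemoryVectorStore` is hypothetical.
- The 3-dimensional vectors are made-up stand-ins for embedding-model output.
- Search is a brute-force linear scan over every stored vector.

Vector databases typically replace that scan with an approximate nearest-neighbour index (HNSW is a common choice) so that queries stay fast at scale.

```python
import heapq
import math
from dataclasses import dataclass, field


def _normalize(vector: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


@dataclass
class InMemoryVectorStore:
    """Toy store: brute-force cosine search over every stored vector."""
    ids: list[str] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)

    def add(self, doc_id: str, vector: list[float]) -> None:
        # Normalizing once at insert time turns cosine similarity into a dot product.
        self.ids.append(doc_id)
        self.vectors.append(_normalize(vector))

    def query(self, vector: list[float], k: int = 3) -> list[tuple[str, float]]:
        q = _normalize(vector)
        scored = (
            (sum(a * b for a, b in zip(q, v)), doc_id)
            for doc_id, v in zip(self.ids, self.vectors)
        )
        # Keep only the k highest-scoring documents.
        return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]


store = InMemoryVectorStore()
store.add("cats", [0.9, 0.1, 0.0])   # made-up vectors for illustration
store.add("dogs", [0.8, 0.3, 0.1])
store.add("taxes", [0.0, 0.1, 0.95])
print(store.query([1.0, 0.2, 0.0], k=2))  # "cats" first, then "dogs"
```

The same `query` call can drive "related content" suggestions: query with the vector of the document the reader is viewing and skip that document in the results.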

#7 (about 2 minutes)

Exploring advanced RAG techniques and other applications

Beyond basic similarity search, techniques like ColBERT and knowledge graphs can improve retrieval accuracy, and vector search can power features like related content recommendations.
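ColBERT keeps one embedding per token instead of one per document and scores documents with "late interaction" (MaxSim). For each query token, it finds the most similar document token and then sums those best matches. The sketch below shows only that scoring step, under these assumptions:

- The function name `maxsim_score` is hypothetical.
- The 2-D token vectors are made up.
- Real ColBERT produces the token embeddings with a trained BERT-based encoder and uses an index rather than scoring every document.

```python
import math


def _normalize(vector: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def maxsim_score(query_tokens: list[list[float]], doc_tokens: list[list[float]]) -> float:
    """ColBERT-style late interaction: best document match per query token, summed."""
    q = [_normalize(t) for t in query_tokens]
    d = [_normalize(t) for t in doc_tokens]
    return sum(
        max(sum(a * b for a, b in zip(qt, dt)) for dt in d)  # best match for this token
        for qt in q
    )


query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0]]  # covers both query tokens
doc_b = [[1.0, 0.0], [1.0, 0.0]]  # covers only the first query token
print(maxsim_score(query, doc_a), maxsim_score(query, doc_b))  # 2.0 1.0
```

Each query token must find its own match. A document that strongly matches one part of the query but ignores the rest therefore scores lower than one that covers the whole query, a distinction a single averaged vector can blur.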
