Paul Graham

Accelerating Python on GPUs

#1 about 1 minute

The evolution of GPUs from graphics to AI computing

GPUs transitioned from rendering graphics to becoming essential for general-purpose parallel computing and accelerating the deep learning revolution.

#2 about 2 minutes

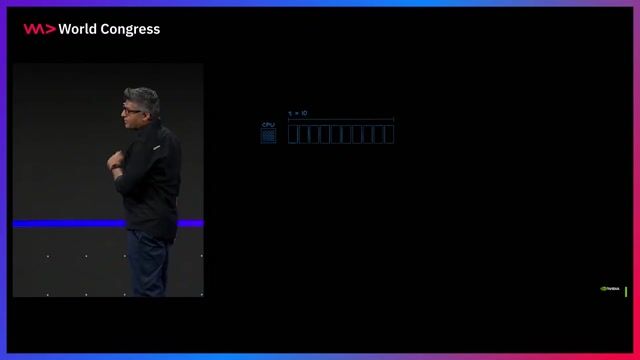

Why GPU acceleration surpasses traditional CPU performance

The plateauing of single-core CPU performance contrasts with the continued exponential growth of GPU parallel processing power, driving the adoption of accelerated computing.

#3 about 2 minutes

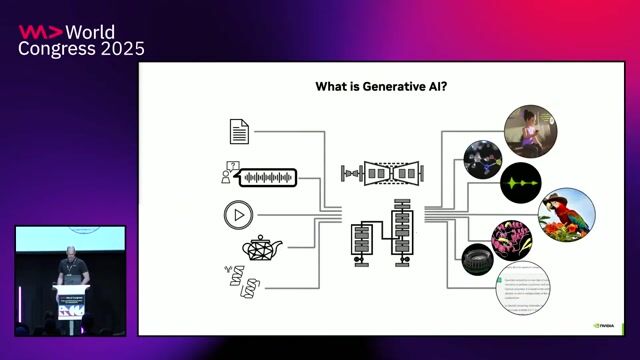

Understanding the CUDA software ecosystem stack

The CUDA platform provides a layered ecosystem, allowing developers to use high-level applications, libraries, or program GPUs directly depending on their needs.

#4 about 3 minutes

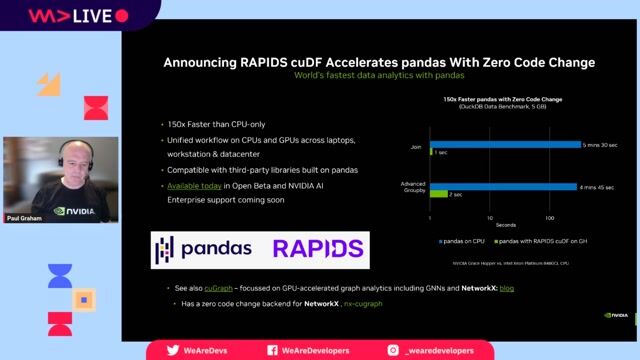

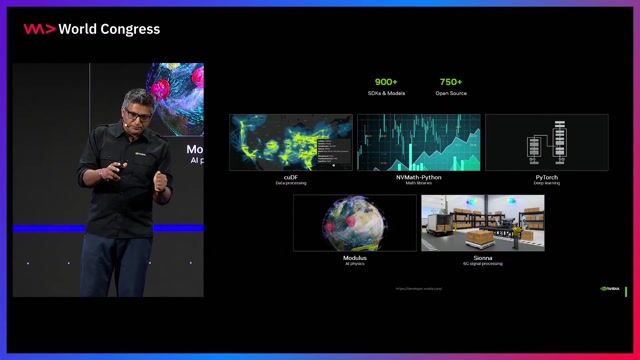

Using high-level frameworks like RAPIDS for data science

RAPIDS provides GPU-accelerated, API-compatible counterparts to popular data science libraries (cuDF for pandas, cuML for scikit-learn), often requiring little or no change to existing code.
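As a rough illustration of the "no code changes" idea, the sketch below is ordinary pandas code. The function name `mean_latency_by_device` and the sample records are made up for this example. Assuming RAPIDS cuDF is installed on a machine with a supported GPU, the same unmodified script can be launched with `python -m cudf.pandas script.py`, which routes supported pandas operations to the GPU and falls back to the CPU for the rest.

```python
import pandas as pd


def mean_latency_by_device(records):
    """Group illustrative timing records by device and average them."""
    df = pd.DataFrame(records)
    return df.groupby("device")["latency_ms"].mean().to_dict()


# Hypothetical sample data, only here to exercise the function.
records = {
    "device": ["gpu", "cpu", "gpu", "cpu"],
    "latency_ms": [1.0, 9.0, 3.0, 11.0],
}

summary = mean_latency_by_device(records)
print(summary)
```

Nothing in the script mentions the GPU, which is the point: the acceleration is chosen at launch time rather than in the source.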

#5 about 2 minutes

Accelerating deep learning with cuDNN and CUTLASS

The cuDNN library provides optimized deep learning primitives for frameworks like PyTorch, while CUTLASS offers direct programming access to Tensor Cores for custom operations.

#6 about 2 minutes

A spectrum of approaches for programming GPUs in Python

Developers can choose from a spectrum of GPU programming approaches in Python, ranging from simple drop-in libraries to directive-based compilers and direct API control.

#7 about 2 minutes

Drop-in libraries like CuPy and cuNumeric for easy acceleration

Libraries like CuPy and cuNumeric offer NumPy-compatible APIs, so that changing a single import statement is often enough to move array code onto the GPU; cuNumeric additionally targets multi-GPU and multi-node scaling.
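The import swap can be sketched as follows. The helper `get_array_module` and the function `normalized_dot` are hypothetical names for this example, and the fallback to NumPy is an assumption added so the snippet also runs on machines without CuPy or a CUDA device. It is not something the talk prescribes.

```python
import numpy as np


def get_array_module():
    """Return CuPy when it is installed and a CUDA device is usable, else NumPy."""
    try:
        import cupy as cp

        # Raises (or reports zero devices) when no driver or GPU is present.
        if cp.cuda.runtime.getDeviceCount() < 1:
            raise RuntimeError("no CUDA device found")
        return cp
    except Exception:
        return np


# The rest of the code only ever talks to `xp`, never to NumPy or CuPy directly.
xp = get_array_module()


def normalized_dot(n):
    """Dot product of [0, 1, ..., n-1] with a vector of ones, divided by n."""
    a = xp.arange(n, dtype=xp.float64)
    b = xp.ones(n, dtype=xp.float64)
    return float(xp.dot(a, b) / n)


print(xp.__name__, normalized_dot(4))
```

Because both libraries expose the same function names, the numerical code stays identical; only the module bound to `xp` changes.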

#8 about 3 minutes

Gaining more control with the Numba JIT compiler

Numba acts as a just-in-time compiler that translates Python functions into optimized GPU code using simple decorators for either automatic vectorization or explicit kernel writing.
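A minimal sketch of the decorator-based vectorization route, assuming Numba is installed. The function name `scaled_sum` is made up for this example, and the fallbacks (CPU target when no GPU is available, plain NumPy when Numba is missing) are additions so the snippet runs anywhere. Explicit kernel writing with `@cuda.jit` is the other route mentioned above and is not shown here.

```python
import numpy as np

try:
    from numba import cuda, vectorize

    # Compile for the GPU when one is usable, otherwise for the CPU.
    _target = "cuda" if cuda.is_available() else "cpu"

    @vectorize(["float64(float64, float64)"], target=_target)
    def scaled_sum(x, y):
        # Written for scalars; Numba turns it into an element-wise array function.
        return 2.0 * x + y

except ImportError:
    # Assumption for portability: without Numba, fall back to NumPy broadcasting.
    def scaled_sum(x, y):
        return 2.0 * np.asarray(x) + np.asarray(y)


a = np.array([1.0, 2.0, 3.0])
b = np.array([10.0, 20.0, 30.0])
result = scaled_sum(a, b)
print(result)
```

The body of `scaled_sum` is plain scalar Python; the decorator decides where and how it is compiled.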

#9 about 1 minute

Achieving maximum flexibility with PyCUDA and C kernels

PyCUDA provides the lowest-level access to the GPU from Python, allowing developers to write and execute raw CUDA C kernels for complete control over hardware features.
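What "raw CUDA C kernels from Python" looks like in practice can be sketched as below. The kernel `scale` and the wrapper `run_scale` are hypothetical names for this example, and the block size of 256 threads is an arbitrary illustrative choice. Running `run_scale` assumes PyCUDA, the CUDA toolkit, and an NVIDIA GPU are available, which is why the PyCUDA imports are deferred to call time.

```python
import numpy as np

# CUDA C source, compiled at run time by PyCUDA.
KERNEL_SRC = r"""
__global__ void scale(float *x, float factor, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        x[i] *= factor;
    }
}
"""


def run_scale(values, factor):
    """Multiply every element of `values` by `factor` on the GPU."""
    # Imported here so this module can be loaded on machines without a GPU.
    import pycuda.autoinit  # noqa: F401  (creates a CUDA context)
    import pycuda.driver as drv
    from pycuda.compiler import SourceModule

    x = np.array(values, dtype=np.float32)  # private, contiguous copy
    n = x.size
    if n == 0:
        return x

    scale = SourceModule(KERNEL_SRC).get_function("scale")
    threads = 256
    blocks = (n + threads - 1) // threads  # enough blocks to cover n elements
    scale(
        drv.InOut(x),          # copied to the device and back
        np.float32(factor),
        np.int32(n),
        block=(threads, 1, 1),
        grid=(blocks, 1),
    )
    return x
```

On a suitable machine, `run_scale([1.0, 2.0, 3.0], 2.0)` would be the way to call it. The trade-off relative to the higher-level options is visible here: grid sizing, data types, and memory transfers are all the caller's responsibility.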

#10 about 2 minutes

Profiling and debugging GPU-accelerated Python code

NVIDIA provides a full suite of Python-enabled developer tools for performance analysis, including Nsight Systems for system-level profiling and Nsight Compute for kernel-level optimization.
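One common way to make Python code readable in a system-level timeline is to mark regions with NVTX ranges. The sketch below assumes the `nvtx` Python package is installed. The `pipeline` function and its stage names are made up for this example, and the no-op fallback is an addition so the script still runs without `nvtx`.

```python
import contextlib

try:
    import nvtx

    annotate = nvtx.annotate
except ImportError:
    # Assumption for portability: without nvtx, annotations become no-ops.
    def annotate(message, **kwargs):
        return contextlib.nullcontext()


def pipeline(n):
    """Toy two-stage workload with one named range per stage."""
    with annotate("load", color="blue"):
        data = list(range(n))
    with annotate("compute", color="green"):
        total = sum(v * v for v in data)
    return total


print(pipeline(4))
```

Assuming the Nsight Systems command-line tool is installed, running the script as `nsys profile python script.py` records a report in which the `load` and `compute` ranges appear as labelled spans alongside CPU and GPU activity.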

#11 about 2 minutes

Accessing software, models, and training resources

NVIDIA offers extensive resources including the NGC catalog for containerized software, pre-trained models, and the Deep Learning Institute for self-paced training courses.

Matching moments

01:07 MIN

The evolution of GPU programming with Python

Accelerating Python on GPUs

05:12 MIN

Boosting Python performance with the Nvidia CUDA ecosystem

The weekly developer show: Boosting Python with CUDA, CSS Updates & Navigating New Tech Stacks

10:18 MIN

A progressive approach to programming GPUs in Python

Accelerating Python on GPUs

02:47 MIN

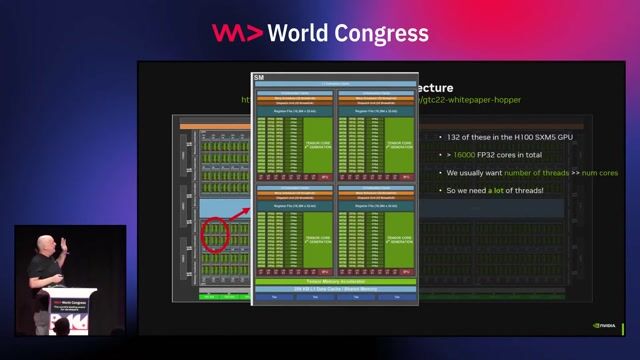

Understanding accelerated computing and GPU parallelism

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

02:28 MIN

Navigating the CUDA Python software ecosystem

Accelerating Python on GPUs

01:33 MIN

A look at upcoming Python GPU programming tools

Accelerating Python on GPUs

04:05 MIN

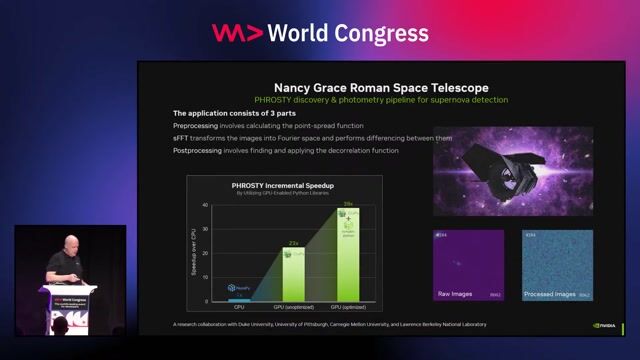

Using NVIDIA libraries to easily accelerate applications

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

02:56 MIN

Using high-level frameworks like Rapids for acceleration

Accelerating Python on GPUs

Featured Partners

Related Videos

24:39

24:39Accelerating Python on GPUs

Paul Graham

59:43

59:43Accelerating Python on GPUs

Paul Graham

21:02

21:02CUDA in Python

Andy Terrel

22:07

22:07WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

Ankit Patel

29:52

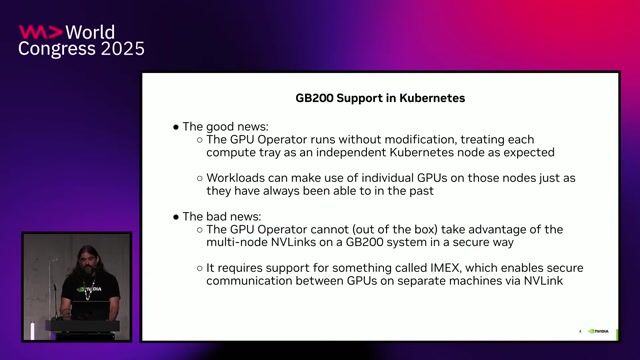

29:52Your Next AI Needs 10,000 GPUs. Now What?

Anshul Jindal & Martin Piercy

37:32

37:32Coffee with Developers - Stephen Jones - NVIDIA

Stephen Jones

25:29

25:29Python: Behind the Scenes

Diana Gastrin

32:19

32:19A Deep Dive on How To Leverage the NVIDIA GB200 for Ultra-Fast Training and Inference on Kubernetes

Kevin Klues
