Merrill Lutsky

Evaluating AI models for code comprehension

#1about 2 minutes

The challenge of reviewing exponentially growing AI-generated code

The rapid increase in AI-generated code creates a significant bottleneck in the software development lifecycle, particularly in the code review stage.

#2about 3 minutes

How AI code generation strains the developer outer loop

While AI accelerates code writing (the inner loop), it overwhelms the critical outer loop processes of testing, reviewing, and deploying code.

#3about 1 minute

Introducing Diamond, an AI agent for automated code review

Graphite's AI agent, Diamond, acts as an always-on senior engineer within GitHub to summarize, prioritize, and review every code change.

#4about 3 minutes

Using comment acceptance rate to measure AI review quality

The primary metric for a successful AI reviewer is the acceptance rate of its comments, as every high-signal comment should result in a code change.

#5about 1 minute

Why evaluations are the key lever for LLM performance

Unlike traditional machine learning, optimizing large language models relies heavily on a robust evaluation process rather than other levers like feature engineering.

#6about 2 minutes

A methodology for evaluating AI code comprehension models

Models are evaluated against a large dataset of pull requests using two core metrics: matched comment rate for recall and unmatched comment rate for noise.

#7about 3 minutes

A comparative analysis of GPT-4.0, Opus, and Gemini

A detailed comparison reveals that models like GPT-4.0 excel at precision while Gemini has the best recall, but no single model wins on all metrics.

#8about 2 minutes

Evaluating Sonnet models and the problem of AI noise

Testing reveals that Sonnet 4.0 generates the most noise, making it less suitable for high-signal code review compared to its predecessors.

#9about 2 minutes

Why Sonnet 3.7 offers the best balance for code review

Sonnet 3.7 is the chosen model because it provides the optimal blend of strong reasoning, high recall of important issues, and low generation of noisy comments.

#10about 3 minutes

The critical role of continuous evaluation for new models

The key to leveraging AI effectively is to constantly re-evaluate new models, as shown by preliminary tests on GR four which revealed significant performance gaps.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Eltemate

Amsterdam, Netherlands

Intermediate

Senior

TypeScript

Continuous Integration

+1

Matching moments

06:05 MIN

Reviewing new developer tools and comparing AI image models

WeAreDevelopers LIVE – Building on Algorand: Real Projects and Developer Tools

02:01 MIN

Comparing LLM performance and planning next steps

Build Your First AI Assistant in 30 Minutes: No Code Workshop

02:27 MIN

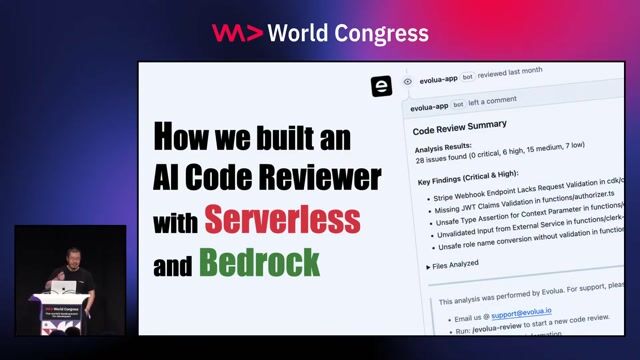

An overview of an AI-powered code reviewer

How we built an AI-powered code reviewer in 80 hours

05:28 MIN

The limitations and potential of AI models

Coffee with Developers - Cassidy Williams -

02:24 MIN

Prototyping a basic AI code review agent

The Limits of Prompting: ArchitectingTrustworthy Coding Agents

04:17 MIN

Analyzing the developer productivity funnel for GenAI tools

The State of GenAI & Machine Learning in 2025

04:02 MIN

Q&A on AI limitations and practical application

How to become an AI toolsmith

07:06 MIN

Critically evaluating AI-powered performance analysis tools

AI is an Electric Bike for the Brain - Stoyan Stefanov

Featured Partners

Related Videos

28:07

28:07How we built an AI-powered code reviewer in 80 hours

Yan Cui

31:39

31:39Livecoding with AI

Rainer Stropek

24:05

24:05The Limits of Prompting: ArchitectingTrustworthy Coding Agents

Nimrod Kor

15:26

15:26New AI-Centric SDLC: Rethinking Software Development with Knowledge Graphs

Gregor Schumacher, Sujay Joshy & Marcel Gocke

27:07

27:07Bringing AI Model Testing and Prompt Management to Your Codebase with GitHub Models

Sandra Ahlgrimm & Kevin Lewis

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

50:12

50:12Google Gemini: Open Source and Deep Thinking Models - Sam Witteveen

Sam Witteveen

25:34

25:34Engineering Productivity: Cutting Through the AI Noise

Himanshu Vasishth, Mindaugas Mozūras, Jackie Brosamer & Lukas Pfeiffer

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Imec

Azure

Python

PyTorch

TensorFlow

Computer Vision

+1

Amazon.com, Inc

Shoreham-by-Sea, United Kingdom

XML

HTML

JSON

Python

Data analysis

+1

MedAscend

Killin, United Kingdom

Remote

£52K

Senior

API

React

Docker

+4

Graphcore

Cambridge, United Kingdom

Senior

C++

Python

PyTorch

Kubernetes

Machine Learning