Nimrod Kor

The Limits of Prompting: ArchitectingTrustworthy Coding Agents

#1about 2 minutes

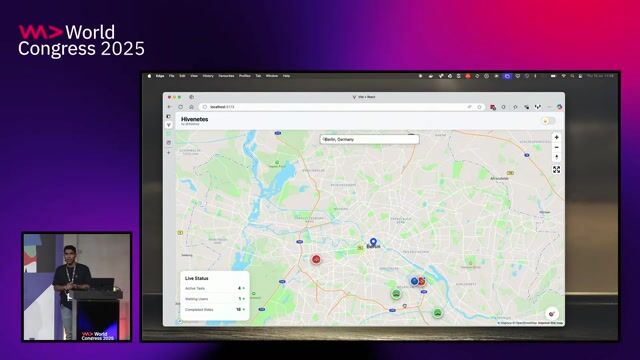

Prototyping a basic AI code review agent

A simple prototype using a GitHub webhook and a single LLM call reveals the potential for understanding code semantics beyond static analysis.

#2about 2 minutes

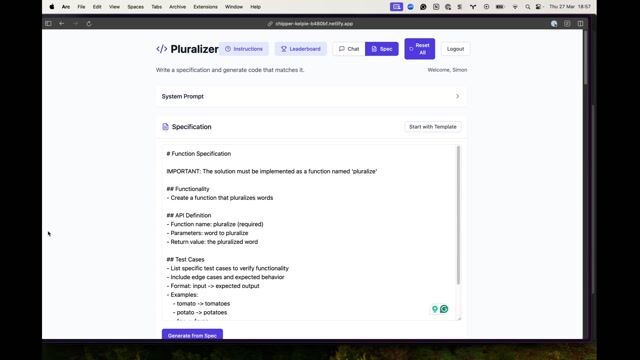

Iteratively improving prompts to handle edge cases

Simple prompts fail to consider developer comments or model knowledge cutoffs, requiring more detailed instructions to improve accuracy.

#3about 5 minutes

Establishing a robust benchmarking process for agents

A reliable benchmarking pipeline uses a large dataset, concurrent execution, and an LLM-as-a-judge (LLJ) to measure and track performance improvements.

#4about 2 minutes

Decomposing large tasks into specialized agents

To combat inconsistency and hallucinations, a single large task like code review is broken down into multiple smaller, specialized agents.

#5about 6 minutes

Leveraging codebase context for deeper insights

Moving beyond prompts, providing codebase context via vector similarity (RAG) and module dependency graphs (AST) unlocks high-quality, human-like feedback.

#6about 3 minutes

Introducing Awesome Reviewers for community standards

Awesome Reviewers is a collection of prompts derived from open-source projects that can be used to enforce team-specific coding standards.

#7about 1 minute

Key takeaways for building reliable LLM agents

The path to a reliable agent involves starting with a proof-of-concept, benchmarking rigorously, using prompt engineering for quick fixes, and investing in deep context.

Related jobs

Jobs that call for the skills explored in this talk.

ROSEN Technology and Research Center GmbH

Osnabrück, Germany

Senior

TypeScript

React

+3

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Matching moments

03:29 MIN

The evolution from prompt engineering to context engineering

Engineering Productivity: Cutting Through the AI Noise

04:43 MIN

The limitations and frustrations of coding with LLMs

WAD Live 22/01/2025: Exploring AI, Web Development, and Accessibility in Tech with Stefan Judis

02:27 MIN

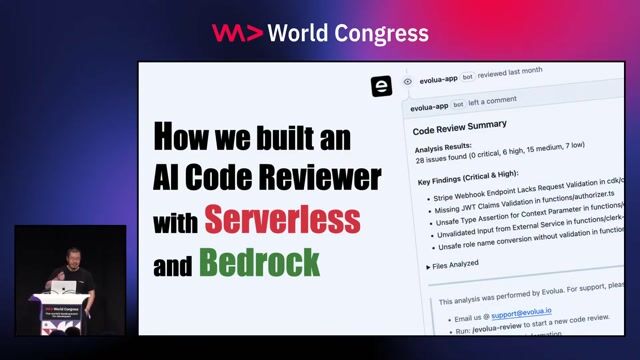

An overview of an AI-powered code reviewer

How we built an AI-powered code reviewer in 80 hours

03:31 MIN

Effective prompting and defensive coding for LLMs

Lessons Learned Building a GenAI Powered App

02:33 MIN

Why you need to prompt large language models like a child

Developers vs Scammers, Bad Design, AI is Pointless, AJAX is 20 and more - The Best of LIVE 2025 - Part 1

02:58 MIN

Shifting from traditional code to AI-powered logic

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

02:21 MIN

The danger of over-engineering with LLMs

Event-Driven Architecture: Breaking Conversational Barriers with Distributed AI Agents

04:56 MIN

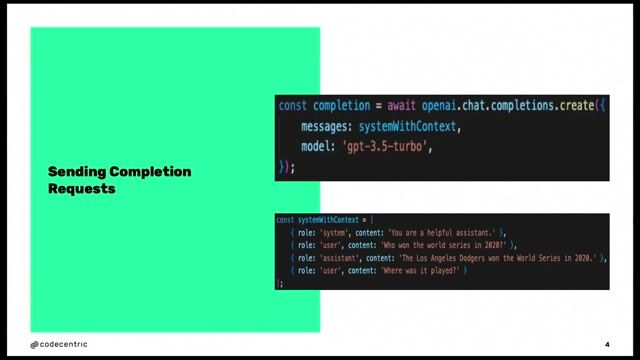

Understanding when prompting fails and how LLMs process requests

The Power of Prompting with AI Native Development - Simon Maple

Featured Partners

Related Videos

28:07

28:07How we built an AI-powered code reviewer in 80 hours

Yan Cui

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

31:12

31:12Using LLMs in your Product

Daniel Töws

32:26

32:26Bringing the power of AI to your application.

Krzysztof Cieślak

30:48

30:48The AI Agent Path to Prod: Building for Reliability

Max Tkacz

33:28

33:28Prompt Engineering - an Art, a Science, or your next Job Title?

Maxim Salnikov

25:32

25:32Beyond Prompting: Building Scalable AI with Multi-Agent Systems and MCP

Viktoria Semaan

23:24

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

TheVentury FlexCo

Vienna, Austria

€47-51K

Intermediate

Senior

AI Frameworks

AI-assisted coding tools

Robert Ragge GmbH

Senior

API

Python

Terraform

Kubernetes

A/B testing

+3

Mindrift

Remote

£41K

Junior

JSON

Python

Data analysis

+1

In Space BV

Eindhoven, Netherlands

Remote

€5-7K

Senior

Continuous Integration

Logiq's Architecture Practice

Bristol, United Kingdom

ETL

Azure

DevOps

Python

Gitlab

+5