Simon A.T. Jiménez

Three years of putting LLMs into Software - Lessons learned

#1 about 4 minutes

Understanding the fundamental nature of LLMs

LLMs are unreliable pattern matchers that appear intelligent but lack true understanding, requiring developers to manage context and anticipate failures.
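
One way to anticipate failures is to treat every response as untrusted text: validate it, and retry when it does not match the expected shape. The sketch below is illustrative only. `call_with_validation` and `make_flaky_model` are hypothetical helpers, and the "model" is a stub that returns canned strings rather than a real API client.

```python
import json

def call_with_validation(model, prompt, required_keys, max_attempts=3):
    """Call the model until it returns a JSON object containing required_keys.

    Returns the parsed dict, or None if every attempt fails validation.
    """
    for _ in range(max_attempts):
        raw = model(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # the model answered with prose or broken JSON; try again
        if isinstance(data, dict) and set(required_keys).issubset(data):
            return data
    return None

def make_flaky_model(responses):
    """Hypothetical stand-in for an LLM client: replays canned responses in order."""
    iterator = iter(responses)
    return lambda prompt: next(iterator)

# The first reply ignores the requested format, the second one is usable.
model = make_flaky_model(["Sure! Here is the JSON:", '{"sentiment": "positive"}'])
result = call_with_validation(model, "Classify: great talk", {"sentiment"})
print(result)
```

Returning `None` instead of raising leaves the fallback decision (default value, human review, error message) to the caller.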

#2 about 4 minutes

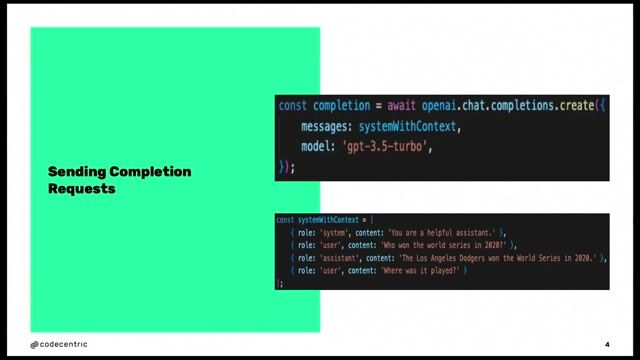

Controlling LLM output with API parameters

API parameters such as temperature and top_p control how deterministic or creative an LLM's responses are by reshaping the token selection probabilities.
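
To make the effect on token probabilities concrete, here is a minimal sketch of temperature scaling followed by top-p (nucleus) filtering. It assumes the common textbook definitions of both parameters; real providers may implement the details differently. The logits are made-up values for a four-token vocabulary.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p=1.0):
    """Keep the most likely tokens until their cumulative probability reaches top_p,
    then renormalize. Returns a dict mapping token index to probability."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}

# Hypothetical logits for a four-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0]
sharp = softmax_with_temperature(logits, temperature=0.5)  # more deterministic
flat = softmax_with_temperature(logits, temperature=2.0)   # more varied
nucleus = top_p_filter(softmax_with_temperature(logits), top_p=0.8)
print(sharp[0] > flat[0], sorted(nucleus))
```

With these logits, the low temperature concentrates most of the probability on the first token, while `top_p=0.8` discards the two least likely tokens before sampling.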

#3 about 7 minutes

Viewing LLMs as a new kind of API

LLMs should be treated as a new type of API for text manipulation, not as intelligent agents, because they are advanced pattern matchers with significant limitations.

#4 about 5 minutes

Implementing practical LLM use cases in software

LLMs can be used for tasks like audio transcription, image analysis for OCR, and text reformulation by providing clear instructions and examples in the prompt.
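
Providing "clear instructions and examples" often takes the form of a few-shot prompt. The sketch below assembles one for text reformulation. The `role`/`content` message layout is an assumption borrowed from common chat-style APIs, and `build_few_shot_messages` is a hypothetical helper, not something shown in the talk.

```python
def build_few_shot_messages(instruction, examples, user_text):
    """Build a chat-style message list: one instruction, worked examples, then the real input."""
    messages = [{"role": "system", "content": instruction}]
    for source, target in examples:
        # Each example is shown as a user input followed by the desired answer.
        messages.append({"role": "user", "content": source})
        messages.append({"role": "assistant", "content": target})
    messages.append({"role": "user", "content": user_text})
    return messages

messages = build_few_shot_messages(
    instruction="Rewrite the text in a polite, formal tone. Reply with the rewritten text only.",
    examples=[
        ("send me the report now", "Could you please send me the report at your earliest convenience?"),
        ("this is wrong, fix it", "There appears to be an error. Could you please correct it?"),
    ],
    user_text="i need the invoice today",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

The resulting list could then be passed to whichever client library is in use; that call is left out here because it depends on the provider.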

#5 about 4 minutes

Navigating legal compliance and data privacy

Using paid APIs with data privacy contracts, implementing human-in-the-loop workflows, and understanding the European AI Act are crucial for legal compliance.

#6 about 2 minutes

Understanding the security risks of AI integrations

Integrating LLMs with external APIs or internal data creates significant security risks like prompt injection, requiring careful control over the AI's permissions and actions.
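
One common way to limit the damage of prompt injection is to let the model only propose actions, while the application decides which ones may actually run. The sketch below uses an allow-list for that purpose. The tool names, the `call` dictionary shape, and `execute_tool_call` are all hypothetical and chosen for illustration.

```python
def search_docs(query):
    """Harmless read-only tool."""
    return f"results for {query!r}"

def delete_user(user_id):
    """Dangerous tool that model output should never be able to trigger."""
    raise RuntimeError("delete_user must not be reachable from model output")

TOOLS = {"search_docs": search_docs, "delete_user": delete_user}

# Only tools listed here may be invoked on behalf of the model.
ALLOWED_TOOLS = frozenset({"search_docs"})

def execute_tool_call(call, allowed=ALLOWED_TOOLS):
    """Run a model-proposed tool call only if the tool is on the allow-list."""
    name = call.get("name")
    if name not in allowed or name not in TOOLS:
        return {"ok": False, "error": f"tool {name!r} is not permitted"}
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        return {"ok": False, "error": "arguments must be an object"}
    return {"ok": True, "result": TOOLS[name](**arguments)}

# A benign call, and one that an injected prompt might try to trigger.
safe = execute_tool_call({"name": "search_docs", "arguments": {"query": "refund policy"}})
blocked = execute_tool_call({"name": "delete_user", "arguments": {"user_id": 42}})
print(safe)
print(blocked)
```

The check lives in ordinary application code rather than in the prompt, so instructions smuggled into the model's input cannot switch it off.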

Matching moments

01:47 MIN

Three pillars for integrating LLMs in products

Using LLMs in your Product

04:47 MIN

Why natural voice AI has been so difficult

Hello JARVIS - Building Voice Interfaces for Your LLMS

05:18 MIN

Addressing the core challenges of large language models

Accelerating GenAI Development: Harnessing Astra DB Vector Store and Langflow for LLM-Powered Apps

02:55 MIN

Addressing the key challenges of large language models

Large Language Models ❤️ Knowledge Graphs

06:55 MIN

Demonstrating LLM hallucinations with tricky questions

Give Your LLMs a Left Brain

02:33 MIN

Why you need to prompt large language models like a child

Developers vs Scammers, Bad Design, AI is Pointless, AJAX is 20 and more - The Best of LIVE 2025 - Part 1

02:35 MIN

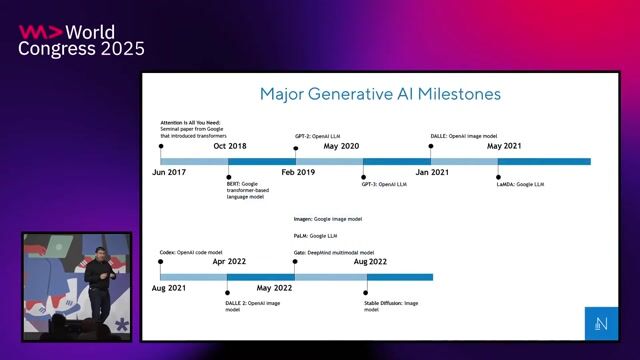

The rapid evolution and adoption of LLMs

Building Blocks of RAG: From Understanding to Implementation

07:57 MIN

Demystifying AI by exploring human language processing

WeAreDevelopers LIVE – PHP Is Alive and Kicking and More
