Andreas Erben

You are not my model anymore - understanding LLM model behavior

#1 about 2 minutes

Unexpected LLM behavior from hidden platform updates

A practical demonstration shows how a cloud provider's content filter update can unexpectedly block access to documents, causing application failures.

#2 about 3 minutes

How LLMs generate text and learn behavior

Large language models use a transformer architecture to predict the next token based on probability, with instruction tuning and alignment shaping their final behavior.
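
As a rough illustration of "predicting the next token based on probability", the sketch below turns raw scores (logits) into a probability distribution with softmax and then samples one token. The three-word vocabulary and the scores are made up for the example, and the helper names `softmax` and `sample_next_token` are hypothetical. A real model computes the logits with a transformer over a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Draw one token at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical toy vocabulary and scores, not taken from any real model.
vocab = ["cat", "dog", "model"]
logits = [1.0, 2.0, 4.0]

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # always pick the most likely token
sampled = sample_next_token(vocab, logits, rng=random.Random(0))
```

Lower temperatures concentrate probability on the top-scoring token, while higher temperatures flatten the distribution. That is one reason the same prompt can produce different outputs across calls.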

#3 about 2 minutes

The opaque and complex stack of modern LLM services

Major LLM providers operate in secrecy, and the full technology stack from model weights to the API is complex, leaving developers with limited visibility and control.

#4 about 3 minutes

Managing risks from provider filters and short API lifecycles

Cloud provider content filters can change without notice, creating vulnerabilities, while the short lifecycle of model APIs requires constant adaptation.

#5 about 4 minutes

Understanding LLMs as alien minds with fragile alignment

LLMs are conceptually like alien intelligences with a fragile, human-like alignment layer that can be bypassed by jailbreaks exploiting internal model circuits.

#6 about 2 minutes

How model personalities and behaviors shift between versions

Different LLM versions exhibit distinct behaviors and may ignore system prompts, as shown by a comparison between GPT-4 and a newer reasoning model.

#7 about 3 minutes

Using evaluations to systematically test model behavior

Systematically test model behavior using evaluations, which can be automated by generating prompt variations or using pre-built cloud and open-source frameworks.
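
A minimal sketch of what such an evaluation could look like, assuming the model is reachable through a plain Python callable. Here `fake_model` is a hypothetical stand-in that would be replaced with a real API call, and the templates, fillers, and pass criterion are invented for illustration. The idea is to generate prompt variations, run each one, and track the pass rate so that a behavior change after a model or filter update shows up as a number.

```python
from itertools import product


def generate_variations(templates, fillers):
    """Cross every prompt template with every filler value."""
    return [t.format(item=f) for t, f in product(templates, fillers)]


def run_eval(model, prompts, check):
    """Run each prompt through the model and score the output with check()."""
    results = [(p, check(model(p))) for p in prompts]
    if not results:
        return 0.0, []
    passed = sum(1 for _, ok in results if ok)
    failures = [p for p, ok in results if not ok]
    return passed / len(results), failures


def fake_model(prompt):
    # Hypothetical stand-in for a real model call; it "refuses" some prompts.
    return "REFUSED" if "password" in prompt else "OK: " + prompt


templates = ["Summarize {item}.", "Please summarize {item} briefly."]
fillers = ["the report", "the password file"]

prompts = generate_variations(templates, fillers)
pass_rate, failures = run_eval(
    fake_model, prompts, check=lambda out: out.startswith("OK")
)
```

Storing `pass_rate` per model version and re-running the same prompt set after each provider update gives a simple regression signal. The pre-built frameworks mentioned above offer richer scoring than this string check.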

#8 about 4 minutes

Using prompt engineering to mitigate model drift

Mitigate model behavior drift with prompt engineering techniques such as forcing the model to reason before it answers, providing few-shot examples, and writing highly explicit instructions.
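
The three techniques can be combined in one prompt. The sketch below is one possible way to do that, not the speaker's implementation. It assumes a list of role/content messages, a shape that mirrors common chat-style APIs. The function name `build_messages`, the rules, and the example pairs are all hypothetical.

```python
def build_messages(rules, examples, user_input):
    """Assemble a chat prompt with explicit rules, forced reasoning, and few-shot examples."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, 1))
    system = (
        "Follow these rules exactly:\n"
        + numbered
        # Forced reasoning: ask for the steps first, then a marked final answer.
        + "\nThink step by step, then give the final answer on a line "
        "starting with 'ANSWER:'."
    )
    messages = [{"role": "system", "content": system}]
    # Few-shot examples: each pair shows the model the expected output format.
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_input})
    return messages


# Hypothetical rules and examples for a sentiment-labeling task.
rules = ["Answer only with POSITIVE or NEGATIVE.", "Do not add commentary."]
examples = [
    ("The update broke my app.", "The user reports a failure.\nANSWER: NEGATIVE"),
    ("The new model is faster.", "The user reports an improvement.\nANSWER: POSITIVE"),
]
messages = build_messages(rules, examples, "The filter blocked my documents.")
```

Keeping the prompt assembly in one function makes it easier to re-run the same prompt against a new model version and compare the results.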

Matching moments

04:34 MIN

Analyzing the risks and architecture of current AI models

Opening Keynote by Sir Tim Berners-Lee

02:58 MIN

Shifting from traditional code to AI-powered logic

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

05:18 MIN

Addressing the core challenges of large language models

Accelerating GenAI Development: Harnessing Astra DB Vector Store and Langflow for LLM-Powered Apps

02:19 MIN

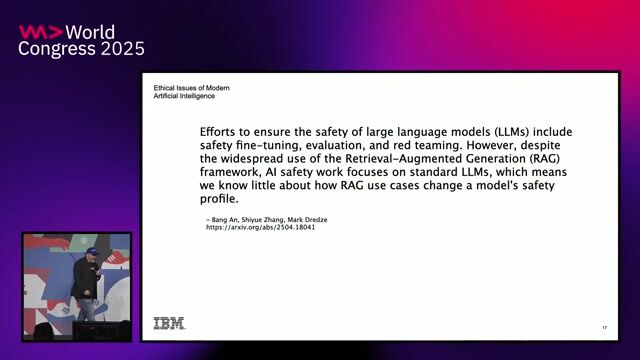

The ethical risks of outdated and insecure AI models

AI & Ethics

05:39 MIN

Understanding the GenAI lifecycle and its operational challenges

LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

02:55 MIN

Addressing the key challenges of large language models

Large Language Models ❤️ Knowledge Graphs

03:18 MIN

The challenge of moving AI from demo to production

What’s New with Google Gemini?

03:43 MIN

AI privacy concerns and prompt engineering

Coffee with Developers - Cassidy Williams -

Related Videos

26:30

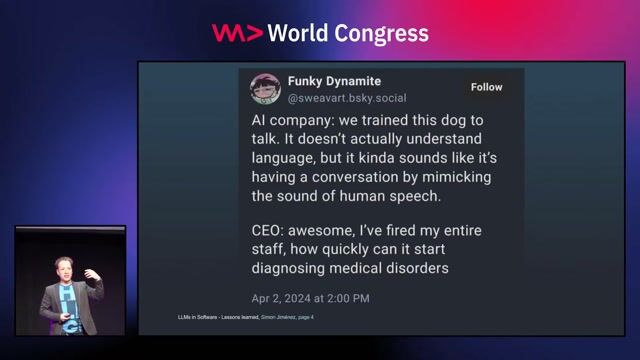

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

35:16

35:16How AI Models Get Smarter

Ankit Patel

23:24

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

27:11

27:11Inside the Mind of an LLM

Emanuele Fabbiani

25:27

25:27From Traction to Production: Maturing your GenAIOps step by step

Maxim Salnikov

32:26

32:26Bringing the power of AI to your application.

Krzysztof Cieślak