Max Tkacz

The AI Agent Path to Prod: Building for Reliability

#1about 4 minutes

Why AI agents fail in production environments

AI agents often fail in production because the probabilistic nature of LLMs conflicts with the need for reliability at scale.

#2about 5 minutes

Scoping an AI agent for a specific business problem

Start by identifying a low-risk, high-impact task, like automating free trial extensions, to establish a viable solution scope.

#3about 3 minutes

Walking through the naive V1 customer support agent

The initial agent uses an LLM with tools to fetch user data and extend trials, but its reliability is unknown without testing.

#4about 4 minutes

Using evaluations to test the happy path case

Evaluations are introduced as a testing framework to run the agent against specific test cases, revealing inconsistencies even in the happy path.

#5about 4 minutes

Improving agent consistency with prompt engineering

By adding explicit rules and few-shot examples to the system prompt, the agent's tool usage and response quality become more consistent.

#6about 5 minutes

Testing for prompt injection and other edge cases

A new evaluation case for prompt injection reveals a security flaw, which is fixed by adding specific security rules to the system prompt.

#7about 6 minutes

Applying production guardrails beyond evaluations

Beyond evals, production readiness requires adding human-in-the-loop processes, custom error handling, rate limiting, and model redundancy.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Eltemate

Amsterdam, Netherlands

Intermediate

Senior

TypeScript

Continuous Integration

+1

Matching moments

03:18 MIN

The challenge of moving AI from demo to production

What’s New with Google Gemini?

02:05 MIN

Moving agentic AI from proof of concept to production

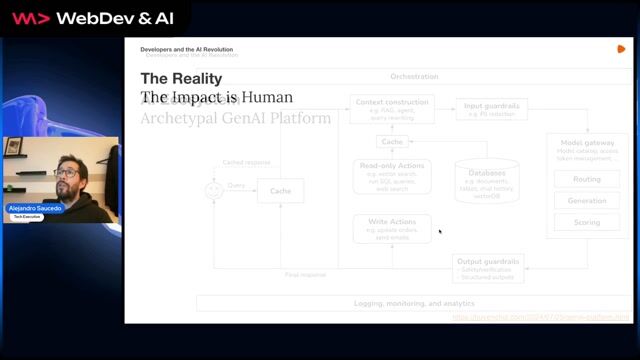

Building Blocks for Agentic Solutions in your Enterprise

04:51 MIN

Overcoming the challenges of productionizing AI models

Navigating the AI Revolution in Software Development

01:06 MIN

Key takeaways for building reliable LLM agents

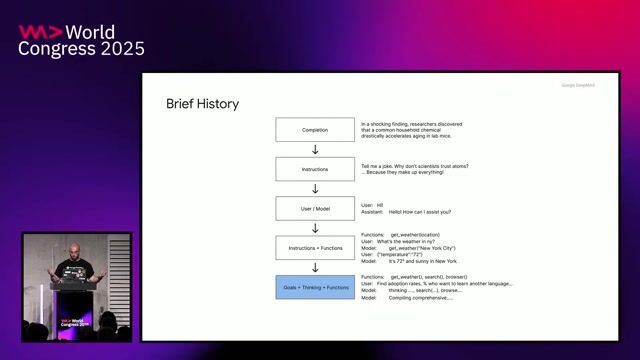

The Limits of Prompting: ArchitectingTrustworthy Coding Agents

05:19 MIN

The challenge of building production-ready AI applications

One AI API to Power Them All

02:17 MIN

The promise and pitfalls of implementing agentic AI

Rethinking Customer Experience in the Age of AI

06:44 MIN

Live demonstration of deploying Azure resources from a prompt

Infrastructure as Prompts: Creating Azure Infrastructure with AI Agents

03:31 MIN

Previewing the "AI or knockout" conference talk

From Learning to Leading: Why HR Needs a ChatGPT License

Featured Partners

Related Videos

39:11

39:11Agents for the Sake of Happiness

Thomas Dohmke

24:22

24:22Beyond Chatbots: How to build Agentic AI systems

Philipp Schmid

25:32

25:32Beyond Prompting: Building Scalable AI with Multi-Agent Systems and MCP

Viktoria Semaan

29:03

29:03On a Secret Mission: Developing AI Agents

Jörg Neumann

24:05

24:05The Limits of Prompting: ArchitectingTrustworthy Coding Agents

Nimrod Kor

26:47

26:47The State of GenAI & Machine Learning in 2025

Alejandro Saucedo

26:30

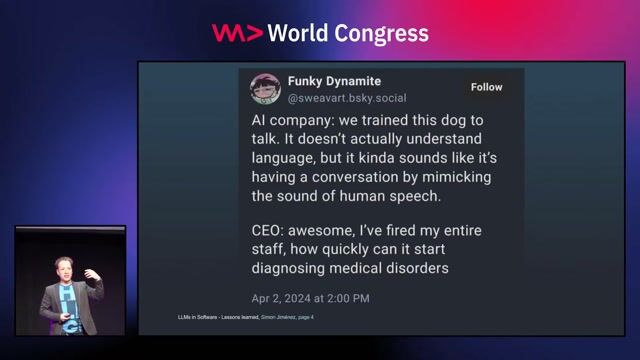

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

24:11

24:11You are not my model anymore - understanding LLM model behavior

Andreas Erben

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Profitero

Winnersh Civil Parish, United Kingdom

Senior

API

GIT

REST

Python

Continuous Integration

autonomous-teaming

München, Germany

Remote

API

React

Python

TypeScript

Tyne

Newcastle upon Tyne, United Kingdom

Senior

Python

Data analysis

Machine Learning

ailylabs

Barcelona, Spain

Intermediate

Azure

Python

Amazon Web Services (AWS)

T-Maxx International