Marek Suppa

Serverless deployment of (large) NLP models

#1 about 9 minutes

Exploring practical NLP applications at Slido

Several NLP-powered features are used to enhance user experience, including keyphrase extraction, sentiment analysis, and similar question detection.

#2 about 4 minutes

Choosing serverless for ML model deployment

Serverless was chosen for its ease of deployment and minimal maintenance, but it introduces challenges like cold starts and strict package size limits.

#3 about 8 minutes

Shrinking large BERT models for sentiment analysis

Knowledge distillation is used to train smaller, faster models like TinyBERT from a large, fine-tuned BERT base model without significant performance loss.
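To make the idea of distillation concrete, here is a minimal sketch of the classic soft-target loss, in which the student is trained to match the teacher's temperature-softened output distribution. This is an assumption for illustration: the summary does not state which loss was used, and TinyBERT-style training typically also matches intermediate layers, which this sketch leaves out. The function names and the default temperature are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

The loss is zero when the student reproduces the teacher's logits exactly and grows as the two distributions diverge. In practice it is usually combined with the ordinary cross-entropy loss on the gold labels.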

#4 about 8 minutes

Building an efficient similar question detection model

Sentence-BERT (SBERT) provides an efficient alternative to standard BERT for semantic similarity, and knowledge distillation helps create smaller, deployable versions.
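SBERT is efficient here because each question is embedded once and pairs are then compared with a cheap vector operation, instead of running a full BERT pass per pair. The sketch below shows only that comparison step, using cosine similarity. The three-dimensional vectors are made-up placeholders for real sentence embeddings, and `most_similar`, `EXISTING`, and the 0.8 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query_vec, stored, threshold=0.8):
    """Return (question, score) for the best match at or above threshold, else None."""
    best = None
    for text, vec in stored.items():
        score = cosine_similarity(query_vec, vec)
        if score >= threshold and (best is None or score > best[1]):
            best = (text, score)
    return best


# Toy embeddings (assumption): a real system would store vectors produced
# by a sentence-embedding model when each question is first submitted.
EXISTING = {
    "When does the talk start?": [0.9, 0.1, 0.0],
    "Is lunch provided?": [0.0, 0.2, 0.9],
}
```

With these placeholder vectors, a query embedding such as `[0.8, 0.2, 0.1]` matches "When does the talk start?", while a vector pointing in an unrelated direction returns `None`.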

#5 about 3 minutes

Using ONNX Runtime for lightweight model inference

The large PyTorch library is replaced with the much smaller ONNX Runtime to fit the model and its dependencies within AWS Lambda's package size limits.
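The size argument can be sketched as a simple budget check against Lambda's 250 MB unzipped limit for zip-based deployment packages. The component sizes below are illustrative placeholders, not measurements from the talk, and `fits_in_lambda` is a hypothetical helper.

```python
# AWS Lambda limit for zip-based deployment packages (unzipped, incl. layers).
LAMBDA_UNZIPPED_LIMIT_MB = 250


def fits_in_lambda(component_sizes_mb, limit_mb=LAMBDA_UNZIPPED_LIMIT_MB):
    """Return (fits, total_mb) for a mapping of component name -> size in MB."""
    total = sum(component_sizes_mb.values())
    return total <= limit_mb, total


# Illustrative sizes only (assumption): real numbers depend on library
# versions, platform wheels, and the distilled model itself.
WITH_PYTORCH = {"pytorch": 700, "model": 60, "handler and tokenizer": 20}
WITH_ONNX_RUNTIME = {"onnxruntime": 30, "model": 60, "handler and tokenizer": 20}
```

Under these placeholder sizes the PyTorch bundle exceeds the limit several times over, while the ONNX Runtime bundle fits with room to spare, which is the shape of the trade-off the talk describes.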

#6 about 3 minutes

Analyzing serverless ML performance and cost-effectiveness

Increasing allocated RAM for a Lambda function improves inference speed, potentially making serverless more cost-effective than a dedicated server for uneven workloads.
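The cost trade-off can be approximated with a small calculation: Lambda bills compute as duration times allocated memory (GB-seconds) plus a per-request fee, while a dedicated server costs a flat amount regardless of load. The sketch below is a rough model under stated assumptions. The default prices are placeholders in the ballpark of public list prices and should be checked against current pricing; the function names are hypothetical.

```python
def lambda_monthly_cost(
    invocations,
    avg_duration_s,
    memory_gb,
    price_per_gb_second=0.0000166667,  # assumption: placeholder list price
    price_per_request=0.0000002,       # assumption: $0.20 per million requests
):
    """Estimated monthly Lambda bill, ignoring any free tier."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations * price_per_request
    return compute + requests


def break_even_invocations(
    server_monthly_cost,
    avg_duration_s,
    memory_gb,
    price_per_gb_second=0.0000166667,
    price_per_request=0.0000002,
):
    """Monthly invocation count at which Lambda costs as much as the server."""
    per_call = avg_duration_s * memory_gb * price_per_gb_second + price_per_request
    return server_monthly_cost / per_call
```

Because more RAM raises the per-second price but shortens `avg_duration_s`, the two effects can partly cancel. Comparing `lambda_monthly_cost` for a few measured (memory, duration) pairs shows which configuration is cheapest, and `break_even_invocations` indicates the traffic level below which serverless beats a fixed-price server.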

#7 about 3 minutes

Key takeaways for deploying NLP models serverlessly

Successful serverless deployment of large NLP models requires aggressive model size reduction, lightweight inference libraries, and an understanding of the platform's limitations.

Related Videos

34:21

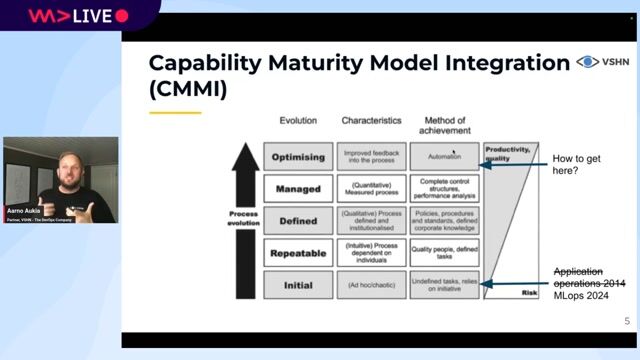

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia

39:58

39:58Leverage Cloud Computing Benefits with Serverless Multi-Cloud ML

Linda Mohamed

52:37

52:37Multilingual NLP pipeline up and running from scratch

Kateryna Hrytsaienko

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

47:19

47:19The state of MLOps - machine learning in production at enterprise scale

Bas Geerdink

41:45

41:45From Traction to Production: Maturing your LLMOps step by step

Maxim Salnikov

47:28

47:28What do language models really learn

Tanmay Bakshi

46:31

46:31Optimizing your AI/ML workloads for sustainability

Sohan Maheshwar
