Tanmay Bakshi

What do language models really learn

#1 · about 7 minutes

The fundamental challenge of modeling natural language

Language models aim to create intuitive human-computer interfaces, but this is difficult because language syntax doesn't fully capture semantic meaning.

#2 · about 3 minutes

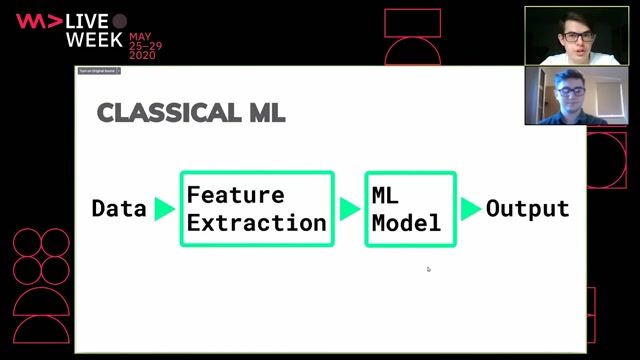

How deep learning models learn by transforming data

Deep learning works by applying a series of transformations to the input data, warping its vector space until the classes become linearly separable.
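To make the idea concrete, here is a minimal sketch using the classic XOR problem, which no straight line can separate in its raw 2-D form. The feature map `transform` is hand-picked and stands in for what a trained layer would learn. The names `transform`, `identity`, `linear_classify`, and `find_separator`, and the search grid, are illustrative assumptions, not code from the talk.

```python
import itertools

# XOR: the label is 1 when exactly one input is 1.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

# Coarse grid of candidate weight and bias values to search over.
GRID = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]


def transform(x1, x2):
    """Hand-picked nonlinear feature map, standing in for a learned layer."""
    return (x1, x2, x1 * x2)


def identity(x1, x2):
    """The raw 2-D input space, with no transformation."""
    return (x1, x2)


def linear_classify(features, weights, bias):
    """Predict 1 if the point lies on the positive side of the hyperplane."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def find_separator(data, feature_map, grid):
    """Brute-force search for (weights, bias) that classify every point correctly."""
    dim = len(feature_map(*data[0][0]))
    for params in itertools.product(grid, repeat=dim + 1):
        weights, bias = params[:dim], params[dim]
        if all(linear_classify(feature_map(*x), weights, bias) == y for x, y in data):
            return weights, bias
    return None


print(find_separator(XOR_DATA, identity, GRID))   # None: no line works in the raw space
print(find_separator(XOR_DATA, transform, GRID))  # a plane works after the transform
```

Searching the same grid finds no separator in the raw space but does find one after the transformation. That is the effect described above: the nonlinearity reshapes the space so that a simple linear boundary suffices.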

#3 · about 3 minutes

Why the training objective is key to model behavior

The training objective, or incentive, dictates exactly what a model learns and can lead to unintended outcomes if not designed carefully.

#4 · about 8 minutes

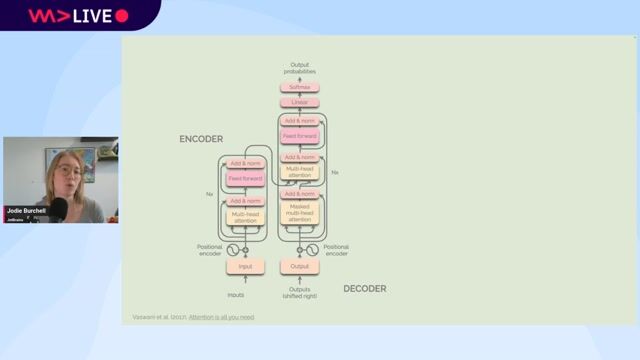

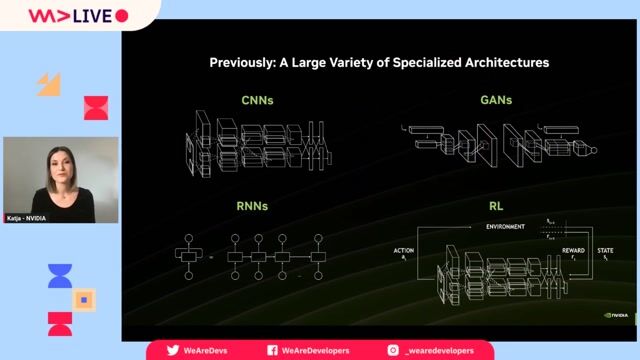

From Word2Vec and LSTMs to modern transformers

The move from non-contextual word embeddings such as Word2Vec, and from slow, sequential models such as LSTMs, to the parallel and deeply contextual transformer architecture solved major NLP challenges.
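The contrast between sequential and parallel processing can be sketched in a few lines of plain Python. This is a simplified illustration, not an LSTM or a full transformer. `recurrent_pass` is a toy left-to-right recurrence, and `self_attention` is single-head scaled dot-product attention in which the learned query, key, and value projections are replaced by the identity (an assumption made to keep the example short).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def recurrent_pass(vectors, decay=0.5):
    """Toy left-to-right recurrence: each state depends on the previous one,
    so the steps must run in order, and state i never sees tokens after i."""
    state = [0.0] * len(vectors[0])
    states = []
    for x in vectors:
        state = [math.tanh(decay * s + xi) for s, xi in zip(state, x)]
        states.append(state)
    return states


def self_attention(vectors):
    """Single-head scaled dot-product self-attention with identity Q/K/V
    projections. Each output is a weighted mix of every position's vector."""
    d = len(vectors[0])
    outputs = []
    for query in vectors:  # iterations are independent, so they could run in parallel
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edited = [[1.0, 0.0], [0.0, 1.0], [-1.0, 2.0]]  # only the last token differs

print(self_attention(tokens)[0] == self_attention(edited)[0])  # False: first output changes
print(recurrent_pass(tokens)[0] == recurrent_pass(edited)[0])  # True: first state does not
```

Each iteration of the loop in `self_attention` reads only the input vectors, so all positions could be computed at once, and editing the last token changes the first token's output. In `recurrent_pass`, each state needs the previous one, and the first state never sees later tokens.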

#5 · about 7 minutes

A practical demo of a character-level BERT model

A scaled-down, character-level transformer model demonstrates the 'fill in the blank' pre-training task by predicting masked characters in artist names.
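A minimal sketch of how training examples for such a character-level "fill in the blank" task might be prepared. The mask symbol `_`, the masking probability, the hypothetical `guess_a` baseline, and the artist name in the usage lines are assumptions for illustration. BERT computes its masked-language-model loss only at the masked positions, which `masked_accuracy` mimics; unlike BERT, this sketch always substitutes the mask symbol rather than sometimes keeping or randomizing the selected character.

```python
import random

MASK = "_"


def mask_characters(text, mask_prob, rng):
    """Hide a random subset of non-space characters behind MASK.

    Returns the masked string and a dict mapping each masked position to the
    original character (the prediction targets)."""
    masked, targets = [], {}
    for i, ch in enumerate(text):
        if ch != " " and rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = ch
        else:
            masked.append(ch)
    return "".join(masked), targets


def masked_accuracy(predict, masked_text, targets):
    """Score a predictor only at the masked positions; unmasked characters are ignored."""
    if not targets:
        return 0.0
    correct = sum(predict(masked_text, i) == ch for i, ch in targets.items())
    return correct / len(targets)


def guess_a(masked_text, position):
    """Hypothetical trivial baseline that always predicts 'a'."""
    return "a"


masked, targets = mask_characters("Daft Punk", mask_prob=0.3, rng=random.Random(0))
print(masked, targets)
print(masked_accuracy(guess_a, masked, targets))
```

A real model would replace `guess_a` with a transformer that reads the whole masked string, so it can use characters on both sides of each blank.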

#6 · about 2 minutes

What language models implicitly learn about language structure

By analyzing a model's internal weights, we can see it learns phonetic relationships and syntactic structures without ever being explicitly trained on them.
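One common way to inspect what a model has learned is to compare rows of its embedding matrix using cosine similarity. The sketch below assumes such a lookup table is available. The vectors in `TOY_EMBEDDINGS` are invented by hand so that vowels cluster together and "b" sits next to "p"; they are not weights from the talk's model, and the talk may well have used a different analysis.

```python
import math

# HYPOTHETICAL character embeddings, invented by hand for illustration only.
TOY_EMBEDDINGS = {
    "a": [0.9, 0.1, 0.0],
    "e": [0.8, 0.2, 0.1],
    "b": [0.1, 0.9, 0.0],
    "p": [0.0, 0.8, 0.2],
    "s": [0.1, 0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(embeddings, char, k=1):
    """Return the k characters whose vectors are most similar to `char`'s."""
    query = embeddings[char]
    scored = [(other, cosine(query, vec)) for other, vec in embeddings.items() if other != char]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:k]]


print(nearest(TOY_EMBEDDINGS, "b"))  # ['p']
print(nearest(TOY_EMBEDDINGS, "a"))  # ['e']
```

With trained weights, the interesting finding is when such neighbourhoods appear even though no phonetic labels were ever provided.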

#7 · about 7 minutes

Why current generative models don't truly 'write'

Generative models like GPT are excellent at predicting the next word from statistical patterns, but they lack the underlying thought process that genuinely creative writing requires.
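A bigram counter is about the simplest possible next-word predictor, and it shows the purely statistical mechanism in miniature. It is a drastic simplification: GPT-style models run a transformer over subword tokens and produce a learned probability distribution over the next token, whereas this sketch greedily picks the most frequent follower in a tiny made-up corpus. The corpus and function names are illustrative assumptions.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real models train on vastly more text.
CORPUS = [
    "language models predict the next word",
    "language models learn statistical patterns",
    "models predict the next token",
]


def train_bigrams(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Greedy prediction: the most frequent follower, or None if none was seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


def generate(counts, start, max_words=8):
    """Repeatedly append the predicted next word until none exists or the limit is hit."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


counts = train_bigrams(CORPUS)
print(generate(counts, "language"))
```

Nothing in the loop plans what the sentence should say; each word is chosen only because it often followed the previous one.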

#8 · about 4 minutes

Exploring the future with Blank Language Models

Blank Language Models (BLM) offer a new training approach by filling in text in any order, forcing the model to consider both past and future context.
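The canvas mechanics behind Blank Language Models can be sketched without the neural network. In the BLM formulation, each step picks a blank, fills it with a word, and decides whether to open a new blank to the word's left and/or right; generation stops when no blanks remain. Here the model's decisions are replaced by a hand-written script of actions, and `fill_blank`, `is_complete`, and the `__` blank token are illustrative assumptions.

```python
BLANK = "__"


def fill_blank(canvas, position, word, blank_left=False, blank_right=False):
    """Fill the blank at `position` with `word`, optionally opening new blanks
    immediately to its left and/or right. Returns a new canvas list."""
    if canvas[position] != BLANK:
        raise ValueError(f"position {position} is not a blank")
    replacement = ([BLANK] if blank_left else []) + [word] + ([BLANK] if blank_right else [])
    return canvas[:position] + replacement + canvas[position + 1:]


def is_complete(canvas):
    """Generation ends once no blanks remain."""
    return BLANK not in canvas


# A hand-written action script standing in for the model's choices.
# Words are placed out of left-to-right order.
canvas = [BLANK]
canvas = fill_blank(canvas, 0, "models", blank_left=True, blank_right=True)
canvas = fill_blank(canvas, 2, "learn", blank_right=True)
canvas = fill_blank(canvas, 0, "language")
canvas = fill_blank(canvas, 3, "patterns")
print(canvas, is_complete(canvas))
```

Because the starting canvas can also be a partial sentence with blanks in the middle, the same operation covers text infilling, where the model must respect words on both sides of each blank.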

#9 · about 3 minutes

The need for better tooling to accelerate ML research

The complexity of implementing novel architectures like BLMs highlights the need for better infrastructure and for compiled languages, such as Swift via the Swift for TensorFlow project, to speed up innovation.

Related jobs

Jobs that call for the skills explored in this talk.

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Matching moments

07:44 MIN

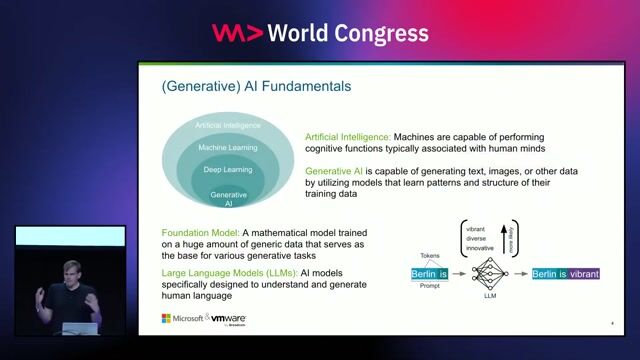

Defining key GenAI concepts like GPT and LLMs

Enter the Brave New World of GenAI with Vector Search

01:24 MIN

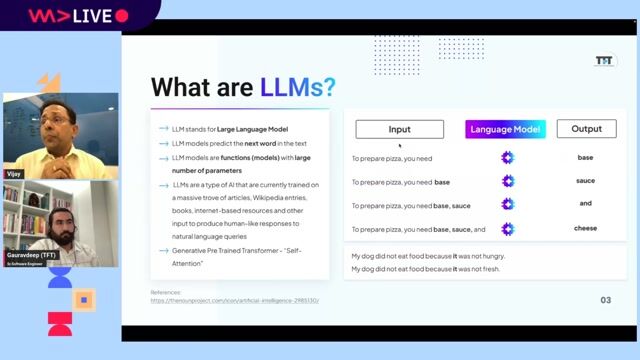

Understanding the fundamentals of large language models

Building Blocks of RAG: From Understanding to Implementation

02:26 MIN

Understanding the core capabilities of large language models

Data Privacy in LLMs: Challenges and Best Practices

04:05 MIN

Understanding the basics of large language models

Bringing the power of AI to your application.

02:00 MIN

Understanding the fundamentals of generative AI for developers

Java Meets AI: Empowering Spring Developers to Build Intelligent Apps

03:01 MIN

The evolution of NLP from early models to modern LLMs

Harry Potter and the Elastic Semantic Search

03:30 MIN

Using large language models for voice-driven development

Speak, Code, Deploy: Transforming Developer Experience with Voice Commands

03:42 MIN

Using large language models as a learning tool

Google Gemini: Open Source and Deep Thinking Models - Sam Witteveen

Featured Partners

Related Videos

58:00

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey

35:16

35:16How AI Models Get Smarter

Ankit Patel

29:40

29:40Lies, Damned Lies and Large Language Models

Jodie Burchell

23:50

23:50Data Privacy in LLMs: Challenges and Best Practices

Aditi Godbole

56:55

56:55Multimodal Generative AI Demystified

Ekaterina Sirazitdinova

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

32:54

32:54The pitfalls of Deep Learning - When Neural Networks are not the solution

Adrian Spataru & Bohdan Andrusyak

52:37

52:37Multilingual NLP pipeline up and running from scratch

Kateryna Hrytsaienko

Related Articles

View all articles.png?w=240&auto=compress,format)

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Xablu

Hengelo, Netherlands

Intermediate

.NET

Python

PyTorch

Blockchain

TensorFlow

+3

Apple Inc.

Cambridge, United Kingdom

C++

Java

Bash

Perl

Python

+4

Hotel Res Bot UG UG (haftungsbeschränkt)

Köln, Germany

Remote

€50-85K

API

Azure

Python

+5

Apple

Zürich, Switzerland

Python

PyTorch

TensorFlow

Machine Learning

Natural Language Processing

Luminance Technologies

Cambridge, United Kingdom

Python

PyTorch

TensorFlow

Computer Vision

Machine Learning

+1

Paris-based

Paris, France

Python

Docker

TensorFlow

Kubernetes

Computer Vision

+2

Language Services Ltd

Glasgow, United Kingdom

Remote

£75-100K

Senior

Machine Learning

Microsoft Dynamics