Patrick Koss

Unveiling the Magic: Scaling Large Language Models to Serve Millions

#1 about 3 minutes

Understanding the benefits of self-hosting large language models

Self-hosting LLMs gives you greater control over data privacy, compliance, and cost, and reduces vendor lock-in compared with third-party services.

#2 about 4 minutes

Architectural overview for a scalable LLM serving platform

A scalable LLM service requires key components for model acquisition, inference, storage, billing, security, and request routing.

#3 about 7 minutes

Choosing an inference engine and model storage strategy

Network file storage (for example, NFS) is crucial for reducing startup times and enabling fast horizontal scaling when deploying new model instances.

#4 about 5 minutes

Building an efficient token-based billing system

Aggregate token usage with tools like Redis before sending data to a payment provider, so that you stay within the provider's rate limits and improve system efficiency.
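The aggregation idea can be sketched as follows. This is a minimal illustration, not the speaker's implementation: the `UsageAggregator` class and the `report` callback are hypothetical names, and an in-memory dictionary stands in for Redis (where a counter per customer could be incremented with a command such as `HINCRBY`).

```python
from collections import defaultdict
from typing import Callable, Dict


class UsageAggregator:
    """Accumulates token counts per customer and reports them in batches."""

    def __init__(self, report: Callable[[str, int], None]) -> None:
        # `report` is a hypothetical callback that would call the payment provider.
        self._report = report
        # In-memory stand-in for a Redis hash of per-customer counters.
        self._pending: Dict[str, int] = defaultdict(int)

    def record(self, customer_id: str, tokens: int) -> None:
        """Add the tokens used by one request to the customer's running total."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        self._pending[customer_id] += tokens

    def flush(self) -> int:
        """Send one aggregated record per customer; return how many were sent."""
        # Swap in a fresh counter so new requests are not lost during reporting.
        batch, self._pending = self._pending, defaultdict(int)
        sent = 0
        for customer_id, total in batch.items():
            if total > 0:
                self._report(customer_id, total)
                sent += 1
        return sent
```

Calling `flush()` on a timer (for example, once a minute) turns many per-request updates into one call per customer per interval. With a real Redis backend, reading and resetting the counters would need to happen atomically.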

#5 about 3 minutes

Implementing robust rate limiting for shared LLM systems

Prevent system abuse by implementing both request-based and token-based rate limiting, using estimations for output tokens to protect shared resources.
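A combined request-based and token-based limiter might look like the sketch below. It makes several assumptions that are not from the talk: a fixed time window per API key, the hypothetical names `RateLimiter` and `allow`, and the use of the request's maximum output length as the estimate for output tokens.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class RateLimiter:
    """Fixed-window limiter that caps both requests and estimated tokens."""

    max_requests: int
    max_tokens: int
    window_seconds: float = 60.0
    clock: Callable[[], float] = time.monotonic
    # key -> (window start, requests so far, estimated tokens so far)
    _windows: Dict[str, Tuple[float, int, int]] = field(
        default_factory=dict, init=False
    )

    def allow(self, key: str, prompt_tokens: int, max_output_tokens: int) -> bool:
        now = self.clock()
        start, requests, tokens = self._windows.get(key, (now, 0, 0))
        if now - start >= self.window_seconds:
            # The previous window has expired, so start a new one.
            start, requests, tokens = now, 0, 0
        # Output length is unknown up front; assume the worst case here.
        estimated = prompt_tokens + max_output_tokens
        over_requests = requests + 1 > self.max_requests
        over_tokens = tokens + estimated > self.max_tokens
        if over_requests or over_tokens:
            self._windows[key] = (start, requests, tokens)
            return False
        self._windows[key] = (start, requests + 1, tokens + estimated)
        return True
```

A rejected request is not counted against the budget in this sketch. A production system could also correct the estimate once the real output token count is known.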

#6 about 3 minutes

Selecting the right authentication and authorization strategy

Bearer tokens offer a flexible solution for managing authentication and fine-grained authorization, such as restricting access to specific models.
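Per-model authorization with bearer tokens could be sketched like this. The token values, model names, `TOKEN_MODELS` store, and `authorize` function are all hypothetical; a real service would keep token digests in a database and integrate with its web framework.

```python
import hashlib
from typing import Dict, FrozenSet, Optional


def token_digest(token: str) -> str:
    """Hash a token so the store never holds the raw secret."""
    return hashlib.sha256(token.encode("utf-8")).hexdigest()


# Hypothetical store mapping token digests to the models each token may call.
TOKEN_MODELS: Dict[str, FrozenSet[str]] = {
    token_digest("team-a-secret"): frozenset({"small-model"}),
    token_digest("team-b-secret"): frozenset({"small-model", "large-model"}),
}


def extract_bearer(header: Optional[str]) -> Optional[str]:
    """Return the token from an 'Authorization: Bearer <token>' header value."""
    if not header:
        return None
    scheme, _, token = header.partition(" ")
    token = token.strip()
    if scheme.lower() != "bearer" or not token:
        return None
    return token


def authorize(header: Optional[str], model: str) -> int:
    """Return an HTTP-style status: 200 allowed, 401 unauthenticated, 403 forbidden."""
    token = extract_bearer(header)
    if token is None:
        return 401
    allowed = TOKEN_MODELS.get(token_digest(token))
    if allowed is None:
        return 401
    return 200 if model in allowed else 403
```

Separating 401 (unknown or missing token) from 403 (valid token, model not permitted) keeps authentication and authorization failures distinguishable for clients.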

#7 about 2 minutes

Scaling inference with Kubernetes and smart routing

Use tools like KServe or Knative on Kubernetes for intelligent autoscaling and canary deployments based on custom metrics like queue size.
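To illustrate scaling on a queue-size metric, the sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric). It is an assumption that this is a useful mental model here; KServe and Knative have their own autoscaling logic, and the function name, parameters, and default bounds are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    queue_per_replica: float,
    target_queue_per_replica: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Compute a replica count from the average queue size per replica."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_queue_per_replica <= 0:
        raise ValueError("target_queue_per_replica must be positive")
    # HPA-style ratio: scale in proportion to how far the metric is from target.
    raw = math.ceil(current_replicas * queue_per_replica / target_queue_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

For example, two replicas that each hold 30 queued requests against a target of 10 per replica would be scaled to six replicas.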

#8 about 3 minutes

Summary of best practices for scalable LLM deployment

Key strategies for success include robust rate limiting, modular design, continuous benchmarking, and using canary deployments for safe production testing.

Related Videos

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá, Cedric Clyburn

35:16

35:16How AI Models Get Smarter

Ankit Patel

26:30

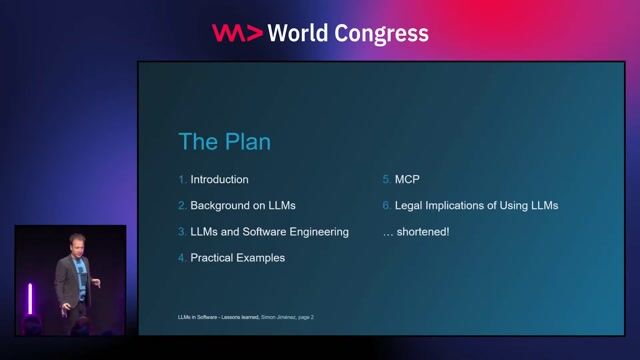

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

31:12

31:12Using LLMs in your Product

Daniel Töws

27:11

27:11Inside the Mind of an LLM

Emanuele Fabbiani

42:26

42:26How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

Meta Atamel, Guillaume Laforge

29:52

29:52Your Next AI Needs 10,000 GPUs. Now What?

Anshul Jindal, Martin Piercy

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić
