Matthias Niehoff

Enjoying SQL data pipelines with dbt

#1 (about 1 minute)

The challenge of managing traditional SQL data pipelines

Traditional data pipelines often rely on unstructured Python glue code and notebooks, making them difficult to maintain and extend.

#2 (about 4 minutes)

Introducing dbt for structured SQL data transformations

dbt is a command-line tool that brings software engineering principles to the transformation step of ELT (extract, load, transform), allowing you to build data pipelines with just SQL, plus YAML configuration and Jinja templating.

#3 (about 1 minute)

Setting up a dbt project and defining data sources

A walkthrough of a dbt project structure shows how to define raw data sources and their associated tests using a `sources.yml` file.
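To make the source-and-test idea concrete, here is a small Python sketch, not dbt itself. It assumes a hypothetical source named `raw` with an `orders` table whose columns carry the built-in `unique` and `not_null` tests; in a real project these are declared in YAML, and models reference the table with `{{ source('raw', 'orders') }}`. The sketch resolves that reference to a schema-qualified name and compiles each column test into a query that selects failing rows. This mirrors how dbt expresses tests, although dbt's generated SQL and test names differ.

```python
# Hypothetical Python mirror of a sources.yml entry; all names are illustrative.
SOURCES = {
    "raw": {
        "schema": "raw_data",
        "tables": {
            "orders": {"columns": {"order_id": ["unique", "not_null"], "customer_id": ["not_null"]}},
        },
    },
}


def source(source_name: str, table_name: str) -> str:
    """Resolve a source reference to a schema-qualified table name (simplified)."""
    tables = SOURCES[source_name]["tables"]
    if table_name not in tables:
        raise KeyError(f"unknown source table {source_name}.{table_name}")
    return f'{SOURCES[source_name]["schema"]}.{table_name}'


def compile_source_tests(source_name: str) -> list[tuple[str, str]]:
    """Turn declared column tests into SQL queries that select *failing* rows."""
    compiled = []
    for table, spec in SOURCES[source_name]["tables"].items():
        relation = source(source_name, table)
        for column, checks in spec["columns"].items():
            for check in checks:
                if check == "not_null":
                    sql = f"select * from {relation} where {column} is null"
                elif check == "unique":
                    sql = (f"select {column}, count(*) as n from {relation} "
                           f"where {column} is not null group by {column} having count(*) > 1")
                else:
                    raise ValueError(f"unsupported test: {check}")
                compiled.append((f"{check}_{source_name}_{table}_{column}", sql))
    return compiled


for name, sql in compile_source_tests("raw"):
    print(name, "->", sql)
```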

#4 (about 2 minutes)

Tracking data changes over time with dbt snapshots

The `dbt snapshot` command captures historical changes in your source data by building Type 2 slowly changing dimension tables.
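A snapshot keeps one row per version of a record, with validity columns marking when each version was current. The sketch below approximates a single run of dbt's `check` strategy in plain Python: rows are compared on the given `check_cols`, and a changed row closes the previous version by setting `dbt_valid_to` and opens a new one. Real snapshots run as SQL merges in the warehouse, also offer a `timestamp` strategy, and have configuration for handling hard deletes, none of which is modeled here; the `id`/`status` data is hypothetical.

```python
def snapshot(history: list[dict], source_rows: list[dict], key: str,
             check_cols: list[str], now: str) -> list[dict]:
    """One snapshot run with a simplified 'check' strategy (Type 2 SCD).

    history holds every version seen so far; the current version of a key has
    dbt_valid_to = None. Rows deleted from the source are ignored in this sketch.
    """
    # Index the currently valid version of each key (same dict objects as in history).
    current = {row[key]: row for row in history if row["dbt_valid_to"] is None}
    for row in source_rows:
        prev = current.get(row[key])
        if prev is not None and all(prev[c] == row[c] for c in check_cols):
            continue  # unchanged: keep the open version
        if prev is not None:
            prev["dbt_valid_to"] = now  # close the superseded version
        history.append({**row, "dbt_valid_from": now, "dbt_valid_to": None})
    return history


history: list[dict] = []
snapshot(history, [{"id": 1, "status": "new"}], "id", ["status"], "2024-01-01")
snapshot(history, [{"id": 1, "status": "shipped"}], "id", ["status"], "2024-01-02")
for version in history:
    print(version)
```

Running the same unchanged source data again adds no rows, which is what makes repeated snapshot runs safe to schedule.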

#5 (about 3 minutes)

Using seeds for static data and running models

Use `dbt seed` to load small, static CSV datasets such as country codes, and `dbt run` to execute SQL models, which can be modularized with Jinja macros.
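The following sqlite3-based sketch shows the three ideas together under simplifying assumptions: `seed()` loads a CSV of country codes into a table (storing every column as TEXT, whereas dbt infers column types), `cents_to_euros()` is a Python stand-in for a hypothetical Jinja macro that returns a reusable SQL fragment, and `run_model()` materializes a SELECT statement as a view, which is dbt's default materialization. All table and column names are illustrative.

```python
import csv
import io
import sqlite3

COUNTRY_CODES_CSV = """code,name
DE,Germany
FR,France
"""


def seed(conn: sqlite3.Connection, table: str, csv_text: str) -> None:
    """Load a small CSV into a table, roughly what `dbt seed` does (all columns TEXT here)."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{c}" TEXT' for c in header)
    conn.execute(f'DROP TABLE IF EXISTS "{table}"')
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)


def cents_to_euros(column: str) -> str:
    """Stand-in for a hypothetical macro: returns a SQL expression, not a value."""
    return f"round({column} / 100.0, 2)"


def run_model(conn: sqlite3.Connection, name: str, select_sql: str) -> None:
    """Materialize a model as a view, similar to dbt's default 'view' materialization."""
    conn.execute(f'DROP VIEW IF EXISTS "{name}"')
    conn.execute(f'CREATE VIEW "{name}" AS {select_sql}')


conn = sqlite3.connect(":memory:")
seed(conn, "country_codes", COUNTRY_CODES_CSV)
conn.execute("CREATE TABLE orders (id INTEGER, country TEXT, amount_cents INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "DE", 1999), (2, "FR", 500)])
run_model(conn, "orders_enriched", f"""
    select o.id, c.name as country_name, {cents_to_euros('o.amount_cents')} as amount_eur
    from orders o join country_codes c on o.country = c.code
""")
print(conn.execute("select * from orders_enriched order by id").fetchall())
```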

#6 (about 3 minutes)

Generating documentation and visualizing data lineage

The `dbt docs generate` command builds a web-based documentation site from your project's metadata, including an interactive lineage graph of the whole project, and `dbt docs serve` hosts it locally.
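The lineage graph follows from the `{{ ref('...') }}` calls inside each model: every ref is an edge from an upstream model, and the same graph determines the order in which `dbt run` builds models. A minimal Python sketch, assuming four hypothetical models and handling only the single-argument form of `ref()`, extracts those edges with a regular expression, orders the models with `graphlib.TopologicalSorter`, and walks the graph to list everything upstream of a model.

```python
import re
from graphlib import TopologicalSorter

# Matches {{ ref('model_name') }} with optional whitespace; single-argument form only.
REF_PATTERN = re.compile(r"""\{\{\s*ref\(\s*['"]([^'"]+)['"]\s*\)\s*\}\}""")

# Hypothetical models: name -> SQL with Jinja ref()/source() calls left unrendered.
MODELS = {
    "stg_orders": "select * from {{ source('raw', 'orders') }}",
    "stg_customers": "select * from {{ source('raw', 'customers') }}",
    "orders_by_customer": (
        "select c.customer_id, count(*) as n_orders "
        "from {{ ref('stg_orders') }} o join {{ ref('stg_customers') }} c "
        "on o.customer_id = c.customer_id group by c.customer_id"
    ),
    "customer_report": "select * from {{ ref('orders_by_customer') }}",
}


def lineage(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model to the set of models it refs (its direct parents)."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}


def upstream(graph: dict[str, set[str]], model: str) -> set[str]:
    """All direct and indirect parents of a model (depth-first walk)."""
    seen: set[str] = set()
    stack = [model]
    while stack:
        for parent in graph.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


graph = lineage(MODELS)
build_order = list(TopologicalSorter(graph).static_order())  # parents come first
print(build_order)
print(upstream(graph, "customer_report"))
```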

#7 (about 1 minute)

Implementing and running data quality tests

The `dbt test` command runs built-in (generic) and custom (singular) SQL-based tests to check data integrity and quality throughout your pipeline.
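dbt data tests are SELECT queries that return the rows violating an expectation, so a test passes when the query returns nothing. Here is a minimal sqlite3 sketch of that convention using a hypothetical `orders` table: two queries approximate the built-in `not_null` and `unique` generic tests, and a third plays the role of a custom (singular) test for an assumed business rule.

```python
import sqlite3


def run_data_test(conn: sqlite3.Connection, sql: str) -> tuple[bool, list]:
    """dbt-style data test: the query selects failing rows, so zero rows means PASS."""
    failures = conn.execute(sql).fetchall()
    return len(failures) == 0, failures


conn = sqlite3.connect(":memory:")
conn.execute("create table orders (order_id integer, amount real)")
conn.executemany("insert into orders values (?, ?)", [(1, 10.0), (2, -5.0), (None, 3.0)])

tests = {
    # Generic-style tests (approximations of dbt's built-in not_null and unique).
    "not_null_orders_order_id": "select * from orders where order_id is null",
    "unique_orders_order_id": ("select order_id from orders where order_id is not null "
                               "group by order_id having count(*) > 1"),
    # Custom (singular) test encoding a hypothetical business rule.
    "assert_no_negative_amounts": "select * from orders where amount < 0",
}

results = {name: run_data_test(conn, sql) for name, sql in tests.items()}
for name, (passed, failures) in results.items():
    print(f"{'PASS' if passed else 'FAIL'} {name}: {len(failures)} failing row(s)")
```

Returning the failing rows rather than a bare boolean is the useful part of the convention: the same query that fails the test also tells you which records to inspect.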

#8 (about 4 minutes)

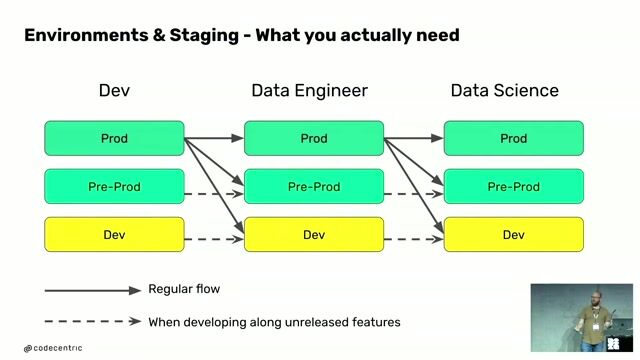

Applying software engineering practices to data pipelines

dbt fits into standard developer workflows: pre-commit hooks for linting, CI/CD for automated testing, and profiles for managing environments such as development and production.

#9 (about 4 minutes)

Exploring the dbt ecosystem and key integrations

The dbt ecosystem includes packages for extended testing, orchestration tools like Airflow, visualization layers like Lightdash, and integrations with analytical databases like DuckDB.

#10 (about 1 minute)

Addressing the extraction and loading phases of ELT

While dbt focuses on transformation, tools like Airbyte, Fivetran, or custom scripts are used to handle the initial extraction and loading of data into the warehouse.
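When no connector fits, a custom extract-and-load script can stay small. The sketch below assumes records already fetched from a hypothetical orders API and lands each one unchanged as JSON text in a raw sqlite3 table, together with the source name and load time. Parsing and cleaning are deliberately left to dbt models downstream, in line with loading raw data as-is; the table and column names are illustrative.

```python
import json
import sqlite3
from datetime import datetime, timezone


def load_raw(conn: sqlite3.Connection, table: str, records: list[dict], source: str) -> int:
    """Land records unchanged as JSON text plus load metadata; no transformation here."""
    conn.execute(f'create table if not exists "{table}" (payload text, _source text, _loaded_at text)')
    loaded_at = datetime.now(timezone.utc).isoformat()
    conn.executemany(
        f'insert into "{table}" values (?, ?, ?)',
        [(json.dumps(record), source, loaded_at) for record in records],
    )
    conn.commit()
    return len(records)


# Records as they might arrive from a hypothetical orders API (nested fields kept as-is).
api_records = [
    {"id": 1, "status": "new", "items": [{"sku": "A1", "qty": 2}]},
    {"id": 2, "status": "shipped", "items": []},
]

conn = sqlite3.connect(":memory:")
print(load_raw(conn, "raw_orders", api_records, source="orders_api"))
```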

#11 (about 2 minutes)

Understanding dbt's core benefits and limitations

dbt excels at simplifying data transformation with code-based practices but is not a tool for data ingestion, a full data catalog, or a no-code solution.

#12 (about 1 minute)

Q&A: Raw data formats and comparing dbt to Spark

The audience Q&A explains the strategy of loading raw data as-is and positions dbt as a simpler, SQL-focused alternative to complex systems like Apache Spark.

Related jobs

Jobs that call for the skills explored in this talk.

envelio

Köln, Germany

Remote

Senior

Python

Software Architecture

Matching moments

05:00 MIN

Using the Modern Data Stack and DBT for transformations

Modern Data Architectures need Software Engineering

03:02 MIN

A DBA's journey to running SQL Server on Kubernetes

Adjusting Pod Eviction Timings in Kubernetes

02:12 MIN

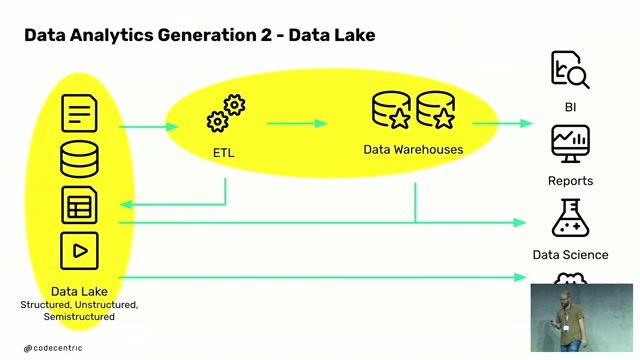

Understanding the modern cloud data platform

Modern Data Architectures need Software Engineering

01:55 MIN

Merging data engineering and DevOps for scalability

Software Engineering Social Connection: Yubo’s lean approach to scaling an 80M-user infrastructure

02:41 MIN

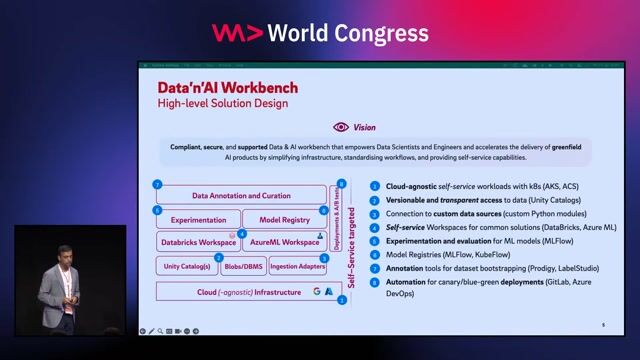

Building a self-service data and AI workbench

Beyond GPT: Building Unified GenAI Platforms for the Enterprise of Tomorrow

05:05 MIN

Using DataWorks as a unified IDE for big data

Alibaba Big Data and Machine Learning Technology

03:59 MIN

Modern data architectures and the reality of team size

Modern Data Architectures need Software Engineering

05:13 MIN

Live demo of data analysis with DataWorks

Alibaba Big Data and Machine Learning Technology

Featured Partners

Related Videos

30:34

30:34How building an industry DBMS differs from building a research one

Markus Dreseler

29:25

29:25Modern Data Architectures need Software Engineering

Matthias Niehoff

43:47

43:47Industrializing your Data Science capabilities

Dubravko Dolic & Hüdaverdi Cakir

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

24:22

24:22Database Magic behind 40 Million operations/s

Jürgen Pilz

39:35

39:35Automated MS SQL Server database deployments with dacpacs and Azure DevOps

Sebastian Wolff

39:15

39:15Database DevOps with Containers

Rob Richardson

48:10

48:10Data Science on Software Data

Markus Harrer

Related Articles

View all articles

.gif?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Devoteam

Canton de Levallois-Perret, France

Senior

Python

Deutsche Wohnen AG

Berlin, Germany

Remote

Azure

T-SQL

Python

Data Lake

+4

La Collective

Canton de Nantes-1, France

Remote

Intermediate

GIT

Python

Data analysis

Continuous Integration

Smart Future Campus GmbH

Bernau bei Berlin, Germany

ETL

JSON

Azure

NoSQL

Data analysis

Smart Future Campus GmbH

Berlin, Germany

ETL

JSON

Azure

NoSQL

Data analysis

Smart Future Campus GmbH

Dresden, Germany

ETL

JSON

Azure

NoSQL

Scrum

+1

Smart Future Campus GmbH

Darmstadt, Germany

Remote

ETL

JSON

Azure

NoSQL

+2

Smart Future Campus GmbH

Sankt Augustin, Germany

ETL

JSON

Azure

NoSQL

Data analysis

Smart Future Campus GmbH

Dülmen, Germany

ETL

JSON

Azure

NoSQL

Data analysis