Thomas Schmidt

AI Factories at Scale

#1 (about 2 minutes)

The history and origins of the AI company Amber

Amber's journey began in 2006 as Fluid Dyna, an early Nvidia partner for GPU-accelerated code, before being acquired by Altair and later re-established as an independent AI infrastructure company.

#2 (about 3 minutes)

Key milestones in the evolution of AI and GPU computing

The releases of CUDA (2007), AlexNet (2012), and the Transformer architecture (2017) drove an exponential increase in compute demand, culminating in the public adoption of AI with ChatGPT (2022).

#3 (about 2 minutes)

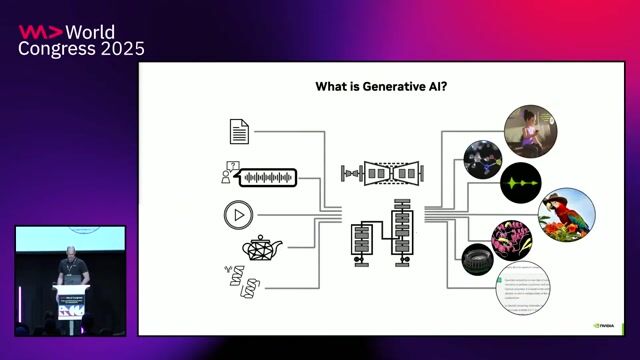

Understanding the business impact and adoption of generative AI

Generative AI presents a massive business opportunity with a high return on investment, driving rapid adoption across major enterprises.

#4 (about 1 minute)

Comparing supercomputer hardware from the past decade

A modern Nvidia DGX H100 system vastly outperforms a state-of-the-art supercomputer from a decade ago while consuming only a fraction of the power and space.

#5 (about 2 minutes)

Why modern GPUs are more energy efficient than CPUs

Replacing legacy CPU-based systems with modern GPUs can reduce energy consumption by up to 98%, and newer GPU generations like Blackwell offer a 4x power reduction over previous models for the same task.
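
The two efficiency figures above are stated differently: one as a percentage cut, the other as an N-fold reduction. A quick calculation shows how they relate. This is a minimal sketch assuming a hypothetical baseline of 1,000,000 kWh (not a number from the talk). The two figures come from separate comparisons, so the sketch treats them independently rather than compounding them.

```python
# Illustrative arithmetic only: the baseline figure below is a
# hypothetical value, not a number from the talk.


def energy_after_percent_cut(baseline_kwh: float, cut: float) -> float:
    """Energy remaining after a fractional cut (cut=0.98 means 98% less)."""
    return baseline_kwh * (1.0 - cut)


def energy_after_fold_cut(baseline_kwh: float, factor: float) -> float:
    """Energy remaining after an N-fold cut (factor=4 means one quarter)."""
    return baseline_kwh / factor


baseline_kwh = 1_000_000.0  # hypothetical energy for a CPU-based workload

# A 98% cut leaves 2% of the energy, i.e. roughly a 50-fold reduction.
after_gpu = energy_after_percent_cut(baseline_kwh, 0.98)
print(round(after_gpu), round(baseline_kwh / after_gpu))  # 20000 50

# A 4x reduction leaves 25% of the energy, i.e. a 75% saving.
after_4x = energy_after_fold_cut(baseline_kwh, 4)
print(round(after_4x), 1 - after_4x / baseline_kwh)  # 250000 0.75
```

Put another way, "up to 98% less energy" is the larger of the two claims. It corresponds to roughly a 50x improvement, while a 4x generational gain equals a 75% saving.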

#6 (about 2 minutes)

The shift to production will cause an explosion in compute demand

As generative AI moves from experimentation to production, compute demand is expected to grow by a factor of at least 8 to 10, driven primarily by inference workloads.

#7 (about 3 minutes)

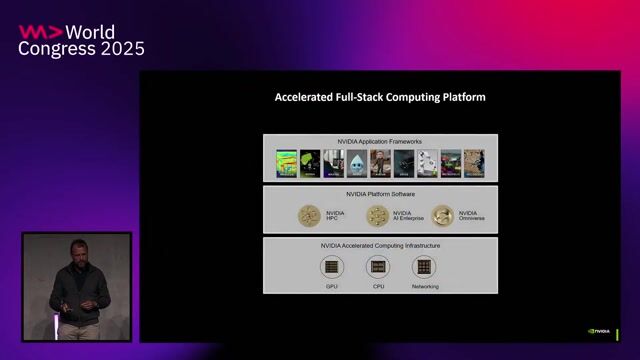

Building an AI factory with all the essential components

A successful AI factory requires more than just GPUs; it needs a holistic approach including specialized storage, high-speed networking, management software, and robust data center infrastructure.

#8 (about 5 minutes)

Key software considerations for managing an AI cluster

Effective AI cluster management requires software for optimizing the software stack, keeping node images synchronized, monitoring health and performance, integrating with the cloud, and producing chargeback reports.
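
Chargeback reporting is essentially accounting: attribute the GPU-hours each team consumed and price them. The following is a minimal sketch under stated assumptions. `JobRecord`, `chargeback`, the team names, and the flat rate per GPU-hour are all hypothetical. A real cluster manager would likely pull job data from its scheduler's accounting records rather than a hand-built list.

```python
from collections import defaultdict
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Hypothetical accounting record for one finished job."""
    team: str     # cost center that submitted the job
    gpus: int     # number of GPUs allocated to the job
    hours: float  # wall-clock runtime in hours


def chargeback(jobs: Iterable[JobRecord], rate_per_gpu_hour: float) -> dict[str, float]:
    """Sum GPU-hours per team and convert them to a charge at a flat rate."""
    gpu_hours: dict[str, float] = defaultdict(float)
    for job in jobs:
        gpu_hours[job.team] += job.gpus * job.hours
    return {team: hours * rate_per_gpu_hour for team, hours in gpu_hours.items()}


jobs = [
    JobRecord("nlp", 8, 10.0),    # 80 GPU-hours
    JobRecord("vision", 4, 5.0),  # 20 GPU-hours
    JobRecord("nlp", 16, 2.5),    # 40 GPU-hours
]
print(chargeback(jobs, rate_per_gpu_hour=2.0))  # {'nlp': 240.0, 'vision': 40.0}
```

Charging by allocated GPU-hours rather than measured utilization keeps the sketch simple. It also means idle but reserved GPUs are still billed to the team holding them.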

#9 (about 1 minute)

Why specialized high-performance storage is critical for AI

AI workloads demand specialized, high-performance storage to handle tasks like rapid LLM checkpointing and high I/O for inference, making legacy storage solutions inadequate.
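
Back-of-the-envelope arithmetic shows why checkpointing stresses storage. Checkpoint size is roughly the parameter count times the bytes stored per parameter, and write time is that size divided by sustained write bandwidth. Every input below is an illustrative assumption, not a figure from the talk:

- a 70-billion-parameter model;
- 14 bytes per parameter, assuming mixed-precision training with Adam (2-byte weights, a 4-byte FP32 master copy, and two 4-byte optimizer moments);
- the three bandwidth values in the loop.

```python
# Back-of-the-envelope checkpoint timing; all inputs are hypothetical.


def checkpoint_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate checkpoint size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def checkpoint_write_seconds(num_params: float, bytes_per_param: float,
                             write_gb_per_s: float) -> float:
    """Time to write one checkpoint at a sustained aggregate bandwidth."""
    return checkpoint_size_gb(num_params, bytes_per_param) / write_gb_per_s


params = 70e9         # hypothetical 70B-parameter model
bytes_per_param = 14  # assumed: bf16 weights + fp32 master copy + two fp32 Adam moments

print(checkpoint_size_gb(params, bytes_per_param))  # 980.0
for bandwidth in (2.0, 20.0, 200.0):  # hypothetical aggregate write speeds in GB/s
    seconds = checkpoint_write_seconds(params, bytes_per_param, bandwidth)
    print(f"{bandwidth:>6.0f} GB/s -> {seconds:6.1f} s per checkpoint")
```

With synchronous checkpointing, training stalls while the write completes. A tenfold difference in storage bandwidth therefore becomes a tenfold difference in that overhead, repeated at every checkpoint.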

#10 (about 3 minutes)

Future trends in AI models and data center cooling

The future of AI involves both small specialized models and large general models, driving a necessary evolution in data centers towards direct liquid and immersion cooling to manage heat.
