Michael Mueller

Minimising the Carbon Footprint of Workloads

#1 (about 2 minutes)

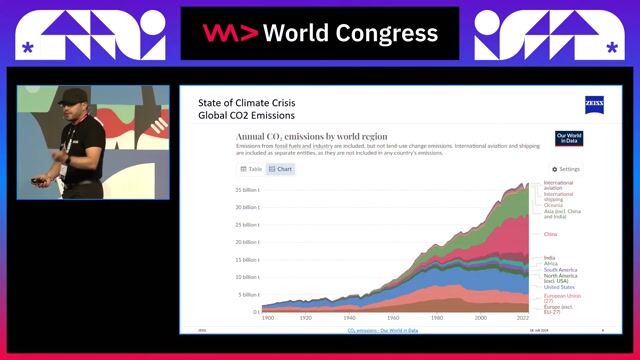

The growing carbon footprint of the IT industry

The IT sector contributes 4-5% of global carbon emissions. That share is larger than the aviation industry's and is projected to triple.

#2 (about 3 minutes)

How AI workloads accelerate energy consumption

Training large models such as Llama 3 consumes massive amounts of energy, and so does running inference for services such as those offered by OpenAI. Both drive up emissions at major tech companies.

#3 (about 3 minutes)

Emerging regulations for data center efficiency

Governments are beginning to regulate data center energy use and the strain it puts on the grid. Progress is held back by a general lack of awareness and by a lack of transparent data from providers.

#4 (about 5 minutes)

Key concepts for sustainable computing

Understanding server energy proportionality, Power Usage Effectiveness (PUE), and the embedded carbon from hardware manufacturing is foundational to reducing IT's environmental impact.
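
PUE is commonly defined as total facility energy divided by the energy delivered to IT equipment, so 1.0 is the theoretical ideal. A server is energy-proportional when its power draw scales with its load. Real servers still draw substantial power when idle. The sketch below illustrates both ideas. It assumes a simplified linear power model (idle draw plus a utilization-proportional part), and the wattages and energy figures are hypothetical rather than taken from the talk.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def server_power_w(utilization: float, idle_w: float, max_w: float) -> float:
    """Assumed linear power model: idle draw plus a share that grows with utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_w + (max_w - idle_w) * utilization


def power_per_unit_of_work(utilization: float, idle_w: float, max_w: float) -> float:
    """Watts per unit of utilization; large at low load because idle draw dominates."""
    if utilization <= 0:
        raise ValueError("utilization must be positive")
    return server_power_w(utilization, idle_w, max_w) / utilization


if __name__ == "__main__":
    # Hypothetical figures, not measurements from the talk
    print(f"PUE: {pue(1500, 1000):.2f}")  # 1.50
    for u in (0.1, 0.5, 0.8):
        print(f"{u:.0%} load: {power_per_unit_of_work(u, 100, 200):.0f} W per unit of work")
```

Under this model, a server at 10% load spends far more power per unit of useful work than one at 80%. This is why idle and underused machines are so costly.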

#5 (about 3 minutes)

Practical strategies to reduce workload emissions

Simple but effective measures like eliminating zombie servers, right-sizing instances, using auto-scaling, and adopting ARM CPUs can significantly lower carbon emissions and costs.
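
The first two measures can be automated as a simple screening pass over utilization data. The sketch below is a minimal version under stated assumptions. The `Instance` type, the 5% "zombie" threshold and the 60% target utilization are all hypothetical choices. CPU samples are fractions of the instance's total vCPU capacity, and a real assessment would also look at memory, network and ownership before shutting anything down.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

ZOMBIE_P95_THRESHOLD = 0.05  # assumption: p95 CPU below 5% suggests an idle "zombie"
TARGET_UTILIZATION = 0.6     # assumption: size instances to run at ~60% at p95


@dataclass
class Instance:
    name: str
    vcpus: int
    cpu_samples: list[float]  # fraction of total vCPU capacity, 0.0-1.0


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def assess(inst: Instance) -> str:
    """Flag likely zombies and suggest a smaller vCPU count for oversized instances."""
    peak = percentile(inst.cpu_samples, 95)
    if peak < ZOMBIE_P95_THRESHOLD:
        return "zombie candidate: investigate and decommission"
    cores_used = peak * inst.vcpus
    recommended = max(1, math.ceil(cores_used / TARGET_UTILIZATION))
    if recommended < inst.vcpus:
        return f"right-size: {inst.vcpus} -> {recommended} vCPUs"
    return "keep"


if __name__ == "__main__":
    # Hypothetical fleet
    fleet = [
        Instance("batch-01", 8, [0.01, 0.02, 0.01, 0.03]),
        Instance("api-01", 16, [0.10, 0.15, 0.20, 0.12]),
        Instance("db-01", 4, [0.70, 0.80, 0.75, 0.90]),
    ]
    for inst in fleet:
        print(inst.name, "->", assess(inst))
```

Using the p95 rather than the average avoids sizing an instance below its regular peaks. Auto-scaling then absorbs the rarer spikes.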

#6 (about 1 minute)

Tools for measuring energy and carbon emissions

Open-source tools such as Kepler for Kubernetes and Scaphandre for Linux measure energy consumption. That energy can then be converted into carbon emissions using the carbon intensity of the electricity grid.
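
The conversion itself is straightforward arithmetic. Multiply the measured energy in kWh by the grid's carbon intensity in gCO2e/kWh, optionally scaled by the data center's PUE. This sketch leaves out reading the values from Kepler or Scaphandre, which can both expose their measurements as Prometheus metrics. The input numbers are hypothetical.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 MJ


def joules_to_kwh(joules: float) -> float:
    return joules / JOULES_PER_KWH


def operational_emissions_g(energy_joules: float,
                            grid_intensity_g_per_kwh: float,
                            pue: float = 1.0) -> float:
    """Grams of CO2e: IT energy, scaled by PUE for facility overhead, times grid intensity."""
    return joules_to_kwh(energy_joules) * pue * grid_intensity_g_per_kwh


if __name__ == "__main__":
    # Hypothetical: 7.2 MJ measured for a workload, 400 gCO2e/kWh grid, PUE of 1.5
    print(operational_emissions_g(7_200_000, 400, pue=1.5))  # 1200.0
```

The same energy reading yields very different emissions depending on where and when it was consumed, which is what the carbon-awareness techniques later in the talk build on.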

#7 (about 2 minutes)

Tracking emissions with Software Carbon Intensity (SCI)

The Software Carbon Intensity (SCI) ISO standard defines a formula that produces a carbon score per functional unit of your application (for example, per request). That score can be used as a service level objective (SLO) to catch regressions in your CI/CD pipeline.
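
The SCI formula is commonly written as SCI = ((E × I) + M) per R. In it, E is the energy consumed by the software, I is the grid's carbon intensity, M is the share of embodied hardware emissions, and R is the functional unit. Below is a minimal sketch of the score plus a CI gate. The input values, the 0.12 g budget and the exit-code convention are hypothetical. Gathering E, I and M for a real system is the hard part and is not shown.

```python
import sys


def sci_score(energy_kwh: float, intensity_g_per_kwh: float,
              embodied_g: float, functional_units: int) -> float:
    """SCI = ((E * I) + M) per R, in gCO2e per functional unit."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    return (energy_kwh * intensity_g_per_kwh + embodied_g) / functional_units


def meets_slo(score: float, budget: float) -> bool:
    """True if the score stays within the carbon budget agreed as an SLO."""
    return score <= budget


if __name__ == "__main__":
    # Hypothetical: 2 kWh, 400 gCO2e/kWh, 200 g embodied share, 10,000 requests
    score = sci_score(2.0, 400.0, 200.0, 10_000)
    budget = 0.12  # hypothetical SLO in gCO2e per request
    print(f"SCI: {score:.3f} gCO2e/request (budget {budget})")
    if not meets_slo(score, budget):
        sys.exit(1)  # a non-zero exit code fails the CI job
```

Because SCI is a rate, a release that serves the same traffic with less energy lowers the score even if total load grows.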

#8 (about 4 minutes)

Using carbon awareness to shift workloads

By tracking the real-time carbon intensity of the grid, you can time-shift batch jobs to hours with more solar generation. You can also region-shift development workloads to data centers powered by greener electricity.

#9 (about 3 minutes)

Case study on optimizing a GKE cluster

An experiment deploying a microservices application on GKE shows that tuning the default resource requests and enabling auto-scaling raises server utilization and lowers energy use.
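
Resource requests matter because the Kubernetes scheduler places pods based on what they request, not on what they actually use. Inflated requests therefore force more nodes to be provisioned. The sketch below models this with first-fit-decreasing packing of CPU requests onto identical nodes. It is a deliberate simplification (the real scheduler also weighs memory, affinity and other constraints), and the pod count, requests and node size are hypothetical.

```python
def nodes_needed(pod_requests_millicores: list[int], node_allocatable_millicores: int) -> int:
    """First-fit-decreasing packing of pod CPU requests onto identical nodes (simplified)."""
    free: list[int] = []  # remaining allocatable CPU per provisioned node
    for req in sorted(pod_requests_millicores, reverse=True):
        if req > node_allocatable_millicores:
            raise ValueError(f"pod request {req}m exceeds node capacity")
        for i, capacity in enumerate(free):
            if capacity >= req:
                free[i] -= req
                break
        else:
            # No existing node has room: provision another one
            free.append(node_allocatable_millicores - req)
    return len(free)


if __name__ == "__main__":
    # Hypothetical: 20 pods on nodes with 4000m allocatable CPU
    print("generous defaults:", nodes_needed([1000] * 20, 4000))  # 5 nodes
    print("tuned requests:   ", nodes_needed([200] * 20, 4000))   # 1 node
```

Once requests reflect real usage, auto-scaling can add and remove nodes as load changes instead of keeping an oversized cluster running.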

#10 (about 2 minutes)

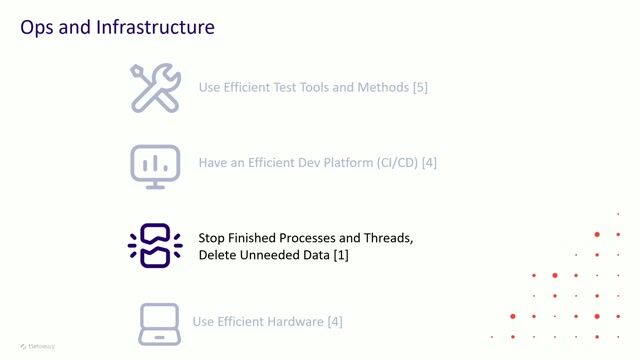

Green coding and on-premises optimization strategies

In on-premises environments, consolidate workloads so that unused nodes can be powered down. At the software level, profile your code to find and fix inefficiencies rather than simply switching programming languages.
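
As an illustration of profiling before rewriting, the sketch below uses Python's built-in `cProfile` and `pstats` modules. The language choice and the example functions are hypothetical. The slow version tests membership against a list, which scans it linearly for every lookup. The fast version converts the list to a set once.

```python
import cProfile
import io
import pstats


def count_known_ids_slow(ids: list[int], known: list[int]) -> int:
    # Each "in" on a list is a linear scan: O(len(ids) * len(known)) overall
    return sum(1 for i in ids if i in known)


def count_known_ids_fast(ids: list[int], known: list[int]) -> int:
    # Building a set once makes each membership test O(1) on average
    known_set = set(known)
    return sum(1 for i in ids if i in known_set)


def profile(func, *args):
    """Run func under cProfile and return (result, top-5 report sorted by cumulative time)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(5)
    return result, stream.getvalue()


if __name__ == "__main__":
    ids = list(range(5_000))
    known = list(range(0, 5_000, 7))  # hypothetical lookup data
    result, report = profile(count_known_ids_slow, ids, known)
    print(report)
    assert result == count_known_ids_fast(ids, known)
```

The fix the profile points to is algorithmic, and it would be needed in any language. This is the talk's point about profiling first instead of switching languages.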
