Lina Weichbrodt

Is my AI alive but brain-dead? How monitoring can tell you if your machine learning stack is still performing

#1 (about 2 minutes)

Why defining the business problem is crucial for monitoring

Machine learning projects often have vague requirements, making it essential to define success KPIs before implementing monitoring.

#2 (about 3 minutes)

A real-world use case for loan rejection prediction

A machine learning model predicts upfront which loan applications will be rejected, so the company can avoid paying for credit agency queries on them and save significant costs every month.

#3 (about 3 minutes)

Using precision and recall for model training

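Precision (the share of predicted rejections that are actually rejected) and recall (the share of all actual rejections that the model catches) are chosen as the key metrics, balancing how reliable the model's rejection predictions are against how many applications it can identify.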
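As a minimal sketch of this trade-off, the snippet below computes precision and recall for the "rejected" class and shows how raising a decision threshold on the model's score tends to trade recall for precision. The labels, scores, and thresholds are made-up toy values for illustration, not data from the talk.

```python
from typing import Sequence


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Precision and recall for the positive class (1 = application rejected)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Precision: of the applications flagged as rejections, how many were rejected?
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of all actual rejections, how many did the model flag?
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical toy data: true outcomes and model scores for eight applications
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.2, 0.7, 0.1, 0.3, 0.05]

# A higher threshold flags fewer applications: precision tends to rise, recall to fall
for threshold in (0.25, 0.5, 0.75):
    y_pred = [1 if s >= threshold else 0 for s in scores]
    p, r = precision_recall(y_true, y_pred)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

Which threshold is acceptable is a business decision: every false positive is a wrongly flagged application, every false negative a credit query that could have been saved.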

#4 (about 2 minutes)

Choosing gradient boosted trees for tabular data

Gradient boosted trees are selected over deep learning for this tabular data problem because they offer comparable performance with much faster training times.

#5 (about 2 minutes)

Using existing tools like Grafana for ML monitoring

You can reuse your existing software monitoring stack, such as Grafana and Prometheus, for machine learning; it is often sufficient and avoids adopting immature ML-specific tools.

#6 (about 6 minutes)

Monitoring model outcomes with a holdout set

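When the model's own intervention hides the true outcome (applications predicted to be rejected are never checked with the credit agency), a holdout set of live traffic, for which the model's decision is not acted upon, is used to calculate production metrics such as precision and recall.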
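A minimal sketch of such a holdout, assuming every application carries a stable ID: a hash of the ID routes a small, hypothetical share (here 5%) of live traffic to a holdout group whose applications are always sent to the credit agency, even when the model predicts a rejection. Only for this group is the true outcome observed without the model interfering, so precision and recall are computed on it alone. The function names, record fields, and threshold are illustrative assumptions.

```python
import hashlib

HOLDOUT_SHARE = 0.05  # hypothetical: 5% of live traffic bypasses the model's decision


def in_holdout(application_id: str, share: float = HOLDOUT_SHARE) -> bool:
    """Deterministically assign an application to the holdout group by hashing its ID."""
    digest = hashlib.sha256(application_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < share


def route(application_id: str, score: float, threshold: float = 0.5,
          share: float = HOLDOUT_SHARE) -> dict:
    """Decide whether to query the credit agency; the field names are hypothetical."""
    predicted_reject = score >= threshold
    holdout = in_holdout(application_id, share)
    return {
        "id": application_id,
        "predicted_reject": predicted_reject,
        "holdout": holdout,
        # Holdout applications are always queried, so their true outcome becomes known;
        # elsewhere the paid query is skipped when the model predicts a rejection
        "query_agency": holdout or not predicted_reject,
    }


def holdout_precision_recall(records: list[dict], outcomes: dict[str, bool]) -> tuple[float, float]:
    """Precision/recall of predicted rejections, using holdout traffic only."""
    tp = fp = fn = 0
    for rec in records:
        if not rec["holdout"] or rec["id"] not in outcomes:
            continue  # outside the holdout, the model's own decision hides the outcome
        actual, predicted = outcomes[rec["id"]], rec["predicted_reject"]
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif actual:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


records = [route(f"app-{i}", (i % 10) / 10) for i in range(10_000)]
print(sum(r["holdout"] for r in records), "of", len(records), "applications in the holdout")
```

Hashing the ID instead of drawing a random number keeps the assignment stable if the same application is scored twice. The holdout is not free (its credit queries are never skipped), which is why it is kept small.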

#7 (about 3 minutes)

Translating stakeholder fears into monitoring signals

Address stakeholder concerns by identifying their worst-case scenarios and creating specific metrics to monitor and alert on those potential issues.
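One way to make this concrete is to write each fear down next to the metric that would reveal it and an alert condition on that metric. The fears, metric names, and thresholds below are hypothetical examples for the loan use case, not the ones presented in the talk.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AlertRule:
    fear: str                       # the stakeholder's worst case, in their words
    metric: str                     # the signal that would reveal it
    fires: Callable[[float], bool]  # True when the current value should alert


# Hypothetical rules and thresholds for the loan rejection use case
RULES = [
    AlertRule("We turn away far too many applicants",
              "predicted_rejection_rate", lambda v: v > 0.30),
    AlertRule("The model silently stops scoring applications",
              "predictions_per_hour", lambda v: v < 10),
    AlertRule("We stop saving money on credit agency queries",
              "predicted_rejection_rate", lambda v: v < 0.05),
]


def evaluate(rules: list[AlertRule], current: dict[str, float]) -> list[str]:
    """Return one alert message per rule whose metric is present and out of bounds."""
    alerts = []
    for rule in rules:
        value = current.get(rule.metric)
        if value is not None and rule.fires(value):
            alerts.append(f"ALERT {rule.metric}={value}: {rule.fear}")
    return alerts


print(evaluate(RULES, {"predicted_rejection_rate": 0.02, "predictions_per_hour": 4}))
```

Keeping the stakeholder's own wording in the alert message makes it obvious to them why an alert fired.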

#8 (about 4 minutes)

Monitoring the model's response distribution for drift

Track the distribution of model outputs over time using statistical distance metrics like the D1 distance to detect shifts that indicate a problem.
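A minimal sketch of response-distribution monitoring, assuming the D1 distance here means the sum of absolute differences between two normalized histograms (an L1 distance, ranging from 0 for identical to 2 for non-overlapping distributions). The bin edges, reference window, scores, and alert threshold are all hypothetical.

```python
import bisect


def histogram(values: list[float], edges: list[float]) -> list[float]:
    """Share of values per bin [edges[i], edges[i+1]); the last bin also includes edges[-1]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # ignore values outside the binned range
        i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[i] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


def d1_distance(p: list[float], q: list[float]) -> float:
    """Assumption: D1 = sum of absolute bin differences (L1), from 0 (same) to 2 (disjoint)."""
    return sum(abs(a - b) for a, b in zip(p, q))


EDGES = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]  # hypothetical bins for scores in [0, 1]
ALERT_THRESHOLD = 0.2                   # hypothetical; tune on historical windows

reference_scores = [0.1, 0.15, 0.3, 0.35, 0.5, 0.55, 0.7, 0.9]  # e.g. last week
today_scores = [0.85, 0.9, 0.95, 0.92, 0.88, 0.5, 0.3, 0.1]      # e.g. today

distance = d1_distance(histogram(reference_scores, EDGES), histogram(today_scores, EDGES))
print(f"D1 distance: {distance:.2f}, alert: {distance > ALERT_THRESHOLD}")
```

A bounded, easy-to-explain distance like this one can be plotted over time in the same dashboard as the other metrics.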

#9 (about 2 minutes)

Creating quality heuristics as sanity checks

Develop simple, human-understandable heuristics, such as the average rank of a user's favorite item, to serve as an intuitive quality indicator.
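The favorite-item heuristic could be sketched as follows, assuming a recommender that returns an ordered list per user and a known favorite item per user (for example, the one they bought most often). Counting a missing favorite as rank `len(list) + 1` is an arbitrary choice made here, and all names and data are hypothetical.

```python
from statistics import mean


def favorite_item_rank(recommendations: list[str], favorite: str) -> int:
    """1-based position of the favorite item; len(recommendations) + 1 if it is missing."""
    try:
        return recommendations.index(favorite) + 1
    except ValueError:
        return len(recommendations) + 1  # arbitrary penalty for a missing favorite


def average_favorite_rank(recs_by_user: dict[str, list[str]],
                          favorite_by_user: dict[str, str]) -> float:
    """Average rank of each user's favorite item; lower is better."""
    ranks = [favorite_item_rank(recs_by_user[user], fav)
             for user, fav in favorite_by_user.items() if user in recs_by_user]
    return mean(ranks) if ranks else float("nan")


# Hypothetical data: recommendations served today and each user's favorite item
recs = {"u1": ["shoes", "socks", "hat"], "u2": ["scarf", "gloves", "coat"]}
favorites = {"u1": "socks", "u2": "boots"}
print(average_favorite_rank(recs, favorites))
```

The absolute value means little on its own; a sudden jump compared with previous days is the signal worth alerting on.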

#10 (about 2 minutes)

Monitoring input data to detect training-serving skew

Compare the distribution of input features between the training environment and live production to identify and debug training-serving skew.
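A minimal sketch of a per-feature skew check that compares the missing-value rate and the mean of each feature between training rows and logged serving rows. The tolerances and the example of an income feature arriving in cents instead of euros are hypothetical; a histogram distance like the one used for model outputs could be applied to inputs as well.

```python
import math
from statistics import mean


def feature_summary(rows: list[dict], feature: str) -> dict[str, float]:
    """Missing-value rate and mean of one numeric feature."""
    values = [row.get(feature) for row in rows]
    present = [v for v in values if v is not None]
    return {
        "missing_rate": 1 - len(present) / len(values) if values else 0.0,
        "mean": mean(present) if present else math.nan,
    }


def skew_report(train_rows: list[dict], serving_rows: list[dict], features: list[str],
                max_missing_diff: float = 0.05, max_rel_mean_diff: float = 0.10) -> list[str]:
    """List features whose serving statistics drift from training; tolerances are hypothetical."""
    issues = []
    for feature in features:
        train, serving = feature_summary(train_rows, feature), feature_summary(serving_rows, feature)
        if abs(train["missing_rate"] - serving["missing_rate"]) > max_missing_diff:
            issues.append(f"{feature}: missing rate {train['missing_rate']:.2f} (train) "
                          f"vs {serving['missing_rate']:.2f} (serving)")
        t_mean, s_mean = train["mean"], serving["mean"]
        if not (math.isnan(t_mean) or math.isnan(s_mean)) and t_mean != 0:
            if abs(s_mean - t_mean) / abs(t_mean) > max_rel_mean_diff:
                issues.append(f"{feature}: mean {t_mean:.2f} (train) vs {s_mean:.2f} (serving)")
    return issues


# Hypothetical rows: in production, income is logged in cents and is sometimes missing
train_rows = [{"income": 3000, "age": 30}, {"income": 4000, "age": 40}]
serving_rows = [{"income": 350000, "age": 35}, {"income": None, "age": 35}]
for issue in skew_report(train_rows, serving_rows, ["income", "age"]):
    print(issue)
```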

#11 (about 4 minutes)

Key takeaways for practical machine learning monitoring

Production monitoring aims to detect problems through indicator KPIs rather than to measure absolute quality, and it can be designed by working backwards from the business impact.

#12 (about 15 minutes)

Q&A on career paths and delayed outcomes

The Q&A session covers topics such as career entry points into machine learning, handling delayed outcomes in business processes, and stakeholder communication.

Matching moments

06:10 MIN

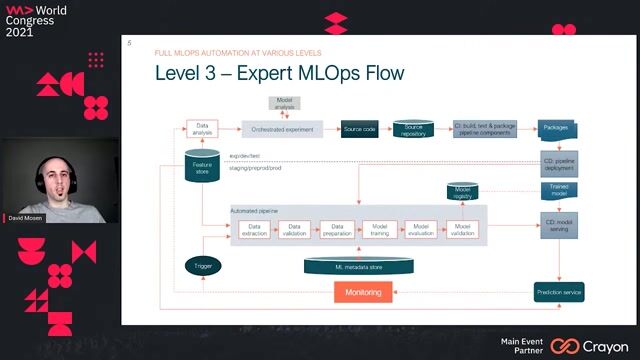

Monitoring model performance and handling concept drift

DevOps for Machine Learning

04:18 MIN

Ensuring AI reliability with monitoring and data governance

Navigating the AI Revolution in Software Development

04:17 MIN

Evaluating the state of current monitoring solutions

Deployed ML models need your feedback too

02:47 MIN

The challenge of operationalizing production machine learning systems

Model Governance and Explainable AI as tools for legal compliance and risk management

03:01 MIN

Common challenges in developing machine learning applications

Data Fabric in Action - How to enhance a Stock Trading App with ML and Data Virtualization

01:07 MIN

The hidden complexity of the machine learning lifecycle

DataForce Studio

07:46 MIN

Exploring the different layers of model monitoring

Deployed ML models need your feedback too

02:06 MIN

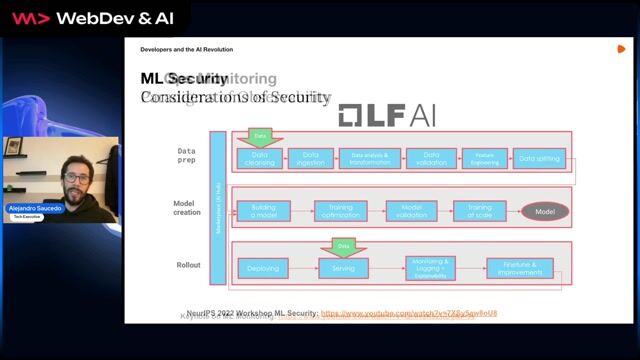

The rise of MLOps and AI security considerations

MLOps and AI Driven Development

Related Videos

37:24

37:24Deployed ML models need your feedback too

David Mosen

47:19

47:19The state of MLOps - machine learning in production at enterprise scale

Bas Geerdink

29:37

29:37Detecting Money Laundering with AI

Stefan Donsa & Lukas Alber

34:21

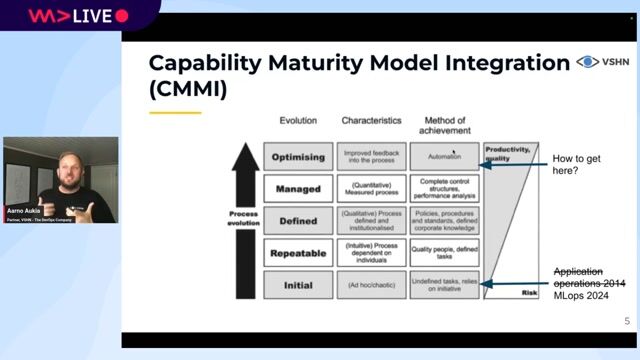

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia

41:45

41:45From Traction to Production: Maturing your LLMOps step by step

Maxim Salnikov

35:16

35:16How AI Models Get Smarter

Ankit Patel

57:46

57:46Overview of Machine Learning in Python

Adrian Schmitt

45:45

45:45Effective Machine Learning - Managing Complexity with MLOps

Simon Stiebellehner
