Milan Todorović

Harnessing Apple Intelligence: Live Coding with Swift for iOS

#1 (about 2 minutes)

Introducing Apple Intelligence and its privacy-first approach

The talk presents Apple Intelligence as a single name that unifies Apple's AI frameworks, with an emphasis on on-device processing to protect user privacy.

#2 (about 6 minutes)

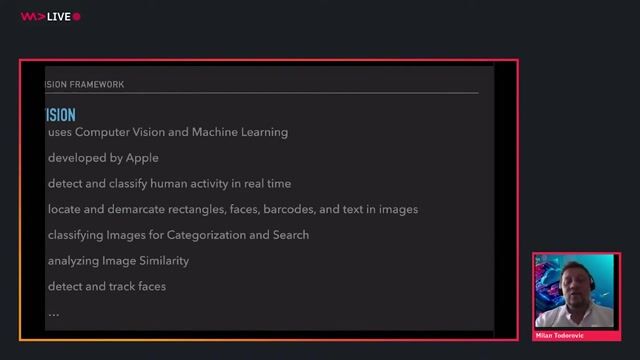

Exploring Apple's core machine learning frameworks

A tour of key Apple frameworks, including Core ML, Vision, Natural Language, and Create ML, shows how they enable on-device machine learning.
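As a sketch of the kind of on-device capability these frameworks provide, the Natural Language framework's `NLTagger` can find personal names in text without any network call. This assumes an Apple platform where `NaturalLanguage` is available; `personalNames(in:)` and `mentionCounts(_:)` are hypothetical helper names, not Apple APIs, and this is not code from the talk.

```swift
#if canImport(NaturalLanguage)
import NaturalLanguage

/// Hypothetical helper: returns personal names found by the
/// name-type tagger, in the order they appear in `text`.
func personalNames(in text: String) -> [String] {
    let tagger = NLTagger(tagSchemes: [.nameType])
    tagger.string = text
    // Skip whitespace and punctuation, and merge multi-word names ("Ada Lovelace").
    let options: NLTagger.Options = [.omitWhitespace, .omitPunctuation, .joinNames]
    var names: [String] = []
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .nameType,
                         options: options) { tag, range in
        if tag == .personalName {
            names.append(String(text[range]))
        }
        return true // keep enumerating
    }
    return names
}
#endif

/// Hypothetical helper: counts how often each name is mentioned.
func mentionCounts(_ names: [String]) -> [String: Int] {
    names.reduce(into: [:]) { counts, name in counts[name, default: 0] += 1 }
}
```

This is a fixed-purpose tagger rather than a general LLM: it needs no prompting, but it only recognizes the entity types its scheme supports (personal, place, and organization names).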

#3 (about 4 minutes)

Understanding the capabilities of Apple Intelligence

Apple Intelligence adds system-wide features, including Writing Tools, image generation, and an enhanced Siri, while the App Intents framework lets apps expose their own actions to Siri and the rest of the system.
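To make the App Intents idea concrete, here is a minimal sketch of an intent that the system (Siri, Shortcuts) could run on an app's behalf. It assumes an iOS 16 or later SDK; `CountWordsIntent` and `wordCount(_:)` are hypothetical names used only for illustration.

```swift
#if canImport(AppIntents)
import AppIntents

/// Hypothetical intent: exposes a "Count Words" action to the system.
struct CountWordsIntent: AppIntent {
    static let title: LocalizedStringResource = "Count Words"

    // The system asks the user (or a Shortcut) for this value.
    @Parameter(title: "Text")
    var text: String

    func perform() async throws -> some IntentResult & ReturnsValue<Int> {
        .result(value: wordCount(text))
    }
}
#endif

/// Hypothetical helper: counts whitespace-separated words, so the same
/// logic can be shared by the intent and the app's own UI.
func wordCount(_ text: String) -> Int {
    text.split(whereSeparator: { $0.isWhitespace }).count
}
```

Keeping the logic in a plain function means the intent stays a thin adapter between the system and the app.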

#4 (about 12 minutes)

Live coding an on-device LLM app with Swift

A simple iOS app is built from scratch with Swift, SwiftUI, and the new Foundation Models framework, which gives the app access to an on-device LLM.
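A minimal sketch of such an app, assuming the iOS 26 SDK where `FoundationModels` is available. `ChatView` and `trimmedPrompt(_:)` are hypothetical names, and this is not the code written during the talk.

```swift
import Foundation
#if canImport(FoundationModels) && canImport(SwiftUI)
import SwiftUI
import FoundationModels

struct ChatView: View {
    @State private var prompt = ""
    @State private var answer = ""
    @State private var isBusy = false
    // @State keeps one session (and its conversation history) across view updates.
    @State private var session = LanguageModelSession()

    var body: some View {
        VStack(alignment: .leading, spacing: 12) {
            TextField("Ask the on-device model", text: $prompt)
                .textFieldStyle(.roundedBorder)
            Button("Send") {
                Task { await send() }
            }
            .disabled(isBusy || trimmedPrompt(prompt) == nil)
            ScrollView { Text(answer) }
        }
        .padding()
    }

    private func send() async {
        guard let text = trimmedPrompt(prompt) else { return }
        isBusy = true
        defer { isBusy = false }
        do {
            // The system language model runs on device; no network request is made.
            let response = try await session.respond(to: text)
            answer = response.content
        } catch {
            answer = "Error: \(error.localizedDescription)"
        }
    }
}
#endif

/// Hypothetical helper: returns the prompt without surrounding
/// whitespace, or nil if nothing is left to send.
func trimmedPrompt(_ raw: String) -> String? {
    let trimmed = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    return trimmed.isEmpty ? nil : trimmed
}
```

The `isBusy` flag disables the button while a response is pending, since a session handles one request at a time.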

#5 (about 1 minute)

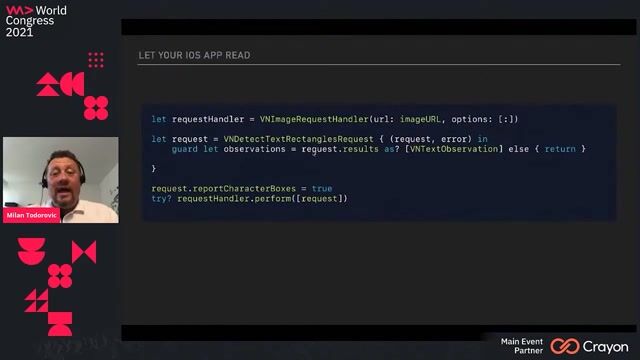

Using the on-device model for text extraction

A practical demonstration shows how the on-device LLM can perform complex tasks like extracting specific entities, such as personal names, from a large block of text.
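One way to do this kind of extraction, sketched under the assumption of the iOS 26 Foundation Models API, is guided generation: the `@Generable` macro lets `respond(to:generating:)` return a typed Swift value instead of free text. `ExtractedNames`, `extractNames(from:)`, and `normalizedNames(_:)` are hypothetical names, and this is not necessarily how the demo in the talk does it.

```swift
import Foundation
#if canImport(FoundationModels)
import FoundationModels

/// Hypothetical output type; the model fills it in directly.
@Generable
struct ExtractedNames {
    @Guide(description: "Full names of people mentioned in the text")
    var names: [String]
}

/// Hypothetical helper: asks the on-device model for the people named in `text`.
func extractNames(from text: String) async throws -> [String] {
    let session = LanguageModelSession(
        instructions: "Extract the names of people from the text the user provides. Do not invent names."
    )
    let response = try await session.respond(
        to: "Text:\n\(text)",
        generating: ExtractedNames.self
    )
    return normalizedNames(response.content.names)
}
#endif

/// Hypothetical helper: trims names, drops empty entries, and removes
/// case-insensitive duplicates while keeping the first spelling seen.
func normalizedNames(_ raw: [String]) -> [String] {
    var seen = Set<String>()
    var result: [String] = []
    for name in raw {
        let trimmed = name.trimmingCharacters(in: .whitespacesAndNewlines)
        guard !trimmed.isEmpty else { continue }
        if seen.insert(trimmed.lowercased()).inserted {
            result.append(trimmed)
        }
    }
    return result
}
```

Post-processing the model's output in ordinary code keeps the result predictable even if the model repeats a name.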

#6 (about 4 minutes)

Exploring the Foundation Models documentation and opportunities

A review of the official documentation shows how to configure and use `LanguageModelSession`, and the talk closes by encouraging developers to put these new AI capabilities to work.
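Typical configuration steps include checking that the on-device model is available, giving the session instructions, and tuning generation options. A sketch of those steps, assuming the iOS 26 SDK; `makeSessionIfAvailable()`, `ask(_:_:)`, and `makeInstructions(role:maxSentences:)` are hypothetical helpers, not part of the framework.

```swift
#if canImport(FoundationModels)
import FoundationModels

/// Hypothetical helper: creates a session only when the on-device model can run.
func makeSessionIfAvailable() -> LanguageModelSession? {
    switch SystemLanguageModel.default.availability {
    case .available:
        return LanguageModelSession(
            instructions: makeInstructions(role: "a concise assistant in an iOS app", maxSentences: 2)
        )
    case .unavailable(let reason):
        // e.g. device not eligible, Apple Intelligence turned off, or model not ready yet
        print("On-device model unavailable: \(reason)")
        return nil
    }
}

/// Hypothetical helper: sends one turn; the session keeps earlier turns in its transcript.
func ask(_ session: LanguageModelSession, _ question: String) async throws -> String {
    // A lower temperature gives more focused, less varied answers.
    let options = GenerationOptions(temperature: 0.3)
    let response = try await session.respond(to: question, options: options)
    return response.content
}
#endif

/// Hypothetical helper that builds the session's instructions text.
func makeInstructions(role: String, maxSentences: Int) -> String {
    "You are \(role). Answer in at most \(maxSentences) sentences."
}
```

Checking availability first lets the app hide or replace AI features on devices where Apple Intelligence is not available, instead of failing at the first request.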

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

38:07 MIN

Exploring the future of AI beyond simple code generation

Innovating Developer Tools with AI: Insights from GitHub Next

19:45 MIN

Shaping the future of AI in software development

Developer Experience in the Age of AI

09:55 MIN

Shifting from traditional code to AI-powered logic

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

17:41 MIN

Presenting live web scraping demos at a developer conference

Tech with Tim at WeAreDevelopers World Congress 2024

25:33 MIN

AI privacy concerns and prompt engineering

Coffee with Developers - Cassidy Williams -

14:10 MIN

Leveraging open software and AI for code development

The Future of Computing: AI Technologies in the Exascale Era

29:35 MIN

The rise of "vibe coding" and AI-generated products

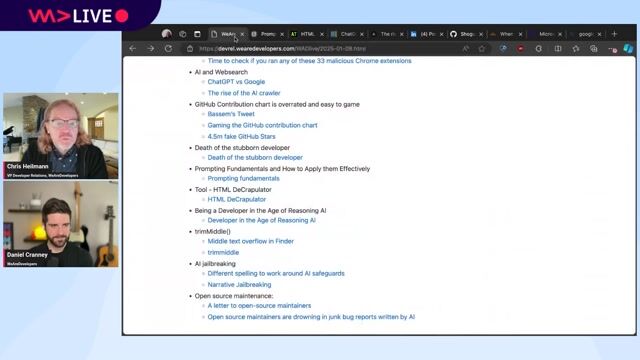

WeAreDevelopers LIVE - the weekly developer show with Chris Heilmann and Daniel Cranney

52:40 MIN

AI's evolving reasoning and thoughtful UI design

WeAreDevelopers Live: Browser Extensions, Honey Scam, Jailbreaking LLMs and more
