Milan Todorovic

Detect Hand Pose with Vision

#1 (about 2 minutes)

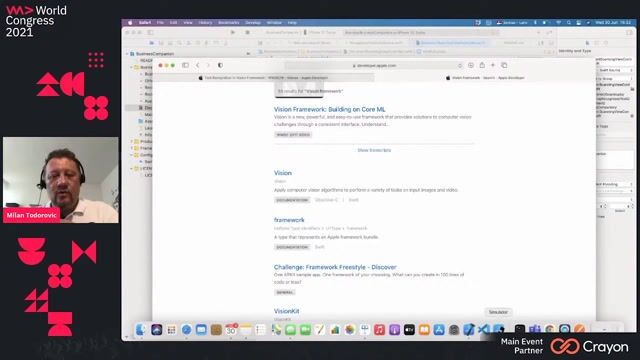

Understanding the capabilities of Apple's Vision framework

The Vision framework provides out-of-the-box machine learning tools for image analysis, including object detection, image classification, and face tracking.

#2 (about 3 minutes)

Recognizing 21 distinct hand landmarks with Vision

Vision describes a hand pose with 21 landmarks: the wrist plus four points on each finger and on the thumb (1 + 5 × 4 = 21).
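As a quick sanity check on the count, the plain-Swift enum below lists one case per landmark. The `HandLandmark` type is hypothetical and exists only for illustration; its case names follow the style of Vision's `VNHumanHandPoseObservation.JointName` constants (for example `.wrist`, `.thumbTip`, `.indexMCP`), which is what real Vision code would use instead.

```swift
/// Hypothetical enum with one case per hand landmark Vision reports.
/// Real Vision code uses VNHumanHandPoseObservation.JointName instead.
enum HandLandmark: String, CaseIterable {
    // 1 point for the wrist
    case wrist
    // Thumb: carpometacarpal, metacarpophalangeal, interphalangeal joints, then the tip
    case thumbCMC, thumbMP, thumbIP, thumbTip
    // Each finger: metacarpophalangeal, proximal and distal interphalangeal joints, then the tip
    case indexMCP, indexPIP, indexDIP, indexTip
    case middleMCP, middlePIP, middleDIP, middleTip
    case ringMCP, ringPIP, ringDIP, ringTip
    case littleMCP, littlePIP, littleDIP, littleTip
}

// 1 wrist + 5 digits × 4 points each = 21
print(HandLandmark.allCases.count) // prints 21
```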

#3 (about 1 minute)

Common issues and limitations in hand pose detection

Hand pose recognition can fail due to common real-world issues like partial occlusion, hands near screen edges, wearing gloves, or confusing hands with feet.

#4 (about 4 minutes)

Exploring the structure of the hand tracking Xcode project

The sample application is built around three main components: a CameraView for display, a ViewController for control logic, and a HandGestureProcessor for analyzing gestures.

#5 (about 2 minutes)

Live demo of a drawing app using pinch gestures

A live demonstration shows how to use the tips of the thumb and index finger to create a pinch gesture that draws lines on the iPhone screen.

#6 (about 4 minutes)

Key classes and properties for implementing hand tracking

The implementation relies on a few key classes: CameraViewController, VNDetectHumanHandPoseRequest for the hand pose analysis, and UIBezierPath for drawing the visual feedback.

#7 (about 5 minutes)

Processing hand pose observations from the Vision framework

The VNImageRequestHandler performs the request on each camera buffer and returns hand pose observations, from which you can extract the coordinates of specific finger joints, such as the thumb tip.
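A minimal sketch of that step, assuming an iOS 15 / macOS 12 or later SDK (where `request.results` is typed as `[VNHumanHandPoseObservation]?`) and a capture pipeline that delivers a `CMSampleBuffer`. The function names, the `.up` orientation, and the 0.3 confidence cut-off are assumptions for illustration, not values taken from the talk. Vision returns normalized coordinates with the origin at the lower left, so the second helper flips the y axis for top-left-origin coordinate systems such as UIKit's.

```swift
import Vision
import CoreMedia
import CoreGraphics
import ImageIO

/// Hypothetical helper: runs hand pose detection on one camera frame and returns
/// the thumb-tip and index-tip locations in Vision's normalized coordinates.
/// Returns nil when no hand is found or either point has low confidence.
func thumbAndIndexTips(
    in sampleBuffer: CMSampleBuffer,
    minimumConfidence: VNConfidence = 0.3  // hypothetical threshold
) throws -> (thumb: CGPoint, index: CGPoint)? {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1  // a pinch only needs one hand

    // Orientation is an assumption; the right value depends on the camera setup.
    let handler = VNImageRequestHandler(cmSampleBuffer: sampleBuffer,
                                        orientation: .up,
                                        options: [:])
    try handler.perform([request])

    guard let observation = request.results?.first else { return nil }

    let thumbTip = try observation.recognizedPoint(.thumbTip)
    let indexTip = try observation.recognizedPoint(.indexTip)

    // Occluded or partially visible fingers tend to come back with low confidence.
    guard thumbTip.confidence > minimumConfidence,
          indexTip.confidence > minimumConfidence else { return nil }

    return (thumb: thumbTip.location, index: indexTip.location)
}

/// Flips a normalized Vision point (origin lower left) to a top-left origin.
func flippedToTopLeft(_ point: CGPoint) -> CGPoint {
    CGPoint(x: point.x, y: 1 - point.y)
}
```

In a live camera pipeline it is usually preferable to create the `VNDetectHumanHandPoseRequest` once and reuse it for every frame, rather than building a new one per call as this sketch does for brevity.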

#8 (about 2 minutes)

Implementing gesture state logic for pinch detection

A custom processor manages gesture states such as 'pinched' and 'apart' by calculating the distance between fingertip landmarks and using a counter for stability, so that a state change only takes effect after several consecutive frames agree.
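The idea can be sketched in plain Swift. This is a simplified illustration, not the sample project's `HandGestureProcessor`: `Point`, `PinchDetector`, the 40-point distance threshold, and the three-frame trigger are all hypothetical. Each frame is classified by fingertip distance, and the detector only commits to `.pinched` or `.apart` after enough consecutive frames agree; until then it reports `.possiblePinch` or `.possibleApart`.

```swift
/// Minimal 2-D point so the sketch runs without UIKit or CoreGraphics.
struct Point {
    var x: Double
    var y: Double

    func distance(to other: Point) -> Double {
        let dx = x - other.x, dy = y - other.y
        return (dx * dx + dy * dy).squareRoot()
    }
}

/// Hypothetical pinch detector: classifies each frame by fingertip distance and
/// only commits to .pinched / .apart after several consecutive agreeing frames.
struct PinchDetector {
    enum State { case unknown, possiblePinch, pinched, possibleApart, apart }

    private(set) var state: State = .unknown
    private var pinchEvidence = 0
    private var apartEvidence = 0

    let pinchMaxDistance: Double  // hypothetical threshold, same units as the points
    let framesRequired: Int       // consecutive frames needed before committing

    init(pinchMaxDistance: Double = 40, framesRequired: Int = 3) {
        self.pinchMaxDistance = pinchMaxDistance
        self.framesRequired = framesRequired
    }

    /// Feeds one frame of thumb-tip and index-tip positions and returns the new state.
    @discardableResult
    mutating func update(thumbTip: Point, indexTip: Point) -> State {
        if thumbTip.distance(to: indexTip) < pinchMaxDistance {
            pinchEvidence += 1
            apartEvidence = 0  // any "close" frame resets the apart streak
            state = pinchEvidence >= framesRequired ? .pinched : .possiblePinch
        } else {
            apartEvidence += 1
            pinchEvidence = 0  // any "far" frame resets the pinch streak
            state = apartEvidence >= framesRequired ? .apart : .possibleApart
        }
        return state
    }
}

// Usage: three consecutive close frames (about 11 points apart) confirm a pinch.
var detector = PinchDetector()
let thumb = Point(x: 100, y: 100)
let index = Point(x: 110, y: 105)
for _ in 0..<3 { detector.update(thumbTip: thumb, indexTip: index) }
print(detector.state) // prints pinched
```

Requiring several agreeing frames trades a few frames of latency for robustness: a single noisy detection no longer flips the state, which keeps the drawn line from breaking up.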

#9 (about 1 minute)

Applying similar techniques for human body pose detection

The same principles used for hand pose apply to full-body pose detection with VNDetectHumanBodyPoseRequest, which tracks body landmarks such as the shoulders, eyes, and ears.
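For comparison, a body-pose sketch follows the same request, handler, and observation pattern, swapping in `VNDetectHumanBodyPoseRequest` and `VNHumanBodyPoseObservation`. The function name, the choice of joints, and the 0.3 confidence threshold are assumptions for illustration; the typed `results` array again assumes an iOS 15 / macOS 12 or later SDK.

```swift
import Vision
import CoreGraphics
import ImageIO

/// Joints this sketch reads from a body-pose observation (chosen for illustration).
let trackedJoints: [VNHumanBodyPoseObservation.JointName] = [
    .leftShoulder, .rightShoulder, .leftEye, .rightEye, .leftEar, .rightEar
]

/// Hypothetical helper: detects body poses in a still image and returns the
/// confidently located tracked joints of the first person, in normalized coordinates.
func headAndShoulderJoints(
    in image: CGImage,
    minimumConfidence: VNConfidence = 0.3  // hypothetical threshold
) throws -> [VNHumanBodyPoseObservation.JointName: CGPoint] {
    let request = VNDetectHumanBodyPoseRequest()
    let handler = VNImageRequestHandler(cgImage: image, orientation: .up, options: [:])
    try handler.perform([request])

    guard let observation = request.results?.first else { return [:] }

    // recognizedPoints(.all) returns every joint Vision located, keyed by joint name.
    let points = try observation.recognizedPoints(.all)
    var result: [VNHumanBodyPoseObservation.JointName: CGPoint] = [:]
    for joint in trackedJoints {
        if let point = points[joint], point.confidence > minimumConfidence {
            result[joint] = point.location
        }
    }
    return result
}
```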

#10 (about 3 minutes)

Exploring potential applications for pose detection

Pose detection technology can be used to build applications that understand sign language, analyze human interaction in images, or create new forms of user input.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:20 MIN

Exploring Apple's core machine learning frameworks

Harnessing Apple Intelligence: Live Coding with Swift for iOS

07:12 MIN

Using Vision Camera and frame processors for real-time video

Building Better Apps with React Native

27:25 MIN

Showcasing computer vision project examples

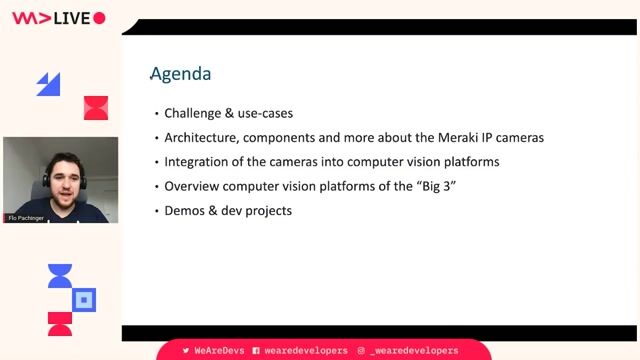

Computer Vision from the Edge to the Cloud done easy

19:24 MIN

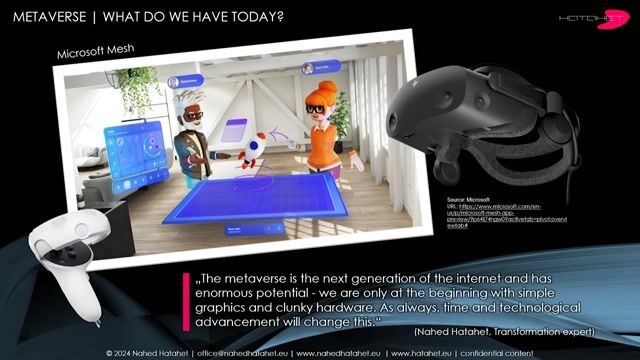

The current state and future of MR hardware

Metaverse, the workplace of the future - Holoportation, the next generation of communication

29:12 MIN

Resources and other capabilities of the Vision framework

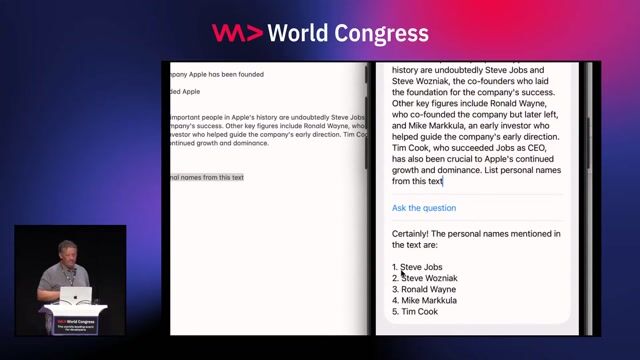

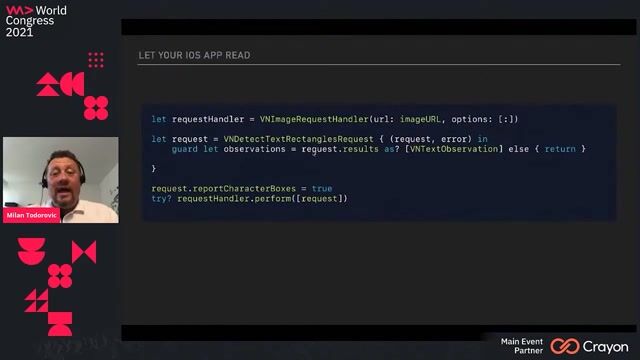

Let your iOS app read texts

23:28 MIN

Apple Vision Pro and the current device landscape

XR Demystified: Separating Facts from Fiction in 2024

24:46 MIN

Exploring the Foundation Models documentation and opportunities

Harnessing Apple Intelligence: Live Coding with Swift for iOS

22:42 MIN

A live demo of a robot's vision and AI brain

Robots 2.0: When artificial intelligence meets steel

Featured Partners

Related Videos

36:34

36:34Let your iOS app read texts

Milan Todorovic

48:21

48:21AR Kit intro - placing 3D objects in a scene and interacting with them in real-time

Nermin Sehic

28:54

28:54Harnessing Apple Intelligence: Live Coding with Swift for iOS

MIlan Todorović

48:38

48:38Computer Vision from the Edge to the Cloud done easy

Flo Pachinger

27:23

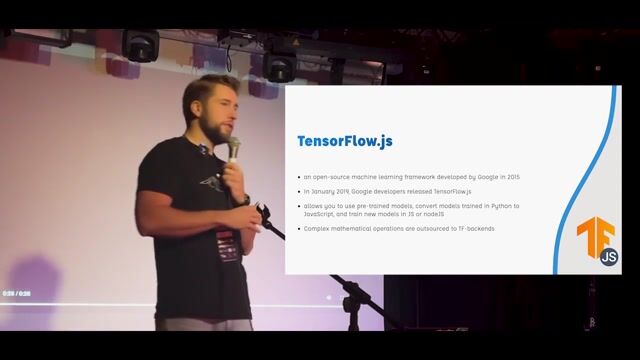

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

42:50

42:50Making the switch from web to mobile development

Milica Aleksic

28:48

28:48Apple Vision Pro: Proven Development Methods Meet the Latest Technology

Mario Petricevic

34:34

34:34Vision for Websites: Training Your Frontend to See

Daniel Madalitso Phiri

From learning to earning

Jobs that call for the skills explored in this talk.

Computer Vision and Machine Learning Engineer - Apple Vision Pro

Apple Inc

Zürich, Switzerland

€80-103K

Senior

C++

NumPy

Python

Pandas

+5

Computer Vision and Artificial Intelligence Developer

Vicomtech

Municipality of Bilbao, Spain

Keras

Python

PyTorch

TensorFlow

Data analysis

+3

AIML -Machine Learning Research, DMLI

Apple

Zürich, Switzerland

Python

PyTorch

TensorFlow

Machine Learning

Natural Language Processing

ML/DevOps Engineer at dynamic AI/ Computer Vision company

Nomitri

Berlin, Germany

C++

Bash

Azure

DevOps

Python

+12

Visionary Fullstack Developer (with ML expertise) - future CTO track

ModaResa

Paris, France

REST

React

Python

NestJS

Node.js

+5

Computer Vision and AI Staff Engineer

GoPro

Canton of Issy-les-Moulineaux, France

Remote

GIT

Python

PyTorch

TensorFlow

+3

Lead AI/ML Engineer (SportsTech - Computer Vision)

Animo Group

Charing Cross, United Kingdom

€63K

Senior

Python

PyTorch

TensorFlow

Computer Vision

+1