Karol Przystalski

Explainable machine learning explained

#1 about 2 minutes

The growing importance of explainable AI in modern systems

Machine learning has become widespread, creating a critical need to understand how models make decisions beyond simple accuracy metrics.

#2 about 4 minutes

Why regulated industries like medtech and fintech require explainability

In fields like medicine and finance, regulatory compliance and user trust make it mandatory to explain how AI models arrive at their conclusions.

#3 about 3 minutes

Identifying the key stakeholders who need model explanations

Explainability is crucial for various roles, including domain experts like doctors, regulatory agencies, business leaders, data scientists, and end-users.

#4 about 4 minutes

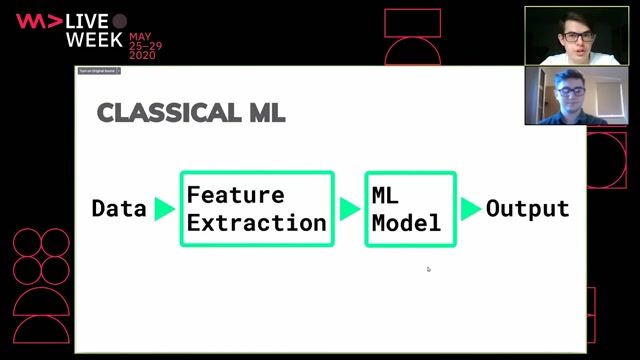

Fundamental approaches for explaining AI model behavior

Models can be explained through various methods such as mathematical formulas, visual charts, local examples, simplification, and analyzing feature relevance.

#5 about 5 minutes

Learning from classic machine learning model failures

Examining famous failures, like the husky vs. wolf classification and the Tay chatbot, reveals how models can learn incorrect patterns from biased data.

#6 about 5 minutes

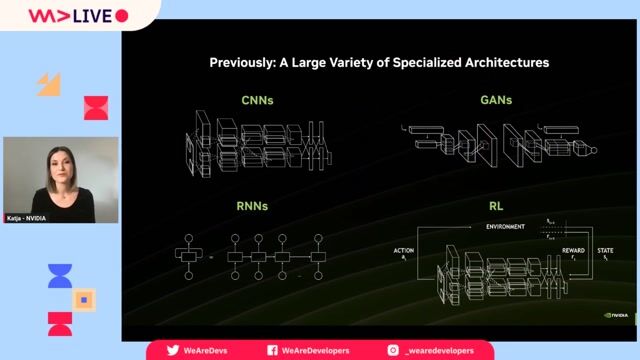

Differentiating between white-box and black-box models

White-box models like decision trees are inherently transparent, whereas black-box models like neural networks require special techniques to interpret their internal workings.

#7 about 7 minutes

Improving model performance with data-centric feature engineering

A data-centric approach, demonstrated with the Titanic dataset, shows how creating new features from existing data can significantly boost model accuracy.
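
The summary does not say which features the talk derives, so the following is only a minimal sketch of the idea. It assumes the column names `Name`, `SibSp`, and `Parch` from the public Titanic dataset and uses made-up passenger records; the derived features `Title`, `FamilySize`, and `IsAlone` are common choices for this dataset, not necessarily the speaker's.

```python
import re

# Made-up records in the shape of the public Titanic data (assumed columns).
passengers = [
    {"Name": "Doe, Mr. John", "SibSp": 1, "Parch": 0},
    {"Name": "Roe, Miss. Jane", "SibSp": 0, "Parch": 0},
    {"Name": "Poe, Master. Tim", "SibSp": 3, "Parch": 1},
]

# The honorific sits between the comma and the first period of the name.
TITLE_PATTERN = re.compile(r",\s*([^.]+)\.")


def add_features(row):
    """Return a copy of the row with three derived features."""
    match = TITLE_PATTERN.search(row["Name"])
    family_size = row["SibSp"] + row["Parch"] + 1  # +1 counts the passenger
    return {
        **row,
        "Title": match.group(1).strip() if match else "Unknown",
        "FamilySize": family_size,
        "IsAlone": int(family_size == 1),
    }


engineered = [add_features(p) for p in passengers]
for row in engineered:
    print(row["Title"], row["FamilySize"], row["IsAlone"])
```

None of these features adds new information to the dataset. They only expose structure that a model would otherwise have to discover on its own from raw strings and counts.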

#8 about 4 minutes

Exploring inherently interpretable white-box models

Models such as logistic regression, k-means, decision trees, and SVMs are considered explainable by design due to their transparent decision-making processes.
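
To illustrate what "explainable by design" can look like for logistic regression, here is a small sketch that fits the model with plain gradient descent on synthetic data and then reads the explanation directly off the learned weights. The data, the true weight of 2.0, and the helper `fit_logistic` are assumptions made for this illustration and do not come from the talk.

```python
import math
import random

random.seed(0)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Synthetic data: only feature 0 influences the label (true weight 2.0),
# feature 1 is pure noise.
X = [[random.gauss(0, 1), random.gauss(0, 1)] for _ in range(400)]
y = [1 if random.random() < sigmoid(2.0 * x0) else 0 for x0, _ in X]


def fit_logistic(X, y, lr=0.5, epochs=300):
    """Batch gradient descent on the mean log-loss."""
    w, b = [0.0] * len(X[0]), 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


weights, bias = fit_logistic(X, y)
# exp(weight) is the factor by which the odds change per unit of the feature.
odds_ratios = [math.exp(wj) for wj in weights]
for j, (wj, oj) in enumerate(zip(weights, odds_ratios)):
    print(f"feature {j}: weight={wj:+.2f}, odds ratio per unit={oj:.2f}")
```

The weight of the informative feature should come out clearly larger than that of the noise feature, and each weight has a direct reading as a change in log-odds. No separate explanation technique is needed.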

#9 about 5 minutes

Using methods like LIME and SHAP to explain black-box models

Techniques like Partial Dependence Plots (PDP), LIME, and SHAP are used to understand the influence of features on the predictions of complex black-box models.
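
SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values by brute force for a tiny, hypothetical `black_box` function, replacing "absent" features with values from a baseline input. It does not use the `shap` library, which relies on approximations and model-specific algorithms to stay tractable. It is only meant to show what the attributions mean.

```python
from itertools import combinations
from math import factorial


def black_box(x):
    """Hypothetical opaque model with an interaction between features 1 and 2."""
    return 3.0 * x[0] + 2.0 * x[1] * x[2]


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Probability that a random ordering puts exactly this
            # coalition of `size` features in front of feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(black_box, x, baseline)
print(phi)  # approximately [3.0, 6.0, 6.0]
print(sum(phi), black_box(x) - black_box(baseline))  # both approximately 15.0
```

The attributions add up to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. The cost grows exponentially with the number of features, which is why practical tools approximate.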

#10 about 3 minutes

Visualizing deep learning decisions in images with Grad-CAM

Grad-CAM (Gradient-weighted Class Activation Mapping) creates heatmaps to highlight which parts of an image were most influential for a deep neural network's classification.
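
The core combination step of Grad-CAM can be sketched in a few lines of NumPy. The sketch assumes the feature maps of a convolutional layer and the gradients of the class score with respect to those maps have already been extracted from a deep learning framework; here they are replaced by random arrays. The function name `grad_cam` and the final scaling to [0, 1] are illustrative choices.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Combine convolutional feature maps into a class heatmap.

    activations: array (K, H, W), feature maps of the chosen conv layer
    gradients:   array (K, H, W), gradient of the class score w.r.t. those maps
    """
    # One importance weight per channel: global average of its gradients.
    channel_weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps over the channel axis -> (H, W).
    cam = np.tensordot(channel_weights, activations, axes=1)
    # ReLU keeps only regions that speak in favor of the class.
    cam = np.maximum(cam, 0.0)
    # Scaling to [0, 1] is a display convenience, not part of the method.
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam


# Stand-in inputs; in practice these come from a forward and backward pass.
rng = np.random.default_rng(0)
activations = rng.random((4, 7, 7))
gradients = rng.normal(size=(4, 7, 7))

heatmap = grad_cam(activations, gradients)
print(heatmap.shape)
```

In a real pipeline the low-resolution heatmap is then upsampled to the input image size and overlaid on the image.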

#11 about 3 minutes

Understanding security risks from adversarial attacks on models

Adversarial attacks demonstrate how small, often imperceptible, changes to input data can cause machine learning models to make completely wrong predictions.
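
One well-known way to construct such a perturbation is the fast gradient sign method (FGSM). The summary does not say which attack the talk covers, so this is an assumed example. The sketch applies FGSM to a hypothetical, already-trained logistic regression model, where the gradient of the loss with respect to the input has a closed form. The weights, the input, and `epsilon` are made up.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, already-trained linear classifier.
weights = [1.5, -2.0, 0.5]
bias = 0.1


def predict_proba(x):
    """Probability of class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(x, y_true, epsilon):
    """Shift every feature by epsilon in the direction that increases the log-loss."""
    p = predict_proba(x)
    # For logistic regression, d(loss)/d(x_i) = (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.2, -0.1, 0.3]
y_true = 1
x_adv = fgsm(x, y_true, epsilon=0.25)

print(predict_proba(x))      # above 0.5: classified as class 1
print(predict_proba(x_adv))  # below 0.5: classified as class 0
```

With only three features, the perturbation has to be fairly large to flip the decision. In high-dimensional inputs such as images, the same effect accumulates over thousands of pixels, so a much smaller per-pixel change can be enough.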

Related Videos

29:30

29:30Model Governance and Explainable AI as tools for legal compliance and risk management

Kilian Kluge & Isabel Bär

35:16

35:16How AI Models Get Smarter

Ankit Patel

32:54

32:54The pitfalls of Deep Learning - When Neural Networks are not the solution

Adrian Spataru & Bohdan Andrusyak

26:55

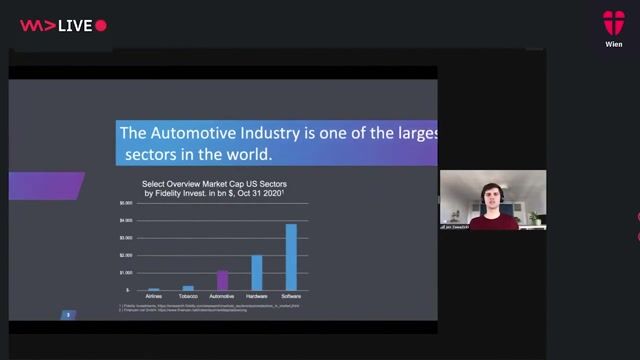

26:55How Machine Learning is turning the Automotive Industry upside down

Jan Zawadzki

56:55

56:55Multimodal Generative AI Demystified

Ekaterina Sirazitdinova

29:37

29:37Detecting Money Laundering with AI

Stefan Donsa & Lukas Alber

30:49

30:49AI beyond the code: Master your organisational AI implementation.

Marin Niehues

27:26

27:26Staying Safe in the AI Future

Cassie Kozyrkov
