Rainer Stropek

Develop AI-powered Applications with OpenAI Embeddings and Azure Search

#1 about 3 minutes

Understanding embedding vectors as numerical representations

Embedding vectors convert complex concepts like text or personality into multi-dimensional numerical arrays, enabling comparison and clustering.

#2 about 7 minutes

Working with the OpenAI embeddings API and cosine similarity

The OpenAI API provides an endpoint that generates a 1,536-dimensional vector for a given text. Because these vectors are normalized to unit length, their cosine similarity can be calculated efficiently with a plain dot product.
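To make the dot-product shortcut concrete, here is a minimal sketch in plain Python. It assumes unit-length vectors, which is how OpenAI's documentation describes its embeddings; the three-dimensional vectors are made-up stand-ins for real 1,536-dimensional embeddings, and no API call is made.

```python
import math

def dot(a, b):
    """Dot product of two equally long vectors."""
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]

def cosine_similarity(a, b):
    """Full cosine similarity, valid for vectors of any length."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Toy vectors (assumption): stand-ins for two text embeddings.
a = normalize([1.0, 2.0, 2.0])
b = normalize([2.0, 1.0, 2.0])

# For unit-length vectors both values agree, so the cheaper dot product suffices.
print(round(cosine_similarity(a, b), 6))
print(round(dot(a, b), 6))
```

For vectors that are not normalized, the two values differ, and the full cosine formula is the safer choice.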

#3 about 5 minutes

Building custom applications with the OpenAI chat API

The chat completions API allows developers to build custom applications by sending a model the entire chat history, including system prompts and user messages.
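As a rough illustration of what "sending the entire chat history" means, the sketch below keeps a conversation as a list of role-tagged messages, the shape the chat completions API expects. The helper names `start_conversation` and `add_turn` are hypothetical, the sample texts are invented, and the actual HTTP request is left out.

```python
def start_conversation(system_prompt):
    """Begin a history with the system prompt as its first message."""
    return [{"role": "system", "content": system_prompt}]

def add_turn(history, role, content):
    """Append a user or assistant message to the history."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unexpected role: {role}")
    history.append({"role": role, "content": content})
    return history

history = start_conversation("You are a helpful assistant for a school wiki.")
add_turn(history, "user", "When does school start?")
add_turn(history, "assistant", "(model reply would be stored here)")
add_turn(history, "user", "And on Fridays?")

# The model keeps no state between requests, so the whole list,
# not just the latest question, is the payload of the next call.
for message in history:
    print(message["role"], "->", message["content"])
```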

#4 about 3 minutes

Implementing the Retrieval-Augmented Generation (RAG) pattern

The RAG pattern enhances LLM responses by first retrieving relevant facts from a private knowledge base using vector search and then injecting that context into the prompt.
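The injection step can be sketched as follows. The retrieval itself is assumed to have happened already; `build_rag_messages`, the prompt wording, and the sample facts are illustrative assumptions, not the speaker's actual prompt.

```python
def build_rag_messages(question, facts):
    """Put retrieved facts into the system prompt and the question into a user message."""
    context = "\n\n".join(f"[{i}] {fact}" for i, fact in enumerate(facts, start=1))
    system_prompt = (
        "Answer the user's question using only the facts below. "
        "If the facts do not contain the answer, say that you do not know.\n\n"
        f"Facts:\n{context}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]

# Invented sample facts standing in for vector search results.
facts = [
    "Students must report absences by 8 am.",
    "Absences can be reported by phone or email.",
]
messages = build_rag_messages("How do I report an absence?", facts)
print(messages[0]["content"])
```

Numbering the facts makes it easier to ask the model to cite which fact an answer came from.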

#5 about 4 minutes

Demo overview of building a school wiki assistant

A practical demonstration shows how to build a Q&A assistant for a school's private wiki using a crawler, an indexer, and a query application.

#6 about 8 minutes

Step 1: Crawling and pre-processing the source data

The first step in the RAG pipeline involves building a custom crawler to extract, clean, and convert source data into a usable format like Markdown.
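The cleaning part of this step might look like the sketch below, which uses only Python's standard `html.parser`. It is a deliberately small converter under stated assumptions (headings, paragraphs, and list items only; navigation, footers, scripts, and styles dropped), not the crawler from the talk, and the sample page is invented.

```python
from html.parser import HTMLParser

class MarkdownExtractor(HTMLParser):
    """Tiny HTML-to-Markdown converter for headings, paragraphs, and list items."""

    SKIP = {"script", "style", "nav", "footer"}
    HEADINGS = {"h1": "# ", "h2": "## ", "h3": "### "}

    def __init__(self):
        super().__init__()
        self.blocks = []     # finished Markdown blocks
        self.current = []    # text fragments of the block being built
        self.prefix = ""     # Markdown prefix of the block being built
        self.skip_depth = 0  # > 0 while inside an element we drop

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag in self.HEADINGS:
            self._flush()
            self.prefix = self.HEADINGS[tag]
        elif tag == "li":
            self._flush()
            self.prefix = "- "
        elif tag == "p":
            self._flush()

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in self.HEADINGS or tag in ("p", "li"):
            self._flush()

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.current.append(data.strip())

    def _flush(self):
        """Close the block being built and reset the state."""
        if self.current:
            self.blocks.append(self.prefix + " ".join(self.current))
        self.current = []
        self.prefix = ""

def html_to_markdown(html):
    parser = MarkdownExtractor()
    parser.feed(html)
    parser.close()
    parser._flush()
    return "\n\n".join(parser.blocks)

page = """
<html><body>
<nav><a href="/">Home</a></nav>
<h1>Absence policy</h1>
<p>Students must report <b>absences</b> by 8 am.</p>
<ul><li>By phone</li><li>By email</li></ul>
<script>track()</script>
</body></html>
"""
markdown = html_to_markdown(page)
print(markdown)
```

Inline fragments are joined with single spaces, which is good enough for embedding input but can misplace whitespace around punctuation; a production crawler would likely use a dedicated conversion library.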

#7 about 6 minutes

Step 2: Indexing embeddings into a vector database

An indexer application iterates through pre-processed documents, calculates their embeddings via the OpenAI API, and stores them in Azure Cognitive Search for fast retrieval.
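The indexer loop can be sketched like this. Everything that touches a real service is replaced by a stand-in: `fake_embed` is a hypothetical placeholder for the embeddings API call (it just counts vowels and carries no semantic meaning), the record field names are assumptions, and the resulting records are printed instead of being uploaded to a search index.

```python
from typing import Callable, Dict, List

Vector = List[float]

def fake_embed(text: str) -> Vector:
    """Hypothetical stand-in for an embeddings API call: vowel counts, not semantics."""
    return [float(text.lower().count(ch)) for ch in "aeiou"]

def build_index_records(
    documents: Dict[str, str], embed: Callable[[str], Vector]
) -> List[dict]:
    """Turn pre-processed documents into records holding id, content, and vector."""
    records = []
    for doc_id, content in documents.items():
        records.append({"id": doc_id, "content": content, "vector": embed(content)})
    return records

# Invented sample documents standing in for the crawler's Markdown output.
documents = {
    "absences": "Students must report absences by 8 am.",
    "cafeteria": "The cafeteria opens at noon.",
}
records = build_index_records(documents, fake_embed)
for record in records:
    print(record["id"], record["vector"])
```

Passing the embedding function in as a parameter keeps the loop testable offline; swapping in a real API client would not change `build_index_records`.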

#8 about 5 minutes

Step 3: Querying the system using the RAG pattern

The query application generates an embedding for the user's question, performs a vector search to find relevant documents, and injects them into a system prompt for the LLM.
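The vector search in the middle of that flow boils down to ranking stored vectors by similarity to the question's vector. The sketch below does this in memory with a dot product, assuming unit-length vectors; a search service performs the same ranking at scale. The record ids and vectors are hand-written toy values, and `top_k` is a hypothetical helper name.

```python
def dot(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def top_k(query_vector, records, k=2):
    """Return the k records whose vectors are most similar to the query vector."""
    scored = [(dot(query_vector, record["vector"]), record) for record in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record for _, record in scored[:k]]

# Toy index (assumption): three documents with hand-written unit vectors.
records = [
    {"id": "holidays", "vector": [1.0, 0.0, 0.0]},
    {"id": "absences", "vector": [0.0, 1.0, 0.0]},
    {"id": "cafeteria", "vector": [0.0, 0.0, 1.0]},
]

# Toy question embedding, closest to "absences", then "holidays".
question_vector = [0.6, 0.8, 0.0]

hits = top_k(question_vector, records, k=2)
print([hit["id"] for hit in hits])
```

The content of the returned records is what gets placed into the system prompt before the question is sent to the model.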

#9 about 5 minutes

Live demonstration of the wiki Q&A assistant

The command-line assistant successfully answers specific questions about school policies by retrieving information from the wiki, even handling multi-language queries.

#10 about 13 minutes

Q&A on embedding calculation, ethics, and tooling

The speaker answers audience questions about how embeddings are calculated, ensuring answer correctness, responsible AI development, and recommended developer tools.

Matching moments

09:22 MIN

Exploring Microsoft's Azure AI services and tools

Inside the AI Revolution: How Microsoft is Empowering the World to Achieve More

10:49 MIN

Live demo of building a chat with your data app

Inside the AI Revolution: How Microsoft is Empowering the World to Achieve More

04:24 MIN

A practical walkthrough of the Azure AI Foundry playground

How Mixed Reality, Azure AI and Drones turned me into a Magician?

04:47 MIN

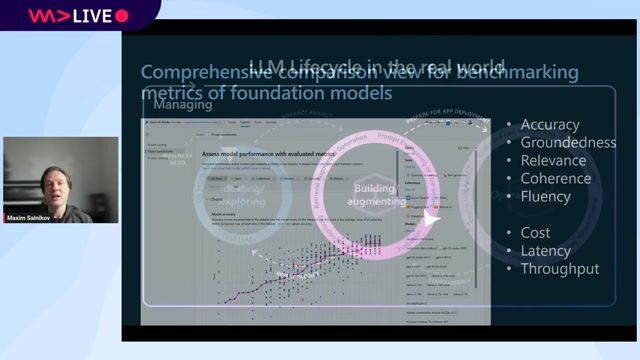

Building applications with RAG and Azure Prompt Flow

From Traction to Production: Maturing your LLMOps step by step

03:15 MIN

The new AI engineer role and the RAG pipeline

Chatbots are going to destroy infrastructures and your cloud bills

06:50 MIN

Showcasing real-time AI application examples

Convert batch code into streaming with Python

04:45 MIN

Understanding the core components of a GenAI stack

Building Products in the era of GenAI

01:06 MIN

Moving beyond hype with real-world generative AI

Semantic AI: Why Embeddings Might Matter More Than LLMs

Featured Partners

Related Videos

26:25

26:25Building Blocks of RAG: From Understanding to Implementation

Ashish Sharma

58:06

58:06From Syntax to Singularity: AI’s Impact on Developer Roles

Anna Fritsch-Weninger

56:55

56:55Multimodal Generative AI Demystified

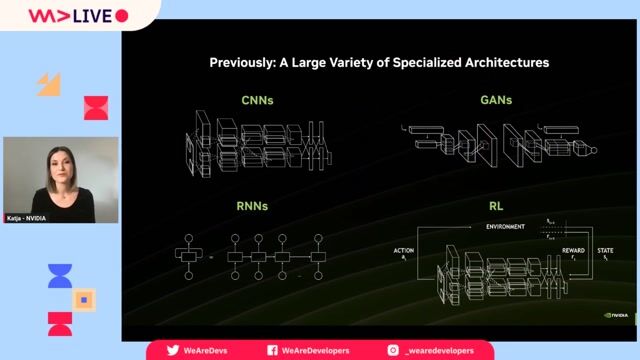

Ekaterina Sirazitdinova

59:38

59:38What comes after ChatGPT? Vector Databases - the Simple and powerful future of ML?

Erik Bamberg

58:00

58:00Creating Industry ready solutions with LLM Models

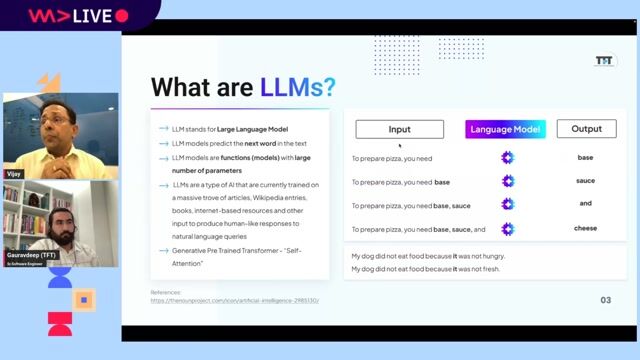

Vijay Krishan Gupta & Gauravdeep Singh Lotey

1:09:49

1:09:49Inside the AI Revolution: How Microsoft is Empowering the World to Achieve More

Simi Olabisi

24:24

24:24Best practices: Building Enterprise Applications that leverage GenAI

Damir

27:12

27:12GenAI Unpacked: Beyond Basic

Damir

Related Articles

View all articles
