Marcy Charollois

Racism fuels users' experiences: why you should understand the strength of biased narratives.

#1 about 3 minutes

Personal experiences reveal the harm of racial bias

A personal narrative illustrates how being categorized by race can be a dehumanizing experience from childhood into professional life.

#2 about 4 minutes

Challenging the "neutral" default in user experience

The concept of a "neutral" user experience is often a white-centric default, as shown by examples like bandaids and an inclusive luggage design project.

#3 about 2 minutes

Adding an ethical layer to core UX goals

Standard UX goals like functionality and usability must be expanded to include ethical responsibility to move beyond a white-driven product mindset.

#4 about 4 minutes

Using cognitive biases to improve user representation

Understanding the "norm" and leveraging cognitive biases like the peak-end rule helps strategically place diverse representations where they will be most memorable.

#5 about 2 minutes

How facial recognition fails diverse cultural expressions

Technology like Face ID often fails to recognize people with traditional tattoos like the Māori Tā moko, treating them as errors due to biased datasets.

#6 about 3 minutes

Addressing the legacy of slavery in tech

The historical context of slavery reveals why language like "master/slave" is harmful and how dehumanizing biases reappear in algorithms that misidentify Black people.

#7 about 3 minutes

Practical design tactics for global inclusivity

Inclusive design requires practical considerations like offering diverse emoji skin tones, accommodating various name formats, and supporting different reading directions through internationalization.

#8 about 2 minutes

Building inclusive design systems and user research practices

Create your own inclusive design system and conduct user research with empathy by prioritizing consent, cultural appropriateness, and data vulnerability.

#9 about 5 minutes

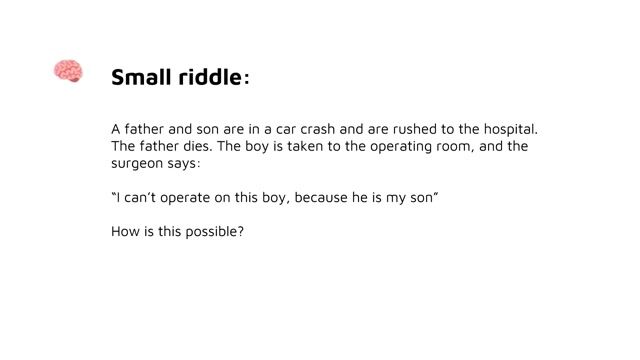

Confronting systemic racism's impact on algorithms

To build anti-racist tech, we must question our privileges and recognize how systemic biases lead to harmful outcomes in healthcare and recruiting algorithms.
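
The design tactics named in chapter #7 (emoji skin tones, varied name formats, reading direction) can be illustrated with a minimal Python sketch. This is not taken from the talk. The function names `with_skin_tone`, `text_direction`, and `display_name` are hypothetical, and the direction check is a simplified first-strong-character heuristic rather than the full Unicode bidirectional algorithm.

```python
import unicodedata

# Skin tone modifiers defined by Unicode (U+1F3FB to U+1F3FF).
SKIN_TONE_MODIFIERS = {
    "light": "\U0001F3FB",
    "medium-light": "\U0001F3FC",
    "medium": "\U0001F3FD",
    "medium-dark": "\U0001F3FE",
    "dark": "\U0001F3FF",
}


def with_skin_tone(base_emoji: str, tone: str) -> str:
    """Append a skin tone modifier to a single-code-point base emoji.

    Assumption: the caller passes an emoji that accepts modifiers
    (for example the waving hand, U+1F44B).
    """
    return base_emoji + SKIN_TONE_MODIFIERS[tone]


def text_direction(text: str) -> str:
    """Return 'rtl' if the first strongly directional character is
    right-to-left (bidi class R or AL), otherwise 'ltr'."""
    for ch in text:
        bidi = unicodedata.bidirectional(ch)
        if bidi in ("R", "AL"):
            return "rtl"
        if bidi == "L":
            return "ltr"
    return "ltr"  # no strong character found: fall back to left-to-right


def display_name(full_name: str) -> str:
    """Keep a name exactly as the person entered it, trimming only
    surrounding whitespace, instead of forcing a first/last split."""
    return full_name.strip()


if __name__ == "__main__":
    print(with_skin_tone("\U0001F44B", "medium-dark"))
    print(text_direction("שלום"))
    print(display_name("  Nguyễn Thị Minh Khai "))
```

Storing a name as one free-form field avoids assuming that everyone has exactly one given name and one family name, and computing the direction from the content lets a layout set `dir="rtl"` only where it applies.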

Related Videos

14:29 Insight into AI-Driven Design

Madalena Costa

28:39 It’s easy to create a good looking product, but what about a useful one?

Eleftheria Batsou

23:05 Design patterns for neurodiversity and mental health

Mina Nabinger

59:00 One size fits all! Not at all!

Ixchel Ruiz

30:32 Algorithmic Bias - Preventing Unfairness in your Algorithms

Prathyusha Charagondla

27:01 UX is a fullstack job!

Marcel Bagemihl & Miriam Becker

26:57 Hiring Talent in Tech without Unconscious Bias

Inna Busnita

21:13 Breaking the bias in Tech

Anna John