Anshul Jindal

LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

#1 · about 6 minutes

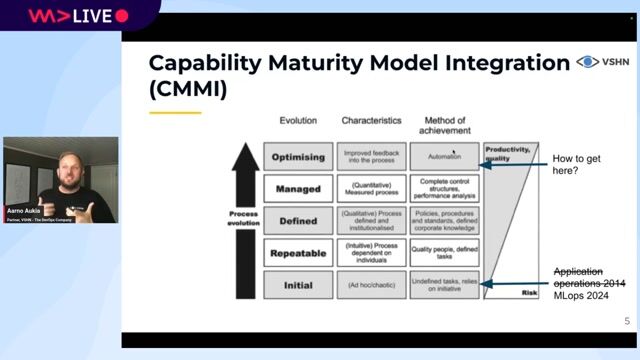

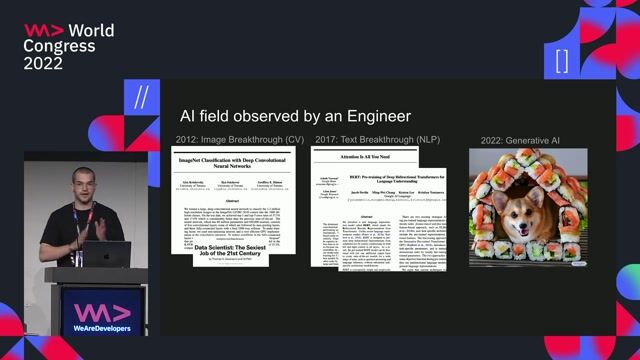

Understanding the GenAI lifecycle and its operational challenges

The continuous cycle of data processing, model customization, and deployment for GenAI applications creates production complexities, such as the lack of standardized CI/CD and versioning.

#2 · about 2 minutes

Breaking down the structured stages of an LLMOps pipeline

An effective LLMOps process moves a model from an experimental proof-of-concept through evaluation, pre-production testing, and finally to a production environment.
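The staged promotion described above can be pictured as a small state machine: a model candidate only advances when the gate for its current stage passes. The following is a minimal sketch under stated assumptions; the stage names follow the text, but the gate metrics (`finetune_succeeded`, `eval_accuracy`, `p95_latency_ms`) and their thresholds are hypothetical, not part of any NVIDIA tool.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Callable, Dict, List


class Stage(IntEnum):
    PROOF_OF_CONCEPT = 0
    EVALUATION = 1
    PRE_PRODUCTION = 2
    PRODUCTION = 3


# Hypothetical gates: each one decides whether a candidate may leave that stage.
# The metric names and thresholds are illustrative assumptions.
GATES: Dict[Stage, Callable[[dict], bool]] = {
    Stage.PROOF_OF_CONCEPT: lambda m: m.get("finetune_succeeded", False),
    Stage.EVALUATION: lambda m: m.get("eval_accuracy", 0.0) >= 0.80,
    Stage.PRE_PRODUCTION: lambda m: m.get("p95_latency_ms", float("inf")) <= 500,
}


@dataclass
class Candidate:
    name: str
    stage: Stage = Stage.PROOF_OF_CONCEPT
    history: List[Stage] = field(default_factory=list)


def try_promote(candidate: Candidate, metrics: dict) -> bool:
    """Advance the candidate one stage if the current stage's gate passes."""
    if candidate.stage == Stage.PRODUCTION:
        return False  # nothing left to promote to
    if not GATES[candidate.stage](metrics):
        return False  # gate failed: stay in the current stage
    candidate.history.append(candidate.stage)
    candidate.stage = Stage(candidate.stage + 1)
    return True


# Usage: a candidate whose metrics clear every gate ends up in production.
c = Candidate("llama-lora-v1")
metrics = {"finetune_succeeded": True, "eval_accuracy": 0.83, "p95_latency_ms": 420}
while try_promote(c, metrics):
    pass
print(c.stage.name)  # PRODUCTION
```

A candidate that fails a gate simply stays where it is, which keeps the promotion history auditable.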

#3 · about 4 minutes

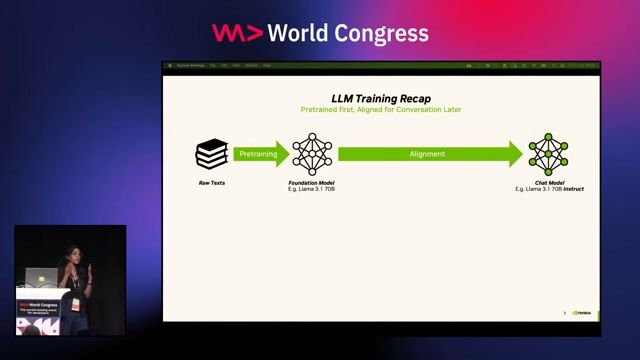

Introducing the NVIDIA NeMo microservices and ecosystem tools

NVIDIA provides a suite of tools including NeMo Curator, Customizer, Evaluator, and NIM, which integrate with ecosystem components like Argo Workflows and Argo CD for a complete LLMOps solution.

#4 · about 4 minutes

Using NeMo Customizer and Evaluator for model adaptation

NeMo Customizer and Evaluator simplify model adaptation through API requests that trigger fine-tuning on custom datasets and benchmark the resulting model's performance.
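Because customization is triggered by an API request, a fine-tuning job boils down to one JSON POST. The sketch below only builds that request with the Python standard library and does not send it. The endpoint path `/v1/customization/jobs` and the payload fields (`config`, `dataset`, `hyperparameters`, `output_model`) are assumptions based on our reading of the NeMo Customizer API and should be checked against the current reference; the service URL, dataset, and output model names are hypothetical.

```python
import json
import urllib.request

# Hypothetical in-cluster address of the NeMo Customizer service.
CUSTOMIZER_URL = "http://nemo-customizer.example:8000"


def build_customization_request(base_url, config, dataset_name, namespace,
                                output_model, epochs=3, learning_rate=1e-4,
                                adapter_dim=16):
    """Build (but do not send) a POST request for a LoRA fine-tuning job.

    The endpoint and field names are assumptions; verify them against the
    NeMo Customizer API reference for your version.
    """
    payload = {
        "config": config,  # base-model customization config (assumed naming)
        "dataset": {"name": dataset_name, "namespace": namespace},
        "output_model": output_model,
        "hyperparameters": {
            "training_type": "sft",
            "finetuning_type": "lora",
            "epochs": epochs,
            "learning_rate": learning_rate,
            "lora": {"adapter_dim": adapter_dim},
        },
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/customization/jobs",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_customization_request(
    CUSTOMIZER_URL, "meta/llama-3.1-8b-instruct",
    "support-tickets", "default", "default/support-lora",
)
# To actually submit it (requires a running service):
# with urllib.request.urlopen(req) as resp:
#     job = json.load(resp)
```

Keeping request construction separate from sending makes the payload easy to unit-test inside a pipeline step before any GPU time is spent.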

#5 · about 3 minutes

Deploying and scaling models with NVIDIA NIM on Kubernetes

NVIDIA NIM packages models into optimized inference containers that can be deployed and auto-scaled on Kubernetes using the NIM operator, with support for multiple fine-tuned adapters.
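NIM for LLMs exposes an OpenAI-compatible `/v1/chat/completions` endpoint, and when LoRA adapters are loaded, a request selects one by putting the adapter's name in the `model` field. The sketch below builds two otherwise identical requests, one for the base model and one for an adapter, without sending them. The service URL and the adapter name `customer-support-lora-v1` are hypothetical.

```python
import json
import urllib.request

NIM_URL = "http://llm-nim.example:8000"  # hypothetical Kubernetes service address


def chat_request(base_url, model, prompt, max_tokens=128):
    """Build an OpenAI-style chat completion request for a NIM endpoint."""
    body = {
        "model": model,  # base model name or a loaded LoRA adapter name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


prompt = "Summarize our refund policy."
base_req = chat_request(NIM_URL, "meta/llama-3.1-8b-instruct", prompt)
lora_req = chat_request(NIM_URL, "customer-support-lora-v1", prompt)
```

Since only the `model` field differs, one NIM deployment can serve several fine-tuned variants, and clients switch between them without a new endpoint.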

#6 · about 4 minutes

Automating complex LLM workflows with Argo Workflows

Argo Workflows enables the creation of automated, multi-step pipelines by stitching together containerized tasks for data processing, model customization, evaluation, and deployment.
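An Argo Workflow that stitches steps together can be expressed as a DAG template whose tasks declare `dependencies`. The sketch below generates such a manifest as a Python dict (JSON output is also valid YAML) and uses `graphlib` to confirm the execution order. The step names, the single generic `run-step` container template, and the `python:3.11-slim` image are illustrative assumptions; a real pipeline would use a dedicated template and image per stage.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical pipeline steps mapped to their upstream dependencies.
STEPS = {
    "curate-data": [],
    "customize": ["curate-data"],
    "evaluate": ["customize"],
    "deploy": ["evaluate"],
}


def build_workflow(steps, image="python:3.11-slim"):
    """Return an Argo Workflow manifest with one DAG task per step."""
    tasks = []
    for name, deps in steps.items():
        task = {
            "name": name,
            "template": "run-step",
            "arguments": {"parameters": [{"name": "step", "value": name}]},
        }
        if deps:
            task["dependencies"] = deps  # Argo waits for these tasks first
        tasks.append(task)
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "llmops-pipeline-"},
        "spec": {
            "entrypoint": "pipeline",
            "templates": [
                {"name": "pipeline", "dag": {"tasks": tasks}},
                {
                    "name": "run-step",
                    "inputs": {"parameters": [{"name": "step"}]},
                    "container": {
                        "image": image,
                        "command": ["python", "-c"],
                        # {{...}} is Argo's template syntax, not Python formatting.
                        "args": ["print('running {{inputs.parameters.step}}')"],
                    },
                },
            ],
        },
    }


order = list(TopologicalSorter(STEPS).static_order())
manifest = build_workflow(STEPS)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from a dependency map keeps the pipeline definition in one place and lets a test check the ordering before the workflow is submitted.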

#7 · about 3 minutes

Implementing a GitOps approach for end-to-end LLMOps

Using Git as the single source of truth, Argo CD automates the deployment and management of all LLMOps components, including microservices and workflows, onto Kubernetes clusters.
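In the GitOps model, each component is registered with Argo CD as an `Application` that points at a path in a Git repository, and automated sync keeps the cluster matched to that path. The sketch below builds such a manifest as a Python dict; the field names follow the Argo CD `Application` spec, while the repository URL, path, and namespaces are hypothetical.

```python
import json


def argocd_application(name, repo_url, path, namespace, revision="main"):
    """Return an Argo CD Application that syncs `path` from Git into `namespace`."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            "destination": {
                "server": "https://kubernetes.default.svc",  # the local cluster
                "namespace": namespace,
            },
            # prune removes resources deleted from Git; selfHeal reverts manual drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


app = argocd_application(
    "llmops-nim", "https://git.example.com/platform/llmops.git", "deploy/nim", "llmops"
)
print(json.dumps(app, indent=2))
```

One such Application per component (microservices, workflow templates) lets a Git commit, rather than a manual `kubectl` command, drive every change to the cluster.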

#8 · about 3 minutes

Demonstrating the automated LLMOps pipeline in action

A practical demonstration shows how Argo CD manages deployed services and how a data scientist can launch a complete fine-tuning workflow through the Argo Workflows UI, with results tracked in MLflow.


Matching moments

03:02 MIN

Defining LLMOps and understanding its core benefits

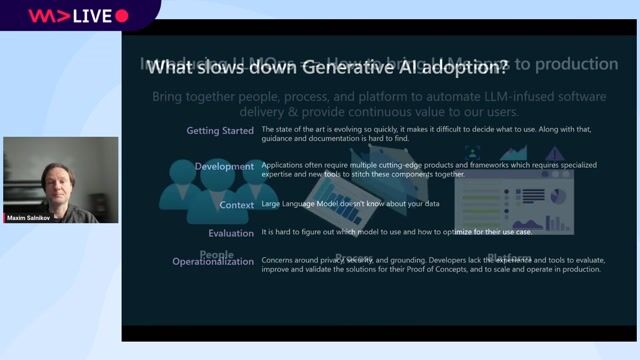

From Traction to Production: Maturing your LLMOps step by step

06:54 MIN

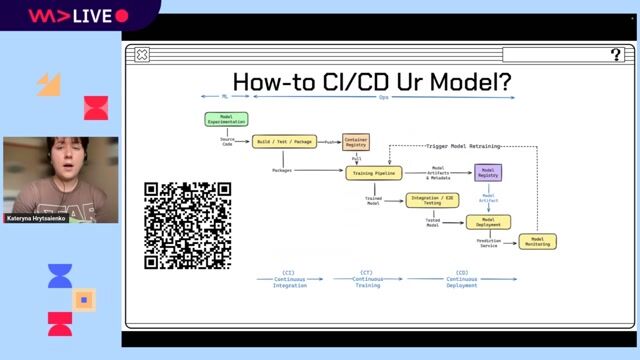

Implementing a CI/CD pipeline for your NLP model

Multilingual NLP pipeline up and running from scratch

01:33 MIN

The convergence of ML and DevOps in MLOps

AI Model Management Life Circles: ML Ops For Generative AI Models From Research to Deployment

04:56 MIN

What MLOps is and the engineering challenges it solves

MLOps - What’s the deal behind it?

03:30 MIN

Introducing NVIDIA NIM for simplified LLM deployment

Efficient deployment and inference of GPU-accelerated LLMs

02:49 MIN

Q&A: MLOps tools for building CI/CD pipelines

Data Science in Retail

03:08 MIN

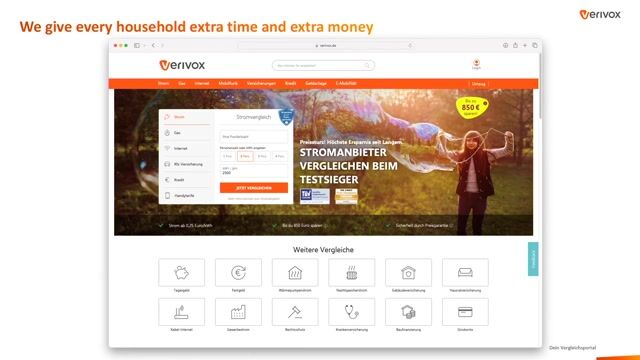

Understanding the role and challenges of MLOps

The Road to MLOps: How Verivox Transitioned to AWS

02:50 MIN

Understanding the core principles and lifecycle of MLOps

MLOps on Kubernetes: Exploring Argo Workflows


